DevOps Predictions for 2022

DevOps had a dream run in 2021 and looks set to continue it into 2022. According to ResearchandMarkets, the global DevOps market was estimated at $4.31 billion in 2020 and $5.11 billion in 2021. This value is expected to reach $12.21 billion by 2026, growing at a CAGR of 18.95% between 2021 and 2026.
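
The growth rates in market reports are compound annual growth rates (CAGR): CAGR = (end value / start value)^(1 / years) - 1. The short Python sketch below checks the ResearchandMarkets figures quoted above against that formula. The function names are purely illustrative.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: float) -> float:
    """Value reached by compounding start_value at `rate` for `years`."""
    return start_value * (1 + rate) ** years


# ResearchandMarkets figures quoted above, in USD billions.
print(f"Implied CAGR 2021-2026: {cagr(5.11, 12.21, 5):.2%}")
print(f"2026 value at 18.95%: ${project(5.11, 0.1895, 5):.2f}B")
```

With the 2021 and 2026 figures, the implied rate works out to roughly 19%, close to the reported 18.95%. The small gap is consistent with rounding in the published numbers.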

DevOps is innovating at a rapid pace. As such, organizations should proactively monitor technology changes and reinvent IT strategies accordingly. Here are the top DevOps predictions for 2022.

1) Distributed Cloud Environments

After hybrid and multi-cloud environments, distributed cloud networks are rapidly gaining popularity. A distributed cloud environment hosts backend services on different cloud networks in different geolocations while offering a single pane of glass to monitor and manage the entire infrastructure as one cloud deployment. It allows you to tailor service delivery for specific apps so that they are faster and more responsive, while complying with local government regulations. Distributed clouds bring high resilience and help prevent data loss and service disruptions, as your apps keep running even when servers in one region fail. This makes high-availability targets such as 99.99% uptime more achievable. Edge computing can be considered an extension of distributed cloud networks.
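
To put the 99.99% figure in perspective, here is a rough Python sketch of the availability arithmetic. It assumes that region failures are independent and that traffic fails over instantly, both optimistic simplifications; the function names are illustrative.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # leap years ignored


def downtime_minutes_per_year(availability: float) -> float:
    """Downtime per year permitted by an availability level (0 to 1)."""
    return (1 - availability) * MINUTES_PER_YEAR


def combined_availability(region_availability: float, regions: int) -> float:
    """Availability of a service that stays up while any one region is up.

    Assumes independent region failures and instant failover.
    """
    return 1 - (1 - region_availability) ** regions


print(f"99.99% allows {downtime_minutes_per_year(0.9999):.1f} minutes/year")
print(f"Two 99.9% regions: {combined_availability(0.999, 2):.4%}")
```

Under these assumptions, 99.99% uptime still allows roughly 52 minutes of downtime a year, and two regions at 99.9% each combine to about 99.9999%. Correlated failures, such as a faulty deployment pushed to every region at once, erode this advantage in practice.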

Distributed clouds offer benefits across industries. For instance, autonomous vehicles can monitor and process sensor data on board while sending engine and traffic data to the central cloud. Similarly, OTT platforms can leverage ‘Intelligent Caching’, wherein content in multiple formats is cached at different CDNs while transcoding is done in the central cloud. That way, a newly released popular series can be streamed seamlessly and in real time to many mobile devices in the same region.

2) Serverless Architecture

Serverless architecture is a cloud-native architectural pattern that enables organizations to build and run applications without worrying about provisioning and managing servers. The cloud provider allocates and manages server and machine resources on demand. Because apps can be deployed faster, serverless architecture accelerates innovation. Apps can be decomposed into independent, event-driven services with clear observability. As a result, organizations can reduce costs and focus on delivering a better UX.

Serverless computing is evolving rapidly. Function as a Service (FaaS), a model built on serverless architecture, eliminates the need for complex infrastructure to deploy and run microservices apps. Another growing trend is hybrid and multi-cloud serverless deployments, which improve productivity and cost-effectiveness. Serverless on Kubernetes is another trend that lets organizations run apps wherever Kubernetes runs, simplifying the work of developers and operations teams with mature, serverless-style solutions. Serverless IoT is yet another model that brings high scalability and faster time to market while reducing overhead and operational costs in data-driven environments. It is also changing the way data is secured in serverless environments.
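
To make the FaaS idea concrete, here is a minimal function written against AWS Lambda’s Python handler convention, `lambda_handler(event, context)`. AWS is only an assumed example provider, and the S3-style event is simplified for illustration. The point is that the code contains no server setup at all.

```python
import json


def lambda_handler(event, context):
    """Entry point the platform invokes once per event.

    The function keeps no server state; the provider starts, scales and
    stops instances as events arrive. The event shape mirrors a simplified
    S3 "object created" notification.
    """
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = record["s3"]["object"]["key"]
        # Real work (resize an image, parse a file, ...) would happen here.
        processed.append(f"{bucket}/{key}")
    return {"statusCode": 200, "body": json.dumps({"processed": processed})}


if __name__ == "__main__":
    # Local invocation with a hand-built event; no cloud account needed.
    sample = {"Records": [{"s3": {"bucket": {"name": "uploads"},
                                  "object": {"key": "photo.jpg"}}}]}
    print(lambda_handler(sample, context=None))
```

The platform, not the developer, decides how many copies of this function run at any moment, which is where the cost and scaling benefits come from.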

3) DevSecOps

DevSecOps is a DevOps pattern that makes security a shared responsibility across the application lifecycle. Earlier, security was handled by an isolated team at the final stage of product development. However, in today’s DevOps era, where apps are deployed in short cycles, security can no longer wait until the end. DevSecOps therefore integrates security and compliance into the CI/CD pipeline, making it everyone’s responsibility. In 2022, expect more focus on shifting security left, that is, into the earlier stages of the CI/CD pipeline.

DevSecOps brings more automation and policy-driven security, with QA teams running automated tests so that compliance gaps and security vulnerabilities are caught throughout the product lifecycle. The design-for-failure philosophy is likely to be reinvented as well.
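
One common way to shift security left is a pipeline stage that fails the build when a dependency is known to be vulnerable. The sketch below is hypothetical: the advisory list, package names and function names are made up, and a real pipeline would query a vulnerability database or a dedicated scanner instead.

```python
# Hypothetical advisory data: package name -> versions with known issues.
KNOWN_VULNERABLE = {
    "examplelib": {"1.0.0", "1.0.1"},
    "otherlib": {"2.3.0"},
}


def parse_requirements(lines):
    """Yield (package, version) pairs from 'name==version' pin lines."""
    for line in lines:
        line = line.split("#", 1)[0].strip()  # drop inline comments
        if "==" in line:
            name, version = line.split("==", 1)
            yield name.strip().lower(), version.strip()


def security_gate(lines):
    """Return findings for pinned versions that appear on the advisory list."""
    return [
        f"{name}=={version}"
        for name, version in parse_requirements(lines)
        if version in KNOWN_VULNERABLE.get(name, set())
    ]


def main(lines) -> int:
    findings = security_gate(lines)
    for finding in findings:
        print(f"BLOCKED: {finding} has a known vulnerability")
    # CI runners treat a non-zero exit status as a failed stage.
    return 1 if findings else 0


if __name__ == "__main__":
    print("exit status:", main(["examplelib==1.0.1", "safe-lib==4.2  # pinned"]))
```

In a CI job, the script would end with `sys.exit(main(lines))` so that a non-zero status stops the pipeline before anything is deployed.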

4) AIOps and MLOps

Today, regardless of size or industry, every organization generates huge volumes of data every day. Traditional analytics solutions struggle to process this data in real time. For this reason, artificial intelligence and machine learning algorithms have become mainstream.

Data scientists working on AI and ML have traditionally worked outside version control systems. Now, CI/CD and automated infrastructure provisioning are being applied to AIOps and MLOps as well. This means you can version your models and algorithms and track how changes evolve and affect the environment. In case of an error, you can simply revert to an earlier version.
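
The versioning and rollback idea can be sketched as a toy, in-memory model registry. This is a hypothetical illustration, not any particular tool’s API; real teams typically rely on dedicated tools such as MLflow’s model registry or DVC.

```python
import hashlib
import json


class ModelRegistry:
    """Toy registry: every version is kept, so a bad release can be rolled
    back the same way code is reverted in Git."""

    def __init__(self):
        self._versions = []  # (version_id, params) in release order
        self._active = None

    def register(self, params: dict) -> str:
        # Content-addressed ID: identical parameters give the same version.
        blob = json.dumps(params, sort_keys=True).encode()
        version_id = hashlib.sha256(blob).hexdigest()[:12]
        self._versions.append((version_id, params))
        self._active = version_id
        return version_id

    def active(self) -> str:
        return self._active

    def rollback(self) -> str:
        """Drop the newest version and reactivate the previous one."""
        if len(self._versions) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._versions.pop()
        self._active = self._versions[-1][0]
        return self._active


registry = ModelRegistry()
v1 = registry.register({"learning_rate": 0.01, "layers": 3})
v2 = registry.register({"learning_rate": 0.1, "layers": 3})
registry.rollback()  # v2 misbehaves in production: reactivate v1
print(registry.active() == v1)
```

Deriving the version ID from the parameters means the same configuration always maps to the same version, which makes it easy to tell whether two deployments actually differ.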

5) Infrastructure as Code (IaC)

Infrastructure as Code is another growing trend that will become mainstream in 2022. Infrastructure as Code (IaC) is a method of managing the complete IT infrastructure through configuration files. As cloud-native architectures grow in popularity, IaC enables organizations to automate the provisioning and management of IT resources by defining the runtime infrastructure in machine-readable files. IaC brings consistency to setup and configuration, enhances productivity, minimizes human error and increases operational efficiency while optimizing costs.
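
At its core, an IaC tool compares the infrastructure declared in files with what actually exists and works out the changes needed. The Python sketch below illustrates that comparison in the spirit of a `terraform plan` preview; the resource names and attributes are hypothetical, and this is not how any specific tool is implemented.

```python
def plan(desired: dict, actual: dict) -> list:
    """List the actions needed to make `actual` match `desired`.

    Both arguments map a resource name to its configuration dict.
    """
    actions = []
    for name, config in sorted(desired.items()):
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != config:
            actions.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Desired state, as it might be declared in a version-controlled file.
desired = {
    "web-vm": {"size": "medium", "region": "us-east"},
    "db-vm": {"size": "large", "region": "us-east"},
}
# Actual state, as it might be read back from the cloud provider.
actual = {
    "web-vm": {"size": "small", "region": "us-east"},
    "old-vm": {"size": "small", "region": "eu-west"},
}
for action, name in plan(desired, actual):
    print(action, name)
```

Running the plan against an environment that already matches the declaration yields no actions. This idempotence is what makes IaC setups repeatable and consistent.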

GitOps is a new entrant in this space. Building on the IaC pattern and the Git version control system, GitOps enables you to easily manage the underlying infrastructure as well as Kubernetes clusters. By combining IaC and GitOps, organizations can build self-service, developer-centric infrastructure that offers speed, consistency and traceability.

Leverage the Communication Revolution with VoLTE-enabled PCRF Systems

Networks are going through two major shifts: voice services are moving over IP, and networks are moving to the cloud. VoLTE (Voice over LTE) has now become mainstream. VoLTE services allow an enterprise to deliver a better customer experience with a modernized voice service. In addition to SMS and voice calls, VoLTE enables high-quality video communication and lets calls extend seamlessly across a wide range of devices such as laptops, tablets, IoT devices and TVs. According to Mordor Intelligence, the VoLTE market earned revenue of $3.7 billion in 2020. This value is expected to reach $133.57 billion, growing at a CAGR of 56.57% between 2021 and 2026.

While VoLTE is revolutionizing the communication segment, service providers are unable to fully leverage it because of legacy PCRF systems. Upgrading the PCRF is the need of the hour.

An Overview of PCRF

Policy and Charging Rules Function (PCRF) is a critical component of a Long-Term Evolution (LTE) network that makes dynamic policy and charging control decisions on a per-subscriber and per-IP-flow basis. It builds on the capabilities of earlier 3GPP releases and extends them to provide QoS authorization, so that different data flows are treated in accordance with each user’s subscription profile.
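
The per-subscriber, per-flow decision can be pictured as a lookup from flow type to QoS class. The sketch below is heavily simplified: a real PCRF exchanges Diameter messages with the gateway (Gx) and the IMS (Rx), whereas this only models the decision table. The QCI values follow the standardized classes in 3GPP TS 23.203; the charging key format and function names are hypothetical.

```python
from dataclasses import dataclass

# Subset of the standardized QCIs from 3GPP TS 23.203:
# flow type -> (QCI, guaranteed bit rate?)
QCI_BY_FLOW = {
    "voice": (1, True),            # conversational voice
    "video_call": (2, True),       # conversational (live) video
    "ims_signalling": (5, False),  # IMS signalling
    "internet": (9, False),        # typical default, best-effort data
}


@dataclass
class PolicyDecision:
    qci: int
    gbr: bool
    charging_key: str


def decide(subscriber_plan: str, flow_type: str) -> PolicyDecision:
    """Per-subscriber, per-flow decision: the flow type selects the QoS
    class and the subscriber's plan selects how the flow is charged."""
    qci, gbr = QCI_BY_FLOW.get(flow_type, QCI_BY_FLOW["internet"])
    # Hypothetical charging key format; operators define their own.
    return PolicyDecision(qci, gbr, charging_key=f"{subscriber_plan}:{flow_type}")


print(decide("premium", "voice"))
print(decide("basic", "unknown_app"))
```

Unknown flows fall back to QCI 9, the class typically used for default, best-effort traffic, so new applications still get service without consuming guaranteed-bit-rate resources.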

The need for VoLTE-enabled PCRF

Most service providers are battling legacy PCRFs that struggle to meet the scalability, performance and reliability requirements of VoLTE services. When organizations see a new business opportunity, they cannot tap it owing to BSS policy management challenges. They have to either integrate the new policy management with the legacy system or extend the legacy system to support the new policy. Another option, which is more practical and cost-effective, is to run two PCRFs. However, separating subscription traffic between them is the biggest challenge here. As a result, many businesses miss new opportunities and instead suffer customer churn and revenue losses.

Here are some of the reasons why VoLTE-enabled PCRF is the need of the hour.

Differentiated Voice Service and Support

VoLTE services open up new business opportunities for organizations. For instance, service providers can deliver communication services in a tiered model in which premium services cost more. At the same time, you can deliver higher-quality premium calls with dedicated bearer support. Your PCRF should be robust enough to differentiate call sessions and ensure dedicated voice bearers for premium subscriptions, while monitoring and managing separate charges.

Alternate Voice Support

When the customer loses LTE coverage or VoLTE is unavailable, the call should be routed to alternate voice support through a fallback mechanism: Single Radio Voice Call Continuity (SRVCC) hands an ongoing VoLTE call over to the 2G/3G circuit-switched network, while Circuit Switched Fallback (CSFB) moves call setup to that network. Legacy PCRF systems are not efficient enough to support both methods.

Regulatory Compliance

Along with quality voice services, the communications service provider must ensure that safety regulations are adhered to and prioritized. For instance, when a customer makes an emergency call, the PCRF should identify the subscriber’s location and override the current subscription plan to give the call QoS priority. A modern PCRF helps you do so.
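
Tiered voice service and the emergency override described above can both be expressed as a policy selection step. In this hypothetical sketch, the allocation and retention priority (ARP) follows the 3GPP convention where 1 is the highest priority, but the tier names, bit rates and priority values are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VoiceBearerPolicy:
    arp_priority: int   # 3GPP ARP: 1 is the highest priority, 15 the lowest
    may_preempt: bool   # may this call take resources from lower-priority ones?
    gbr_kbps: int       # guaranteed bit rate for the voice bearer
    billable: bool


# Hypothetical per-tier settings, chosen only for illustration.
TIER_POLICIES = {
    "premium": VoiceBearerPolicy(arp_priority=5, may_preempt=False, gbr_kbps=64, billable=True),
    "standard": VoiceBearerPolicy(arp_priority=9, may_preempt=False, gbr_kbps=40, billable=True),
}
EMERGENCY_POLICY = VoiceBearerPolicy(arp_priority=1, may_preempt=True, gbr_kbps=64, billable=False)


def voice_policy(tier: str, is_emergency: bool) -> VoiceBearerPolicy:
    """Pick the bearer policy for a call; emergencies override the plan."""
    if is_emergency:
        return EMERGENCY_POLICY
    return TIER_POLICIES.get(tier, TIER_POLICIES["standard"])


print(voice_policy("standard", is_emergency=True))
```

The emergency branch is checked first, so no subscription setting can downgrade an emergency call.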

Real-time Policy and Charge Management

With a variety of monetization opportunities available to enterprises, policy control backed by real-time subscription monitoring is the need of the hour. While a VoLTE session is running, businesses can sell an add-on such as a video streaming product or upgrade the subscription for a limited period. The PCRF should be able to track plan changes in real time for policy control and charging management.

As the communication segment goes through the VoLTE revolution, businesses must ensure that their PCRF is VoLTE-enabled. Failing to do so could quickly leave your business behind the competition.

CloudTern is a leading provider of communications solutions. Contact us right now to transform your legacy PCRF systems into robust VoLTE-enabled PCRF solutions!

Native App Vs Hybrid App – What to Choose?

Mobile app development has surged in recent times, and the reason is simple. There are 4.4 billion mobile users globally, as reported by DealSunny. Every hour, people make 68 million Google searches generating $3 million in revenue, make 8 million purchases on PayPal, open 2 billion emails, and send 768 million text messages and 1 billion WhatsApp messages. Businesses are quickly leveraging this mobile revolution to stay ahead of the competition. Companies build mobile apps to provide a superior customer experience, tap into new markets, engage with customers, boost sales and stay competitive.

One of the key challenges when building a mobile app is choosing between the native and hybrid development models. Both app types have pros and cons, so your product goals and business objectives should decide which one best suits your organization. Here is a comparison of the two.

Native App Vs Hybrid App: Overview

A native app is built for a specific platform and OS and uses a programming language supported by that platform. While building a native app, developers use the platform’s Integrated Development Environment (IDE), SDK, interface elements and development tools. For instance, a native iOS app is written in Objective-C or Swift, while a native Android app is written in Java or Kotlin.

A hybrid app is platform-agnostic and OS-agnostic which means you can run it on iOS, Android, Windows and other platforms. Hybrid apps are built using HTML5, CSS, and JavaScript. A hybrid app is actually a web app that is wrapped with a native interface.

Native App Vs Hybrid App: Development

Developing native apps takes longer and costs more than developing hybrid apps. To build an iOS app, developers use Swift or Objective-C; native Android apps are built with Java or Kotlin. This gives developers full access to the platform’s feature set and OS functionality. However, they need expert knowledge of the language to work with OS components. Moreover, you have to maintain separate code bases for iOS and Android.

Hybrid app development is easier, as a single code base runs on multiple platforms. The app’s logic and UI are written in HTML, CSS and JavaScript and rendered inside a WebView, which is wrapped in a native shell that users install like any other app. Hybrid apps don’t need a web browser, and they can access device hardware and APIs through plugins. However, they depend on a third-party framework for the native wrapper. Because they rely on frameworks and libraries such as Ionic or Cordova, hybrid apps must be kept in sync with platform updates and releases.

Native App Vs Hybrid App: Performance

When it comes to performance, native apps have an edge because they are built specifically for the platform. They are responsive and fast, and they integrate seamlessly with native features such as the camera, mic, calendar and clock. The native platform also gives assurance of the quality, security and compatibility of native apps. Hybrid apps, on the other hand, are not built for a specific OS, so they are often slower. Their performance depends on how well the WebView renders the app and, for content fetched over the network, on the user’s internet connection. As a result, they generally cannot match native performance.

Native App Vs Hybrid App: User Experience

When it comes to user experience, native apps shine because they blend perfectly with the look and feel of the platform. Developers can design an app that fully matches the platform’s interface by following its UI guidelines and standards, and native apps can run both offline and online. Hybrid apps, on the other hand, are less optimized for UI/UX because they don’t target a specific OS, platform or group of users.

Native App Vs Hybrid App: Cost

Building a native app is more expensive than building a hybrid app because you have to create a separate codebase for each platform. For instance, an iOS app written in Swift will not run on an Android phone, so you have to rewrite it in Java or Kotlin, which adds to the initial cost. Updates and maintenance also require additional budget. Releasing the same features on iOS and Android at the same time is a challenge, as release cycles and updates differ between the two platforms.

Another challenge is that you need diverse skill sets to build and manage multiple versions of the same app. For instance, Swift developers might not have the same expertise in Kotlin, so you have to hire more developers. All of this adds to development time, cost and complexity. Hybrid apps, by contrast, are quick to build and deploy, cost-effective and easy to maintain. However, compatibility issues between the device and the OS might creep in over the long run.

Native App Vs Hybrid App: Which one to choose?

Hybrid apps are easy to build and manage while being cost-effective, so if your time to market is short, you can quickly build a hybrid app. However, as customer experience becomes more important to businesses, delivering a superior user experience should be the primary consideration when choosing an app development model, and native apps help you deliver great UI/UX.

Digital Transformation in Healthcare – Everything You Need to Know

The entire business world is going through a digital transformation. Organizations that saw the benefits of digital technologies embarked on this journey first, while others were forced to go digital by the unexpected pandemic lockdowns. The healthcare segment was slow to adopt digital technologies, owing to a lack of expertise in deciding where and how to invest, but recent trends show that healthcare institutions are now aggressively embracing digital transformation.

What is Digital Transformation in Healthcare?

Digital transformation in healthcare is about using digital technologies to improve healthcare operations and patient experience while making healthcare cost-effective and accessible everywhere, on demand. From online appointments and managing EHRs and medical reports to integrating departments for seamless coordination, digital transformation makes healthcare services efficient, easy to use and widely accessible.

According to Global Market Insights, the global digital healthcare market was valued at $141.8 billion in 2020 and is expected to grow at a CAGR of 17.4% between 2021 and 2028. Similarly, Grand View Research reports that the global digital health market earned revenue of $96.5 billion in 2020 and is expected to grow at a CAGR of 15.1% during 2021-2028. These numbers speak volumes about the growing popularity of digital transformation in healthcare.

How Does Digital Transformation Help Healthcare?

Digital transformation is not a silver bullet that can simply transform existing healthcare institutions. It requires proper planning and implementation. Organizations that have rightly implemented digital technologies are reaping the following benefits:

Centralized Data Management Systems

Gone are the days when patients had to wait in long queues to meet a doctor, undergo tests and scans, and then queue again for treatment. With digital technologies incorporated across the organization, patients can now schedule an appointment from the comfort of their homes and get treatment at their convenience. With a single digital ID, doctors can pull up the patient’s records and check their medical history. Similarly, diagnostics staff can retrieve the patient’s details and update them with test reports so that the doctor can prescribe the right medicine, which is then passed on to the pharmacy. With a centralized data management system, authorized staff across the healthcare organization can access the patient information they need and deliver quality care. Patient care becomes quick, easy and accessible for everyone.

Patient Portals

A patient portal is an intuitive online healthcare platform that enables patients to access their medical records, communicate with healthcare professionals, use telemedicine services etc. Patients can access their data from anywhere, on demand, and share test reports and case histories with multiple healthcare providers, gaining better control over their treatment.

Virtual Treatment / Video Call

Today, patients don’t have to visit a healthcare professional for common ailments. Instead, they can consult a medical practitioner over a video call. Whether you are in the office, at home or on the road, it is easy to find a healthcare professional and talk to them on a video call. This is especially useful in rural areas where healthcare services are scarce, so digital transformation extends healthcare to remote parts of the country. Virtual treatment helped many patients during the Covid pandemic. While these options don’t replace in-person visits, they help in times of health emergencies.

Wearable Technology

Wearable medical devices are on the rise. With their help, patients can keep track of high-risk conditions and head off health problems. For instance, you can monitor heartbeat, sweat, pulse rate and oxygen levels with a wearable device and instantly contact emergency support if needed. The device can automatically alert your designated contacts when health metrics look unusual. Not only can this help prevent a health event, it can also save on high medical expenses.
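
The automatic alerting described above boils down to comparing each reading with safe ranges and notifying contacts when a value falls outside them. The thresholds, metric names and the `notify` placeholder below are hypothetical and for illustration only, not clinical guidance.

```python
# Hypothetical safe ranges (inclusive) for illustration, not clinical guidance.
THRESHOLDS = {
    "heart_rate_bpm": (40, 130),
    "spo2_percent": (92, 100),
}


def check_reading(reading: dict) -> list:
    """Return an alert message for every metric outside its safe range."""
    alerts = []
    for metric, (low, high) in THRESHOLDS.items():
        value = reading.get(metric)
        if value is None:  # the device did not report this metric
            continue
        if not low <= value <= high:
            alerts.append(f"{metric}={value} outside {low}-{high}")
    return alerts


def notify(contacts: list, alerts: list) -> None:
    """Placeholder: a real app would send push notifications or SMS."""
    for contact in contacts:
        for alert in alerts:
            print(f"to {contact}: {alert}")


notify(["caregiver@example.com"], check_reading({"heart_rate_bpm": 142, "spo2_percent": 95}))
```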

Healthcare / Wellness Apps

Using digital technologies, healthcare professionals can design healthcare or wellness apps that enable patients to track and manage their health from the comfort of their homes. For instance, you can use a wellness app to receive recommendations on food and nutrition. Similarly, you can get mental health counselling from trained and experienced professionals on demand. There are skincare apps that can help you manage acne, allergies or other skin issues, and other apps track your blood sugar levels, eye health etc.

As healthcare is aggressively moving towards digital transformation, designing the right digital strategy with the right technology stack is the key to fully leveraging this revolution.

Contact CloudTern right now to embark on the digital transformation journey!

Top 10 Critical Questions You Should Ask While Choosing a Cloud Computing Provider

Here is a popular joke about the increasing popularity of cloud computing technologies in recent times.

Today, cloud computing has become so popular that almost every IT resource is being moved to the cloud and delivered over the Internet via a pay-per-use model.

However, cloud computing is not a silver bullet. You can’t just click a button to make everything cloud-enabled.

To fully leverage the cloud revolution, it is important to identify your cloud computing needs and design the right cloud strategy. Choosing the right cloud computing provider is the key here.

Here are the top 10 questions to ask your cloud computing provider before hiring one.

1) Services Portfolio

Before moving to the cloud, organizations should identify their cloud computing needs and document the requirements. Once this document is ready, the first question to ask a cloud provider is about its service portfolio: which cloud services does it offer? If it doesn’t offer the services your company needs, there is no point in further negotiations. You can strike it off and move on to the other companies on your list.

2) Subscription Models

Another important question to ask your cloud service provider is how it charges for its services and how flexible its payment structure is. The cheapest service should not automatically be your first choice; while price is an important factor, weigh it against the services offered. Although most cloud services follow a pay-per-use model, charges differ based on instances, servers, users, groups, regions etc. Also check the billing period: monthly, quarterly, annually etc.

3) Cloud Security

For many organizations, data security is one of the main barriers to cloud adoption. So, review the security policies and cybersecurity measures the provider has implemented. Multi-factor authentication (MFA) is no longer optional, so check whether they offer it. In addition, intrusion detection, data encryption, incident prevention mechanisms, firewalls and visibility into network security are key requirements to consider.

4) Data Storage Location

The location of the datacenter can affect the performance and reliability of your applications. Choosing a datacenter closer to your business operations will give you an added advantage. As such, ask the cloud provider about where they store your data and what security policies they have in place.

Does the provider have a backup data center to handle natural and accidental disasters? Another reason to know the datacenter location is that companies must comply with the data regulations of their regions, so the data storage location matters for audit and compliance purposes too.

5) Service-Level Agreements (SLAs)

Before subscribing to a cloud service provider, it is important to define your expectations of their services. So, check how they measure service levels and how they compensate for outages. Going through their SLA will help you in this regard.
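
Most SLAs express compensation as service credits tied to measured monthly uptime. The sketch below shows the calculation with hypothetical credit tiers; real providers publish their own tiers and measurement rules, so always check the actual SLA.

```python
# Hypothetical tiers: (minimum monthly uptime %, credit as % of the monthly bill).
CREDIT_TIERS = [(99.99, 0), (99.0, 10), (95.0, 25)]
CREDIT_BELOW_LOWEST_TIER = 100


def monthly_uptime_percent(downtime_minutes: float, days_in_month: int = 30) -> float:
    """Measured uptime for the month as a percentage."""
    total_minutes = days_in_month * 24 * 60
    return 100 * (total_minutes - downtime_minutes) / total_minutes


def service_credit_percent(uptime_percent: float) -> int:
    """Credit owed for a month, taken from the first tier the uptime meets."""
    for minimum_uptime, credit in CREDIT_TIERS:
        if uptime_percent >= minimum_uptime:
            return credit
    return CREDIT_BELOW_LOWEST_TIER


uptime = monthly_uptime_percent(downtime_minutes=90)
print(f"uptime {uptime:.2f}% -> credit {service_credit_percent(uptime)}%")
```

For example, 90 minutes of downtime in a 30-day month works out to about 99.79% uptime, which falls in the 10% credit tier here.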

6) Flexibility in Services

One of the biggest advantages of cloud solutions is the flexibility they offer in adding or terminating services on demand. So, check with the provider whether you can instantly add or modify services and how easy it is to make changes. For instance, short-term projects require short-term resources on demand, which helps your team experiment with new ideas. In addition, check whether you can scale up and scale out resources without downtime. An autoscaling feature is a definite plus.
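
Many autoscalers use a target-tracking rule. The Kubernetes Horizontal Pod Autoscaler, for instance, sets the desired replica count to the current replica count multiplied by the ratio of the observed metric to its target, rounded up. The Python sketch below applies that rule with min/max limits; the parameter names and defaults are illustrative.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Target-tracking rule: scale in proportion to how far the observed
    metric is from its target, then clamp to the allowed range."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(4, current_metric=90, target_metric=60))
```

For example, 4 replicas running at 90% CPU against a 60% target scale to 6. The real HPA additionally ignores small deviations within a tolerance band and uses stabilization windows to avoid flapping.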

7) Customer Support 24/7

No matter how good a cloud company is, there will be times when you experience a service outage or other technical issues. In such instances, you need a support system that can resolve your issue quickly. So, check whether the provider offers customer support 24/7/365, as you need support on holidays and weekends as well. In addition, find out which support channels are available, such as phone, chatbot, email etc.

8) The History of Downtimes

While no cloud company can guarantee 100% uptime, the best cloud provider should be able to quickly resolve technical issues and minimize downtimes. So, check out the downtime history of the company and what steps they have taken to get things back on track. You can also check out these details on their website and review sites to assess the availability of their services. You don’t want to join hands with a company that has frequent outages.

9) Data Control

While using a cloud service, the cloud provider takes care of the infrastructure while you focus on your business operations. However, it is important to know how the data is handled and what control you have over it. Would you be able to retrieve all your data without the provider’s assistance if you want to switch providers or terminate the services? In addition, it is important to know how long they will store the data after the service agreement ends. The data formats available for export are another aspect to check.

10) Does the company make timely backups?

Data backup and recovery is key to safeguarding your business information. So, check whether the company performs timely backups so that you can restore a recent backup when data is lost or erased. In addition, review their disaster recovery plan. Do they have measures in place to recover data instantly or to prevent a disaster from happening? A cloud computing company without a DR plan cannot be trusted.
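
A useful way to judge a provider’s backup schedule is against your recovery point objective (RPO), the maximum amount of data, measured in time, that you can afford to lose. This hypothetical Python sketch checks whether the newest backup is recent enough; the function name and timestamps are illustrative.

```python
from datetime import datetime, timedelta


def meets_rpo(backup_times: list, now: datetime, rpo: timedelta) -> bool:
    """True if restoring the newest backup would lose at most `rpo` of data."""
    if not backup_times:
        return False  # nothing to restore from
    newest = max(backup_times)
    return now - newest <= rpo


now = datetime(2022, 1, 10, 12, 0)
backups = [now - timedelta(hours=30), now - timedelta(hours=6)]
print(meets_rpo(backups, now, rpo=timedelta(hours=24)))
print(meets_rpo(backups, now, rpo=timedelta(hours=4)))
```

With daily backups, for instance, the RPO can never be tighter than 24 hours, so ask the provider how often backups actually run.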

The cloud market is flooded with multiple cloud service providers. So, it is important to eliminate companies that are inefficient, incompatible and unreliable. In addition to asking the above questions, you need to check out the reputation of the company, their references, feedback on review sites and social media platforms etc. Taking time for these tasks will save your business from incurring huge losses in the long run.

DevOps for Business Intelligence

DevOps started off as a methodology that brings developers and operations teams together to work in tandem on software development projects. It facilitates seamless coordination and communication between teams, reduces the time from idea to market and significantly improves operational efficiency while optimizing costs. Today, DevOps has rapidly evolved to include several other areas of IT. A new addition is business intelligence (BI).

DevOps gelled well with Big Data, as the two approaches are contemporary and complement each other: massive volumes of live data move between development and production and are kept relevant through seamless coordination between teams. When it comes to business intelligence, data warehousing and analytics are the two important components to manage. Because BI deals with batches of data, it doesn’t integrate easily with a DevOps environment by default.

Managing Data Warehousing with DevOps

A data warehouse is a central data repository that collects data from disparate sources inside and outside the organization and makes it available to authorized people and to reporting and analytics tools from any location. Managing a robust, sophisticated data warehouse is a challenge because multiple stakeholders are involved in every change, which makes deployments slow and time-consuming. DevOps can be transformative here, as data administration and data engineering teams collaborate on data projects. While a data engineer informs the team about new features being introduced to the system, the data administrator can anticipate production challenges and make changes accordingly. With cross-functional teams and automated testing in place, production issues can be significantly reduced. Together, they can build a powerful automation pipeline that covers data source analysis, testing, documentation, deployment etc.
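
The automated testing mentioned above often takes the form of data-quality checks that run before a batch is loaded into the warehouse. The sketch below is a hypothetical example with made-up column names and a made-up `check_batch` function.

```python
def check_batch(rows: list, required_columns: list, key_column: str,
                min_rows: int = 1) -> list:
    """Return data-quality failures for a batch bound for the warehouse.

    An empty list means the pipeline may proceed to the load step.
    """
    failures = []
    if len(rows) < min_rows:
        failures.append(f"expected at least {min_rows} row(s), got {len(rows)}")
    seen_keys = set()
    for i, row in enumerate(rows):
        for column in required_columns:
            if row.get(column) is None:
                failures.append(f"row {i}: missing {column}")
        key = row.get(key_column)
        if key in seen_keys:
            failures.append(f"row {i}: duplicate {key_column} {key}")
        seen_keys.add(key)
    return failures


batch = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 2, "amount": None},
    {"order_id": 1, "amount": 5.0},
]
for failure in check_batch(batch, ["order_id", "amount"], key_column="order_id"):
    print(failure)
```

Failing fast on a bad batch keeps broken data out of the shared warehouse, which matters more than usual because, as noted below, reverting a warehouse is rarely as simple as restoring a backup.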

However, introducing DevOps into data warehouse management is not a cakewalk. For instance, you cannot simply back up data and revert to the backup whenever required. If you revert to last week’s backup, what happens to the changes that several applications have made to the data since then?

DevOps for Analytics

The analytics industry is going through a transformation as well. Unlike the traditional analytics environment, which uses a single business intelligence solution for all IT needs, modern businesses use multiple BI tools for different analytical purposes. The complexity is that these BI tools share data with each other, yet there is no central management of them. Another issue is that data scientists design models and algorithms for specific data sets to gain deeper insights and make predictions. However, once deployed to production, these models serve only a temporary purpose: as the underlying data changes, they become less relevant, which means continuous monitoring and improvement are required. Data drift happens quickly, and traditional analytics solutions cannot keep up with its speed and diversity. This is where DevOps comes to the rescue.
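
Data drift can be detected automatically by comparing the distribution of incoming data with the data a model was trained on. One common measure, used here as an example, is the Population Stability Index (PSI): the sum over bins of (actual share - expected share) × ln(actual share / expected share). The thresholds in the docstring are widely used rules of thumb, not fixed standards, and the bin counts are made up.

```python
import math


def psi(expected_counts: list, actual_counts: list, eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline and a recent sample.

    Both lists hold counts per bin, using the same bin edges. `eps` keeps
    empty bins from causing log(0) or division by zero.
    Rule of thumb: < 0.1 little drift, 0.1-0.25 moderate, > 0.25 significant.
    """
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    score = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_share = max(e / expected_total, eps)
        a_share = max(a / actual_total, eps)
        score += (a_share - e_share) * math.log(a_share / e_share)
    return score


training = [25, 25, 25, 25]     # records per bin at training time
production = [10, 20, 30, 40]   # same bins, recent production data
print(f"PSI = {psi(training, production):.3f}")
```

In a pipeline, a PSI above the chosen threshold would trigger an alert or a retraining job rather than silently serving stale predictions.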

DevOps helps businesses integrate data flow design and operations so that data can be automated and monitored, enabling them to deliver better applications faster. Automation lets organizations build high-performing, reliable and iterative build-deploy data pipelines that improve data quality, accelerate delivery and reduce labor and operational costs. Monitoring data for health, speed and consumption-ready status enables organizations to reduce blind spots and eliminate performance issues. The result is a reliable feedback loop covering data health, privacy and data delivery. This loop keeps operations running smoothly through planned as well as unexpected changes.
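
A data health check of this kind can be as simple as measuring volume, completeness and freshness for each batch before it is published. The sketch below is illustrative only. The column names, the 1% null-rate limit and the single `consumption_ready` flag are hypothetical choices, not part of any particular tool.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, List, Optional


def health_report(rows: List[Dict[str, Any]], required: List[str], loaded_at: datetime,
                  max_age: timedelta, max_null_rate: float = 0.01,
                  now: Optional[datetime] = None) -> Dict[str, Any]:
    """Summarize volume, completeness and freshness of a freshly loaded batch."""
    now = now or datetime.now(timezone.utc)
    null_rates = {
        col: (sum(1 for r in rows if r.get(col) is None) / len(rows)) if rows else 1.0
        for col in required
    }
    fresh = now - loaded_at <= max_age
    complete = all(rate <= max_null_rate for rate in null_rates.values())
    return {
        "row_count": len(rows),
        "null_rates": null_rates,
        "fresh": fresh,
        # Only batches that are non-empty, fresh and complete are flagged for consumers.
        "consumption_ready": bool(rows) and fresh and complete,
    }


now = datetime(2022, 1, 10, 12, 0, tzinfo=timezone.utc)
batch = [{"order_id": 1, "amount": 10.0}, {"order_id": 2, "amount": None}]
report = health_report(batch, ["order_id", "amount"], loaded_at=now - timedelta(hours=1),
                       max_age=timedelta(hours=2), now=now)
print(report["null_rates"], report["consumption_ready"])
```

Feeding these reports back to the team is one concrete form of the feedback loop described above.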

The Bottom Line

Bringing DevOps into the BI realm is not an easy task, as BI environments are not designed with DevOps in mind. However, businesses are now exploring this option. DevOps gives businesses situational awareness, because they can make informed decisions once they gain insights into relevant data from multiple sources. Moreover, it fosters collaboration between teams and enables better integration between application layers, helping businesses explore and quickly tap into new markets. Most importantly, it helps future-proof your business.

Top 5 Advantages of using Docker

As businesses aggressively move workloads to cloud environments, containerization has become a necessity for almost every business.

Containerization enables organizations to virtualize the operating system and deploy applications in isolated spaces called containers packed with all libraries, dependencies, configuration files etc.

The container market is rapidly evolving. According to MarketsandMarkets, the global application containerization market generated revenue of $1.2 billion in 2018 and is expected to reach $4.98 billion by 2023, growing at a CAGR of 32.9% between 2018 and 2023.
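
The growth figures quoted above follow the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The small sketch below checks them. Values are in billions of USD, and 2018 to 2023 is counted as five years.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding start_value at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


rate = cagr(1.2, 4.98, 5)
print(f"Implied CAGR 2018-2023: {rate:.1%}")  # prints 32.9%
```

Compounding $1.2 billion at that rate for five years with `project` brings you back to $4.98 billion, which confirms the quoted figures are consistent.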

The Dominance of Docker

The containerization market is dominated by Docker. In fact, it was Docker that made the containerization concept popular. According to Docker, its platform hosts more than 7 million applications, serves more than 13 billion image downloads a month and is used by more than 11 million developers. Adobe, Netflix, PayPal, Splunk and Verizon are some of the enterprises that use Docker.

Virtual Machine Vs Docker

Here are the top 5 benefits of using Docker:

1) Consistent Environment

Consistency is a key benefit of Docker. Developers run an application in the same environment from design and development through production and maintenance. As such, the application behaves the same way in every environment, which reduces production issues. With predictable environments in place, your developers spend more time adding quality features to the application instead of debugging errors and resolving configuration or compatibility issues.

2) Speed and Agility

Speed and agility are other key benefits of Docker. Docker lets you create a container for each process and deploy it in seconds. Because containers share the host’s kernel instead of booting their own operating system, they start very quickly. Moreover, you can create, destroy, stop or start a container with ease. By defining your services in a YAML configuration file (for example, with Docker Compose), you can automate deployment and scale the infrastructure easily.

Docker increases the speed and efficiency of your CI/CD pipeline, as you can build a container image once, use it across the pipeline and run independent tasks in parallel. This shortens time to market and increases productivity. The ability to commit changes and version Docker images enables you to quickly roll back to an earlier version if a new change breaks the environment.
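
Running independent tasks in parallel can be sketched with Python’s standard library (3.9+). `graphlib.TopologicalSorter` releases a task only once its dependencies are done, and a thread pool runs every ready task at the same time. The stage names are hypothetical. Each Python function stands in for a step that a real pipeline would run inside a container built from the shared image.

```python
from concurrent.futures import FIRST_COMPLETED, Future, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_pipeline(deps: Dict[str, Set[str]], tasks: Dict[str, Callable[[], None]],
                 max_workers: int = 4) -> List[str]:
    """Run each task once its dependencies finish; independent tasks run concurrently.

    Returns task names in completion order. A failing task raises and stops the run.
    """
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises CycleError if the dependencies form a loop
    finished: List[str] = []
    running: Dict[Future, str] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while sorter.is_active():
            for name in sorter.get_ready():
                running[pool.submit(tasks[name])] = name
            done, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in done:
                name = running.pop(future)
                future.result()  # re-raise the task's exception, if any
                sorter.done(name)
                finished.append(name)
    return finished


# Hypothetical pipeline: lint, tests and a scan all need the image but not each other.
DEPS = {
    "build_image": set(),
    "lint": {"build_image"},
    "unit_tests": {"build_image"},
    "security_scan": {"build_image"},
    "deploy": {"lint", "unit_tests", "security_scan"},
}
TASKS = {name: (lambda n=name: print(f"running {n}")) for name in DEPS}

print(run_pipeline(DEPS, TASKS))
```

Here `lint`, `unit_tests` and `security_scan` can overlap, while `deploy` waits for all three.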

3) Efficient Management of Multi-Cloud Environments

Multi-cloud environments are gaining popularity. In a multi-cloud environment, each cloud comes with its own configurations, policies and processes, and each is managed with different infrastructure management tools. Docker containers, however, can be moved across any environment that runs a compatible container runtime. For instance, you can run a container on an AWS EC2 instance and then move it to a Google Cloud Platform environment with ease. Keep in mind, however, that data written inside a container is lost once the container is removed. Store the data you need in Docker volumes or external storage, or back it up.

4) Security

Docker environments provide strong isolation, although containers share the host kernel and still need to be hardened. Applications running in Docker containers are isolated from each other, so one container cannot see the processes running in another container. Similarly, resource limits can be set for each container so that it uses only the resources allocated to it and does not starve other containers. You can also control which containers communicate with each other through Docker networks, giving you more control over traffic flow. When the application reaches the end of its life, you can simply delete its container for a clean removal.

5) Optimized Costs

While features and performance are key considerations for any IT product, return on investment (ROI) cannot be ignored. The good thing about Docker is that it can significantly reduce infrastructure costs. Because containers are lighter than VMs, you can run more applications on the same servers and manage them with fewer people. With smaller engineering teams and reduced infrastructure costs, you can save significantly on operational costs and increase your ROI.

Top 10 Benefits of AWS in 2021

Technology changes rapidly every year, and 2021 is no different. However, one thing that remains constant is the position of AWS in the public cloud infrastructure segment. AWS has led this segment since its launch.

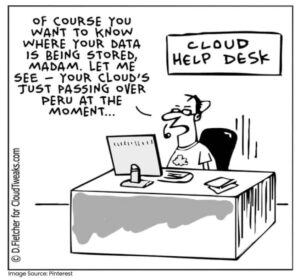

According to Statista, the cloud infrastructure services market earned revenues of $39 billion in Q1 2021, a 37% increase over Q1 2020. AWS accounted for a 32% share of that market. Azure and Google Cloud Platform recorded market shares of 20% and 9% respectively.

Here are the top 10 benefits offered by AWS in 2021:

1) Access to a World-class Technology Stack

Not every business can afford a world-class technology stack, owing to budget constraints and the lack of expert staff. Thanks to the AWS cloud, even small and medium businesses now have access to cutting-edge technologies. AWS puts all players on the same platform and creates equal opportunities for everyone, so small and medium businesses can now compete with large enterprises.

2) Always Innovating

Innovation is a key component of AWS offerings. The AWS team is committed to continually driving innovation into its cloud infrastructure offering, which is one of the main reasons top brands use AWS. Though Azure and GCP can compete with AWS on pricing, innovation is what keeps AWS a step ahead of its competitors. As an AWS customer, you gain access to cutting-edge technologies at cost-effective prices.

3) Always Economical

While AWS offers cutting-edge technologies, it maintains an affordable pricing structure. You pay only for the resources you consume, with no upfront commitments or long-term contracts, so costs are predictable and economical. You can visit the AWS Economics Center to learn how organizations are optimizing resources and saving costs. According to a Cloud Value Benchmarking study, businesses on average saw a 27.4% reduction in spend per user, a 57.9% increase in VMs managed per user, a 37.1% decrease in time to market for new features and a 56.7% decrease in downtime. All of these add up to your savings. AWS also offers a pricing calculator to help you estimate your cloud expenses.
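
The pay-per-use argument is easy to check with simple arithmetic. When a workload’s demand varies over the day, paying per instance-hour used can cost much less than provisioning for the peak around the clock. The demand curve and the $0.10 hourly rate below are made up. Real AWS pricing has more dimensions, such as instance types, billing granularity and reserved capacity.

```python
from typing import Sequence


def pay_per_use_cost(hourly_demand: Sequence[int], rate: float) -> float:
    """Pay only for the instance-hours actually used."""
    return sum(hourly_demand) * rate


def fixed_capacity_cost(hourly_demand: Sequence[int], rate: float) -> float:
    """Provision for the peak around the clock and pay for it whether used or not."""
    return max(hourly_demand) * len(hourly_demand) * rate


# Hypothetical 24-hour demand (instances needed per hour) and a made-up hourly rate in USD.
demand = [2] * 8 + [6] * 4 + [10] * 4 + [6] * 4 + [2] * 4
rate = 0.10

print(f"pay-per-use: ${pay_per_use_cost(demand, rate):.2f}")          # $11.20
print(f"peak-provisioned: ${fixed_capacity_cost(demand, rate):.2f}")  # $24.00
```

The gap grows with how spiky the workload is. For a flat, always-on workload, the two approaches cost about the same.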

4) Highly Flexible

One of the biggest advantages of AWS is its flexibility which allows you to customize your technology stack. Be it a programming language, operating system, database or web application platform, you can pick and choose your stack and easily load them into the virtual environment offered by AWS. Similarly, you can choose an out-of-box platform or customize and configure the entire stack from scratch.

5) Easy to Use

AWS solutions are designed with ease of use in mind. Whether you are a novice user or a technology expert, AWS makes it easy to move your applications to the cloud. You can use the AWS Management Console, a web-based interface, to access AWS services, or you can use the web service APIs instead. AWS offers extensive documentation on these APIs, making your job easier and faster.

6) Security at its Best

Security and control over the datacenter were long two important barriers to cloud adoption. AWS, however, takes security seriously. AWS security is based on a shared responsibility model. AWS is responsible for the security of the cloud infrastructure, while the customer is responsible for security in the cloud, such as their data, applications and access settings. Data can be distributed across multiple datacenters, making it resilient, faster to access and quick to recover after a disaster. All datacenters are protected end to end. AWS provides firewall capabilities to protect your resources and supports encryption for data as it moves between endpoints. Its Identity and Access Management (IAM) service lets you grant users role-based access, and multi-factor authentication is available too.
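
The core of IAM’s policy evaluation can be illustrated with a toy evaluator. A request is denied by default, a matching Allow permits it, and a matching explicit Deny overrides any Allow. This is a simplified sketch, not the AWS implementation. It ignores conditions, principals, permission boundaries, resource-based policies and case-insensitive action matching. The bucket and statements are hypothetical.

```python
from fnmatch import fnmatchcase
from typing import Dict, List

Statement = Dict[str, str]

# Hypothetical statements, loosely modelled on the Effect/Action/Resource fields of IAM policies.
POLICY: List[Statement] = [
    {"Effect": "Allow", "Action": "s3:GetObject", "Resource": "arn:aws:s3:::reports-bucket/*"},
    {"Effect": "Allow", "Action": "s3:PutObject", "Resource": "arn:aws:s3:::reports-bucket/*"},
    {"Effect": "Deny", "Action": "s3:PutObject", "Resource": "arn:aws:s3:::reports-bucket/finance/*"},
]


def matches(statement: Statement, action: str, resource: str) -> bool:
    """Wildcard match on action and resource (fnmatch's '*' stands in for IAM's '*')."""
    return (fnmatchcase(action, statement["Action"])
            and fnmatchcase(resource, statement["Resource"]))


def is_allowed(policy: List[Statement], action: str, resource: str) -> bool:
    """Simplified rule: an explicit Deny wins, otherwise an explicit Allow, otherwise deny."""
    relevant = [s for s in policy if matches(s, action, resource)]
    if any(s["Effect"] == "Deny" for s in relevant):
        return False
    return any(s["Effect"] == "Allow" for s in relevant)


print(is_allowed(POLICY, "s3:PutObject", "arn:aws:s3:::reports-bucket/finance/q1.csv"))  # False
```

Because the default is deny, a user gets only the access you explicitly grant, which is the basis of least-privilege role design.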

7) Scale at your Pace

Thanks to the massive infrastructure and the pay-per-use model, you can start small and scale at your own pace. AWS offers Elastic Load Balancing, which distributes incoming traffic across resources, and Auto Scaling, which automatically adds or removes resources as traffic rises and falls. Horizontal scaling can be automated out of the box. To automate vertical scaling, you need to configure a solution such as AWS Ops Automator V2.
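
Automatic horizontal scaling boils down to recomputing the desired number of instances from a metric. The sketch below uses a simple proportional rule, the same formula the Kubernetes Horizontal Pod Autoscaler documents. AWS target tracking policies pursue a similar goal, but this rule is an assumption for illustration, not AWS’s internal algorithm. The group size, limits and CPU figures are hypothetical.

```python
import math


def desired_capacity(current: int, metric: float, target: float,
                     min_size: int = 1, max_size: int = 20) -> int:
    """Proportional rule: ceil(current * metric / target), clamped to the group limits."""
    if current < 1 or target <= 0:
        raise ValueError("need at least one instance and a positive target")
    wanted = math.ceil(current * metric / target)
    return max(min_size, min(max_size, wanted))


# Hypothetical group of 4 instances targeting 50% average CPU.
print(desired_capacity(4, metric=90.0, target=50.0))  # 8: scale out during a surge
print(desired_capacity(4, metric=20.0, target=50.0))  # 2: scale in when traffic drops
```

The minimum and maximum sizes play the same role as the limits you set on a scaling group: they keep a traffic spike from running up an unbounded bill.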

8) Comprehensive Cloud Solutions

With AWS, you don’t have to look elsewhere. AWS is a one-stop solution for all your cloud infrastructure needs, offering a wide range of tools and services. With customers in 190 countries, AWS lets you scale globally. In addition to its massive infrastructure, AWS has a wide partner network that provides tools for every cloud need. These range from migrating to and developing in the cloud to optimizing cloud operations and managing workloads.

9) Extensive Support

While AWS solutions are easy to use, the company also offers extensive documentation and support to walk you through installing and configuring tools and services. The AWS website contains documentation, user guides, videos, forums and blogs to help you get started. You can take advantage of the vibrant community as well.

10) The Brand Matters

In addition to all of the above, brand value matters too. AWS is the leader in the cloud infrastructure segment and delivers cutting-edge solutions. When you subscribe to AWS solutions, your business operations are powered by world-class technologies. This boosts your operational efficiency and increases trust among customers.

How DevOps Helps Your Company Grow

When DevOps arrived on the technology scene, industry experts predicted that it would revolutionize the IT world. However, businesses were slow to embrace this methodology, largely because many of them did not understand what DevOps is actually about. As it is not a tool or a technology, people derived their own definitions, processes and methods. DevOps is a methodology that integrates development and operations teams to work as a single entity throughout the product lifecycle to deliver quality software faster. According to Research Dive, the global DevOps market was valued at $4.46 billion in 2020. This value is expected to reach $23.36 billion by 2027, growing at a CAGR of 22.9% during 2020-2027.

Here is how DevOps helps your company to grow:

Redefining Organizational Culture

While DevOps started off as a combination of development and operations teams, it has evolved to include everyone involved in the product lifecycle. DevOps brings in cross-functional teams of people from design, development, QA, operations, security and other functions. It facilitates seamless collaboration and trust between teams, breaking down silos. With aligned priorities and shared goals, every team member gains clear visibility into the progress of the project, resulting in a quality product delivered on time. It also gives you better control over tools, processes and projects.

Redefining Technical Processes

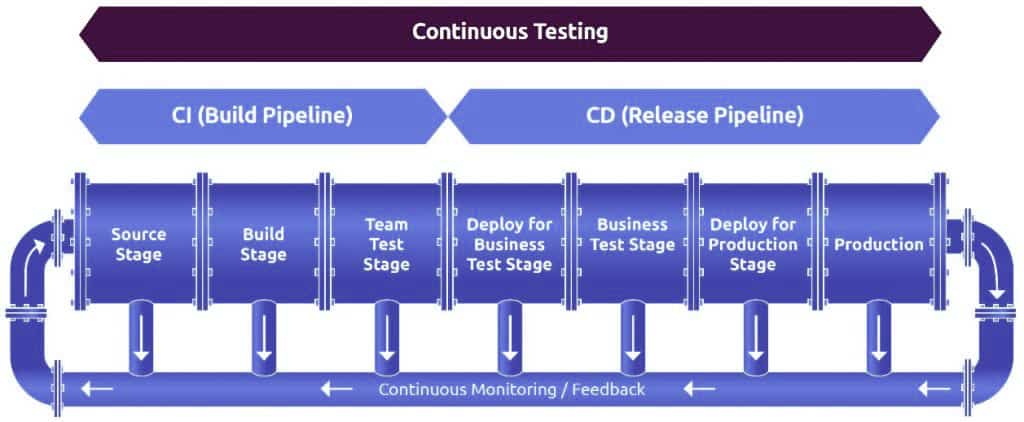

Faster time to market is key to staying competitive in today’s fast-paced world. However, speed shouldn’t come at the cost of quality. DevOps allows you to deliver faster without compromising on quality. Continuous Integration / Continuous Delivery (CI/CD) is a notable DevOps practice.

In a traditional waterfall software development model, developers write the code first which is then sent to the testing team. If there are errors, the code is returned to developers for corrections. When the code successfully passes the test, it is sent to the staging environment and then deployed to production. To deliver an update, the product has to go through the entire process again.

With the advent of microservices and agile methodologies, developers started building software as modular, independent services in smaller, incremental cycles. DevOps helps businesses manage microservices, SOA and agile environments better. It integrates disparate systems to work as a cohesive unit and allows you to build a CI/CD pipeline that automates the entire process.

In this CI/CD pipeline, developers write code and commit it to a version control system that acts as a central repository. When a change is detected, the CI server automatically runs the build. Builds that pass are moved to an artifact or image repository. An automated deployment tool picks up the artifacts from there and deploys them to production. A continuous monitoring tool provides feedback that gives you clear insight into the performance of the product. Using value stream mapping, you can quickly identify bottlenecks and optimize every process, and response times get quicker too. With continuous integration, continuous testing, continuous deployment and continuous feedback, DevOps enables you to deliver quality software quickly.
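
The commit, build, test, publish and deploy flow described above can be modelled as an ordered list of stages that stops at the first failure. This is a toy sketch with hypothetical stage functions and commit IDs. CI servers such as Jenkins or GitLab express the same idea in their own pipeline configuration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Step = Callable[[str], bool]  # receives a commit ID, returns True if the stage passed


@dataclass
class Pipeline:
    """Runs stages in order and stops at the first failure (fail fast)."""
    stages: List[Tuple[str, Step]] = field(default_factory=list)
    log: List[str] = field(default_factory=list)

    def add(self, name: str, step: Step) -> "Pipeline":
        self.stages.append((name, step))
        return self

    def run(self, commit: str) -> bool:
        for name, step in self.stages:
            passed = step(commit)
            self.log.append(f"{commit} {name}: {'passed' if passed else 'failed'}")
            if not passed:
                return False  # later stages, including deploy, never run
        return True


def build_pipeline(registry: List[str], production: List[str]) -> Pipeline:
    """Hypothetical stages standing in for the CI server, repository and deploy tool."""
    def publish(commit: str) -> bool:
        registry.append(f"app:{commit}")  # push the artifact to the repository
        return True

    def deploy(commit: str) -> bool:
        production.append(f"app:{commit}")  # deployment tool picks the artifact up
        return True

    return (Pipeline()
            .add("build", lambda commit: True)
            .add("test", lambda commit: not commit.endswith("-broken"))
            .add("publish", publish)
            .add("deploy", deploy))


registry: List[str] = []
production: List[str] = []
pipeline = build_pipeline(registry, production)
pipeline.run("a1b2c3")
pipeline.run("d4e5f6-broken")
print(production)  # only the commit that passed its tests is deployed
```

The `log` list plays the part of the feedback loop: every run leaves a record of where a change succeeded or failed.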

Jenkins, GitLab, CircleCI, TeamCity and Bamboo are some of the best CI tools to help you automate and orchestrate the entire software development lifecycle.

Redefining Business Processes

DevOps brings a cultural shift across the organization. Developers now understand the challenges faced by the operations team and write code accordingly. Similarly, the operations team learns early on how the code is being developed and how it performs in production. As each member is responsible for the overall quality of the product, every team cares about the efficient execution of other teams’ tasks, and members motivate and encourage one another wherever possible. With a cross-functional team working together, employees are also cross-trained and up-skilled. This not only brings more value to the organization but also delivers more value to your customers.

Innovation is key to staying ahead of the competition. DevOps gives developers extra time to experiment and to create new products or tweak existing ones. With automated testing and automated security checks built into the pipeline, the risk of breaking something is much lower. Without disrupting the project, developers can validate the feasibility of ideas and introduce innovation into business processes. It also helps them better understand customer requirements and user experience so they can meet or exceed expectations. Enhanced customer satisfaction helps you retain your customers and gain new referrals too.

The Bottom Line

DevOps benefits are spread across the business, technical and cultural aspects of an organization. A good DevOps strategy helps an organization grow sustainably in every area of the business. However, streamlining an end-to-end delivery pipeline is a challenge. Once the right DevOps strategy is designed, you can fully leverage all these benefits.

The key here is choosing the right DevOps partner!

Cloud Development – All You Need to Know

In today’s cloud era, almost any IT resource can be hosted in the cloud and delivered over the Internet via a pay-per-use subscription model. The benefits of the cloud inspired the software industry to embrace cloud development, and the recent pandemic has pushed everyone toward the digital world. As work-from-home arrangements are here to stay, cloud development is now more important than ever.

What is Cloud Development?

Cloud development is about developing software applications using computing infrastructure that is hosted in the cloud. Instead of building and managing your servers and physical hardware, you can access technology services hosted in the cloud via a pay-per-use model.

From servers, data storage and network resources to operating systems, middleware and runtime environments, everything can be accessed over the Internet.

In addition, you gain access to ready-made cloud solutions for monitoring, analytics etc. Amazon is a leading provider of public cloud solutions while VMware tops the private cloud services segment.

Cloud-based apps are highly scalable and flexible. They can be accessed from any device and any location, with the client talking to the backend through APIs. The app data is stored in the cloud and cached on the user’s device, enabling the user to work offline. When the app goes back online, the cached data is synchronized. Google Docs, Evernote, Canva and Dropbox are a few popular examples of cloud-based apps.
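
The offline behavior described above is often implemented as an offline-first client. Edits go to a local cache immediately, are queued while the device is offline and are pushed to the cloud when it reconnects. The sketch below is a simplified illustration with a hypothetical in-memory backend. Production apps also need conflict resolution, which is deliberately left out here.

```python
from typing import Dict, List, Tuple


class FakeCloudStore:
    """In-memory stand-in for a hypothetical cloud backend reached through an API."""

    def __init__(self) -> None:
        self.data: Dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        self.data[key] = value


class OfflineFirstClient:
    """Keeps a local cache, queues edits while offline and syncs them on reconnect."""

    def __init__(self, remote: FakeCloudStore) -> None:
        self.remote = remote
        self.cache: Dict[str, str] = dict(remote.data)  # local copy of the cloud data
        self.pending: List[Tuple[str, str]] = []
        self.online = True

    def save(self, key: str, value: str) -> None:
        self.cache[key] = value  # the user always sees their edit immediately
        if self.online:
            self.remote.put(key, value)
        else:
            self.pending.append((key, value))

    def go_offline(self) -> None:
        self.online = False

    def reconnect(self) -> None:
        self.online = True
        # Replay queued edits in order; conflict resolution is deliberately ignored here.
        for key, value in self.pending:
            self.remote.put(key, value)
        self.pending.clear()


remote = FakeCloudStore()
client = OfflineFirstClient(remote)
client.save("doc1", "draft v1")
client.go_offline()
client.save("doc1", "draft v2")  # only the local cache changes
client.reconnect()               # the queued edit is pushed to the cloud
print(remote.data["doc1"])       # draft v2
```

Replaying edits in order gives "last write wins" behavior. That is adequate for a single user but not for several people editing the same document.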

Why is Cloud Development Popular?

Cloud development offers many benefits to organizations. Firstly, it eliminates the need to invest heavily in on-premises infrastructure and licensing. With a pay-per-use model, you pay only for the services you use, and you gain access to cutting-edge technologies at cost-effective prices. Secondly, cloud development facilitates seamless collaboration between teams in different geographic locations. Thirdly, cloud development offers high scalability, flexibility, virtually unlimited storage, faster time to market and automatic recovery options. In addition, the provider handles updates and maintenance of the underlying platform, which means you can focus on building quality software products.

Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform are the leading providers of cloud development solutions.

Web Apps are not Cloud Apps

Cloud development is often confused with web development. It is important to understand that all cloud apps are web apps, but not all web apps are cloud apps. To be precise, cloud-based apps are an advanced version of web-based apps. Web-based apps depend on browsers, offer limited scalability and customization, store their data on a single data server and can’t be used offline. Cloud apps, on the other hand, are highly scalable and customizable. They store data across multiple data centers using replication techniques, and they can work offline as well.

Cloud Development Challenges

While cloud development seems a great option, it comes with certain challenges. It demands seamless collaboration between different teams such as developers, designers, QA managers, data analysts and DevOps engineers. A project may involve different cloud platforms such as AWS, Azure and GCP. People working on cloud apps should therefore have good knowledge of these platforms and be able to integrate apps with different services using APIs.

Secondly, cloud development brings security concerns. Security should therefore be built into the CI/CD pipeline so that it becomes part of the automation. It is also recommended to keep application data separate from the code that defines the application’s architecture. Thirdly, cloud development offers higher scalability, but scaling that is not optimized will overrun your cloud budget. It is therefore important to have clear network visibility to gain better control over cloud infrastructure.
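
One way to build security into the pipeline is a step that blocks commits containing obvious secrets, which also helps keep credentials out of application code. The sketch below is a minimal example with limited scope. Its three rules are illustrative and far from complete, and in practice a dedicated secret scanner would normally be used instead. The `AKIA` rule reflects the documented format of long-term AWS access key IDs.

```python
import re
from typing import Dict, List

# Illustrative rules only; dedicated secret scanners ship far larger rule sets.
RULES: Dict[str, re.Pattern] = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private key block": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
    "hard-coded password": re.compile(r"(?i)password\s*=\s*['\"][^'\"]+['\"]"),
}


def scan(text: str) -> List[str]:
    """Return one finding per matching rule per line, e.g. 'line 3: AWS access key ID'."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for label, pattern in RULES.items():
            if pattern.search(line):
                findings.append(f"line {lineno}: {label}")
    return findings


def gate(files: Dict[str, str]) -> bool:
    """CI gate: True only if no file contains a suspected secret."""
    problems = [f"{path} {finding}" for path, text in files.items() for finding in scan(text)]
    for problem in problems:
        print("suspected secret:", problem)
    return not problems


# AKIAIOSFODNN7EXAMPLE is the placeholder key ID used in AWS documentation.
sample = {"app/config.py": 'REGION = "us-east-1"\nACCESS_KEY = "AKIAIOSFODNN7EXAMPLE"\n'}
print(gate(sample))  # False: the commit should be blocked
```

In a pipeline, a `False` result would fail the job so the change never reaches the deployment stage.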

Conclusion

Mobility solutions, disaster recovery, flexibility and reduced workloads are some of the key drivers of cloud adoption. However, cloud development requires expert knowledge of cloud technologies. When implemented correctly, cloud development improves your operational efficiency and optimizes costs while improving user experience.