The Impact of Generative AI on Real Estate Document Processing

How AI Is Automating Real Estate Paperwork

AI is transforming the real estate industry by automating paperwork handling through intelligent document processing (IDP). This technology utilizes AI, machine learning, and natural language processing to streamline the management of documents such as contracts, lease agreements, and property records. By automating these tasks, IDP enhances efficiency and accuracy, significantly reducing the manual effort required for document management.

The IDP process begins with document ingestion, where files are captured from various sources like emails, scanners, or cloud storage and converted into digital formats if necessary. Preprocessing follows, which includes noise reduction, image correction, and text extraction to prepare the documents for accurate data extraction. During the data extraction phase, AI algorithms analyze the content to identify and pull out specific information such as names, dates, and contract terms, using natural language processing to understand the text’s context.

Once the data is extracted, it undergoes validation to ensure accuracy by cross-referencing with existing databases or predefined rules. The validated data is then classified into relevant categories, such as lease agreements or property sale contracts. Finally, the classified data is integrated into systems or databases, such as property management or CRM platforms. A review stage, involving human oversight, ensures data accuracy and provides feedback to refine AI models, resulting in a more efficient and reliable document processing system for real estate professionals.
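The stages described above (preprocessing, extraction, validation, classification, and a human review flag) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production IDP system: the keyword table, the ISO-style date pattern, and every function name here are hypothetical, and simple rules stand in for the machine learning models a real pipeline would use.

```python
import re
from dataclasses import dataclass, field

# Hypothetical keyword rules; a real IDP system would use a trained classifier.
CATEGORY_KEYWORDS = {
    "lease_agreement": ("lease", "tenant", "landlord"),
    "sale_contract": ("purchase price", "buyer", "seller"),
}

# Assumes dates are written as YYYY-MM-DD; real documents vary widely.
DATE_PATTERN = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")


@dataclass
class ProcessedDocument:
    category: str
    dates: list
    issues: list = field(default_factory=list)

    @property
    def needs_review(self) -> bool:
        # Any validation issue routes the document to a human reviewer.
        return bool(self.issues)


def preprocess(raw_text: str) -> str:
    """Collapse whitespace; a stand-in for noise reduction and OCR cleanup."""
    return " ".join(raw_text.split())


def extract_dates(text: str) -> list:
    """Pull out every substring that looks like a YYYY-MM-DD date."""
    return DATE_PATTERN.findall(text)


def validate(dates: list) -> list:
    """Apply predefined rules and return a list of human-readable issues."""
    issues = []
    if not dates:
        issues.append("no date found")
    for date in dates:
        _, month, day = (int(part) for part in date.split("-"))
        if not (1 <= month <= 12 and 1 <= day <= 31):
            issues.append(f"implausible date: {date}")
    return issues


def classify(text: str) -> str:
    """Pick the category whose keywords appear most often in the text."""
    lowered = text.lower()
    scores = {
        category: sum(keyword in lowered for keyword in keywords)
        for category, keywords in CATEGORY_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unclassified"


def process(raw_text: str) -> ProcessedDocument:
    text = preprocess(raw_text)
    dates = extract_dates(text)
    return ProcessedDocument(category=classify(text), dates=dates, issues=validate(dates))
```

Calling `process` on a lease excerpt returns a `ProcessedDocument` whose `needs_review` flag is set only when a validation rule fails, which mirrors the human oversight step: clean documents flow straight into downstream systems, and questionable ones are queued for a person.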

AI’s Role in Streamlining Real Estate Transactions

AI is transforming the real estate industry by boosting efficiency and lowering costs. By automating crucial tasks like document management, contract review, and compliance checks, AI reduces the need for manual effort and minimizes errors. This acceleration in processing speeds up transactions, allowing both real estate professionals and clients to finalize deals more swiftly and accurately. With AI handling these routine tasks, agents can concentrate on building client relationships and strategic planning, ensuring a more seamless transaction experience.

The financial benefits of AI in real estate are significant. Automation cuts down on the need for extensive human resources, which can otherwise lead to higher operational costs. By streamlining workflows and speeding up decision-making, AI enables real estate firms to manage transactions more efficiently. This results in substantial savings on administrative expenses and allows businesses to use their resources more effectively, enhancing their bottom line.

Additionally, AI’s ability to process information rapidly leads to faster deal closures and improved cash flow. By reducing manual intervention and optimizing workflows, AI helps real estate firms close transactions more quickly, resulting in timely revenue generation. This efficiency decreases operational costs and provides a competitive edge, allowing businesses to stay ahead in a dynamic market. Adopting AI technology equips real estate firms to navigate industry changes with agility and precision.

Data Security in Automated Document Processing with AI

In the real estate sector, AI-driven automated document processing can enhance both efficiency and data security. By integrating encryption and secure access controls, these systems help protect sensitive information, such as contracts and client records, from unauthorized access and breaches. This security framework supports data confidentiality and builds trust with clients.

AI also boosts security through continuous monitoring of anomalies and potential threats. This real-time threat detection provides an extra layer of protection, allowing real estate professionals to manage documents efficiently while maintaining high standards of data security. With AI, businesses can streamline operations without compromising on data integrity.
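To make the idea of anomaly monitoring concrete, here is a minimal sketch that flags an unusual number of document downloads by a single account. It assumes a simple z-score rule against that account's recent history; the function name, the threshold of 3.0, and the sample counts are all hypothetical, and real monitoring systems typically use richer signals and models.

```python
from statistics import mean, pstdev


def is_anomalous(history, today, z_threshold=3.0):
    """Return True when today's count is far from the historical baseline.

    history: recent daily document-download counts for one account.
    today: the count observed today.
    """
    baseline = mean(history)
    spread = pstdev(history)
    if spread == 0:
        # A perfectly flat history: any deviation at all is unusual.
        return today != baseline
    return abs(today - baseline) / spread > z_threshold


recent_counts = [10, 12, 11, 9, 13]        # hypothetical daily downloads
print(is_anomalous(recent_counts, 12))     # a typical day
print(is_anomalous(recent_counts, 60))     # a sudden spike worth reviewing
```

A flagged event would normally trigger a review or a temporary access restriction rather than an automatic block, since a spike can also have a legitimate cause such as a closing deadline.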

Reducing Errors: The Accuracy of AI-Driven Documentation

AI-driven documentation is changing the real estate sector by significantly reducing errors in paperwork. Traditional methods often involve manual data entry and review, which can lead to mistakes, delays, and costly disputes. AI systems equipped with machine learning algorithms, by contrast, can automatically extract, analyze, and verify large volumes of data with a high degree of accuracy. This reduces the risk of human error and helps ensure compliance with legal standards, which is critical in real estate transactions. As a result, real estate professionals can experience smoother and faster approval processes.

Moreover, AI’s ability to review documents in real-time allows for the instant identification of inconsistencies and omissions that could otherwise be overlooked. By flagging potential issues early, AI solutions minimize the need for time-consuming corrections and reduce the chance of legal complications down the line. This enhanced accuracy and efficiency lead to greater trust between stakeholders, allowing agents, buyers, and sellers to focus on the deal rather than on documentation errors, ultimately improving the overall real estate transaction experience.

AI-Powered Document Review: Faster Approvals and Compliance Checks

AI-powered document review is transforming the real estate sector by automating critical tasks such as contract verification and compliance checks. Using advanced machine learning algorithms, AI swiftly identifies errors, missing information, and inconsistencies within documents, ensuring they meet regulatory standards. This not only reduces the time required for approvals but also minimizes the chances of human error, making transactions more efficient. Real estate professionals benefit from the speed and accuracy of AI, allowing them to close deals faster. As a result, the process becomes more streamlined, improving productivity across the board.

In addition to speeding up approvals, AI significantly reduces legal risks by ensuring compliance with the latest regulations. It continuously monitors changes in laws and industry standards, automatically updating document checks to reflect new requirements. This consistency helps both buyers and sellers avoid potential legal issues, fostering greater trust and transparency in real estate transactions. Moreover, AI’s ability to reduce manual labor leads to cost savings, making operations more efficient. As a result, AI is becoming a crucial asset in the modern real estate landscape.

The Future of Real Estate: Generative AI and Seamless Property Deals

Generative AI is set to transform the real estate industry by streamlining property transactions. AI-powered platforms can analyze vast data, helping buyers and sellers make quick, informed decisions. Virtual property tours, market trend predictions, and accurate valuations allow investors and agents to strategize more efficiently.

AI also enhances transaction security by automating documentation and reducing fraud. It speeds up the process while delivering personalized recommendations, creating a more seamless, transparent, and efficient property deal experience. The future of real estate, powered by AI, is more efficient and customer-centric than ever.

AI Innovation with AWS Infrastructure and Managed Services

Artificial Intelligence (AI) is transforming industries at an unprecedented pace, and Amazon Web Services (AWS) is at the forefront of this revolution. With its robust infrastructure and comprehensive managed services, AWS empowers businesses to innovate rapidly, scale effortlessly, and achieve their AI goals with precision. From startups to enterprise giants, AWS provides the essential tools and foundation models that fuel AI development, making complex processes more accessible and efficient.

AWS’s AI infrastructure offers a suite of powerful services, including access to pre-trained foundation models that can be integrated into various applications. Services such as Amazon SageMaker and AWS Lambda enable businesses to deploy and manage AI workloads without the need for extensive in-house expertise. With AWS’s scalable solutions, organizations can experiment with AI technologies, accelerate model training, and deploy solutions at a global scale, all while maintaining cost efficiency.

In a world where AI capabilities are becoming a key differentiator, leveraging AWS’s infrastructure and managed services positions businesses to stay ahead of the curve, driving innovation and delivering cutting-edge solutions to their customers.

Leveraging AWS Infrastructure for AI Advancements

AWS provides a powerful infrastructure that accelerates artificial intelligence (AI) advancements by offering scalable and high-performance computing resources. With services like Amazon EC2, businesses gain access to flexible computing power that can be tailored to the specific needs of their AI workloads. For more intensive tasks, AWS’s GPU-based instances, such as the P4 and P5 series, deliver the high computational capability required for training complex machine learning models.

Moreover, AWS supports a range of AI tools and frameworks, including TensorFlow, PyTorch, and Apache MXNet, which streamline the development of AI applications. Amazon SageMaker further simplifies the process by providing a comprehensive suite of tools for building, training, and deploying machine learning models. With robust data storage solutions like Amazon S3 and Amazon RDS, AWS supports efficient data management and security, making it easier for businesses to drive AI innovation and achieve technological goals.

Scalable AI Solutions: AWS’s Role in Innovation

AWS is at the forefront of scaling AI solutions, particularly with its robust suite of foundation models. These pre-trained models, available through services like Amazon SageMaker, provide organizations with a powerful starting point for their AI projects. By leveraging these advanced foundation models, businesses can avoid the complexity of building models from scratch and instead focus on customizing and fine-tuning them to meet specific needs. This approach significantly accelerates the development process, allowing for faster deployment and more efficient utilization of resources.

Additionally, AWS’s elastic computing infrastructure ensures that these AI models can scale effortlessly. Whether your application requires intensive training or real-time inference, AWS’s scalable compute resources, including EC2 and SageMaker, can handle varying workloads with ease. This flexibility not only optimizes performance but also reduces operational costs. With AWS’s foundation models and scalable infrastructure, organizations can drive innovation, stay competitive, and rapidly adapt to the evolving demands of the AI landscape.

Streamlining AI Operations with AWS Managed Services

Efficient AI operations hinge on the ability to seamlessly manage complex infrastructures, and AWS Managed Services are pivotal in this process. By offloading routine maintenance tasks and infrastructure management to AWS, businesses can focus on developing and deploying AI models rather than grappling with the intricacies of underlying infrastructure. AWS Managed Services provides automated patching, monitoring, and backups, ensuring that your AI systems run smoothly and securely without requiring constant oversight. This allows data scientists and engineers to devote their expertise to refining algorithms and enhancing model performance.

Furthermore, AWS Managed Services facilitate scalability and flexibility, crucial for AI applications that often experience variable workloads. With on-demand resource scaling, AWS ensures that your AI projects have access to the necessary computational power and storage as needed, optimizing performance and cost-efficiency. By leveraging these managed services, organizations can achieve faster deployment cycles, reduced operational overhead, and improved system reliability, thereby accelerating their AI innovation and gaining a competitive edge in the market.

Optimizing Cloud Management for Enhanced AI Performance

Cloud-native application development is a pivotal approach for optimizing cloud management and boosting AI performance. Unlike traditional applications, cloud-native apps are designed specifically to leverage cloud environments’ inherent flexibility, scalability, and resilience. This method involves building applications as a collection of microservices, which are loosely coupled and can be developed, deployed, and scaled independently. By adopting cloud-native principles, organizations can achieve greater agility, enabling rapid iteration and deployment of AI models and services.

In the context of AI, cloud-native development allows for seamless integration with various cloud-based AI tools and services. For instance, AWS provides a suite of managed services and APIs that can be easily incorporated into cloud-native applications. This integration ensures that AI solutions can scale effortlessly to handle varying workloads and deliver optimal performance. Additionally, cloud-native applications benefit from automated scaling, high availability, and robust disaster recovery, which are crucial for maintaining the performance and reliability of AI-driven applications in a dynamic cloud environment.

Future Trends: AWS and the Evolution of AI Technologies

AWS is at the forefront of AI innovation, driving the future of AI technologies with its foundation models and machine learning tooling. Services and tools such as Amazon SageMaker and AWS Deep Learning AMIs provide the building blocks for developing advanced AI applications. By offering pre-trained models and preconfigured frameworks, AWS enables developers to accelerate their AI projects without starting from scratch. This streamlined approach can reduce time-to-market and improve the accuracy and efficiency of AI solutions.

Looking ahead, AWS’s foundation models are set to play a crucial role in the evolution of AI. The integration of these models with emerging technologies, like quantum computing and advanced data analytics, promises to unlock new possibilities in AI research and application. As AWS continues to innovate, its foundation models will evolve to support increasingly complex and sophisticated AI technologies, driving advancements across various industries and setting new standards for what AI can achieve.

Revolutionizing Logistics with IoT: Tracking, Fleet Management, and Automation

The logistics industry is undergoing a significant transformation, driven by the seamless integration of Internet of Things (IoT) technologies. With its ability to enable real-time tracking, advanced fleet management, and warehouse automation, IoT is revolutionizing the way logistics operations are carried out. By enhancing efficiency, transparency, and operational effectiveness, IoT is reshaping the logistics landscape. In this blog, we will delve into the profound impact of IoT on tracking, fleet management, and automation, and how it is revolutionizing the logistics industry.

Real-Time Tracking: Boosting Supply Chain Transparency

One of the most significant advancements IoT brings to logistics is real-time tracking. Traditionally, monitoring the movement of goods through the supply chain was a challenge fraught with delays and inaccuracies. IoT-enabled GPS devices and sensors have changed that, providing unprecedented visibility into the location and status of shipments.

IoT sensors can track vehicles, shipments, and assets in real-time, delivering precise information about their position and movement. This real-time data helps logistics companies and their clients to gain better transparency across the supply chain. For instance, if a shipment is delayed or rerouted, stakeholders are immediately notified, allowing them to make informed decisions and manage customer expectations.

Additionally, IoT sensors can monitor environmental conditions such as temperature, humidity, and vibration during transit. This is especially crucial for sensitive goods like pharmaceuticals, food, or electronics, where deviations from optimal conditions can lead to spoilage or damage. By setting parameters and receiving alerts if conditions fall outside these ranges, companies can take corrective actions swiftly, ensuring the integrity of their shipments.
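The "set parameters, receive alerts" pattern described above can be sketched as a simple range check on each sensor reading. The limits below are hypothetical values for a refrigerated shipment, and the metric names and `check_reading` helper are illustrative assumptions rather than any particular IoT platform's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Range:
    low: float
    high: float

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


# Hypothetical acceptable ranges for a refrigerated pharmaceutical shipment.
LIMITS = {
    "temperature_c": Range(2.0, 8.0),
    "humidity_pct": Range(30.0, 60.0),
}


def check_reading(reading: dict) -> list:
    """Return one alert message per metric that is missing or out of range."""
    alerts = []
    for metric, allowed in LIMITS.items():
        value = reading.get(metric)
        if value is None:
            alerts.append(f"{metric}: missing reading")
        elif not allowed.contains(value):
            alerts.append(f"{metric}: {value} outside {allowed.low}-{allowed.high}")
    return alerts


print(check_reading({"temperature_c": 5.0, "humidity_pct": 45.0}))  # no alerts
print(check_reading({"temperature_c": 9.5, "humidity_pct": 45.0}))  # temperature alert
```

Treating a missing reading as an alert is a deliberate choice in this sketch: a silent sensor during transit is often as much of a concern as a bad value.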

Fleet Management: Maximizing Efficiency with IoT

Effective fleet management is critical to the success of logistics operations. IoT technologies offer powerful tools to enhance fleet management by providing real-time data and predictive insights.

Route Optimization: IoT devices collect real-time information on traffic, weather, and road conditions. By analyzing this data, logistics companies can dynamically adjust routes for faster and more efficient deliveries. This not only reduces transit times but also lowers fuel consumption and operational costs. For example, if a traffic jam or severe weather is detected on a planned route, the system can suggest an alternative path, avoiding potential delays.

Predictive Maintenance: Another significant advantage of IoT in fleet management is predictive maintenance. Sensors installed in vehicles monitor performance and detect signs of wear and tear before they lead to breakdowns. By analyzing data on engine health, tire pressure, and other critical components, predictive maintenance systems can schedule repairs and replacements proactively. This approach minimizes unscheduled downtime, enhances safety, and extends the lifespan of vehicles, leading to cost savings and improved operational reliability.
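As a rough illustration of the route optimization idea described earlier in this section, the sketch below recomputes the fastest route when a live traffic update changes a travel time. It uses Dijkstra's shortest-path algorithm over a tiny hypothetical road graph; the place names and minute values are invented, and commercial routing engines account for far more than travel time alone.

```python
import heapq


def shortest_route(graph, start, goal):
    """Dijkstra's algorithm over travel times in minutes.

    graph maps each node to a dict of {neighbor: minutes}.
    Returns (total_minutes, path) or None if the goal is unreachable.
    """
    queue = [(0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, minutes in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + minutes, neighbor, path + [neighbor]))
    return None


# Hypothetical road network with travel times in minutes.
roads = {
    "depot": {"highway": 10, "side_street": 15},
    "highway": {"customer": 10},
    "side_street": {"customer": 12},
}

print(shortest_route(roads, "depot", "customer"))   # (20, ['depot', 'highway', 'customer'])

# A sensor feed reports a jam on the highway leg; rebuild the graph and re-plan.
jammed = {**roads, "highway": {"customer": 45}}
print(shortest_route(jammed, "depot", "customer"))  # (27, ['depot', 'side_street', 'customer'])
```

The routing logic itself does not change when conditions change. Only the edge weights do, which is why a steady stream of IoT data can drive re-planning without any redesign of the algorithm.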

Smart Warehousing: Automating Logistics Operations

Warehousing is a cornerstone of logistics, and IoT is driving a wave of automation and efficiency improvements in this area.

Smart Inventory Management: IoT-enabled sensors and RFID tags offer real-time tracking of inventory levels. This technology automates inventory management processes, such as reordering and stock updates, reducing the risk of stockouts and overstocking. With accurate and timely data on inventory levels, companies can ensure optimal stock levels and avoid costly disruptions in their supply chain.

Automation and Robotics: In modern warehouses, IoT-integrated robots and automated guided vehicles (AGVs) are revolutionizing operations. These systems can perform tasks like picking, sorting, and packing with high precision and speed. Automation reduces the reliance on manual labor, minimizes human errors, and increases overall efficiency. For example, AGVs can transport goods within a warehouse without human intervention, streamlining workflows and optimizing space utilization.
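The automated reordering mentioned under smart inventory management often comes down to a reorder-point rule: reorder when available stock falls to the expected demand during the supplier lead time plus a safety buffer. The sketch below assumes that classic formula; the function names and figures are hypothetical, and the on-hand count would come from RFID or sensor data in practice.

```python
def reorder_point(daily_demand, lead_time_days, safety_stock):
    """Expected demand during the supplier lead time, plus a safety buffer."""
    return daily_demand * lead_time_days + safety_stock


def needs_reorder(on_hand, on_order, daily_demand, lead_time_days, safety_stock):
    """True when available stock (on hand plus already ordered) hits the reorder point."""
    threshold = reorder_point(daily_demand, lead_time_days, safety_stock)
    return on_hand + on_order <= threshold


# Hypothetical SKU: 40 units sold per day, 5-day lead time, 60 units of safety stock.
print(reorder_point(40, 5, 60))            # 260
print(needs_reorder(250, 0, 40, 5, 60))    # True: 250 is at or below 260
print(needs_reorder(250, 100, 40, 5, 60))  # False: an open order already covers the gap
```

Counting units already on order avoids placing duplicate orders while a shipment is in transit.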

Predictive Maintenance: Reducing Costs and Downtime

Predictive maintenance is a game-changer in logistics, thanks to IoT’s ability to provide actionable insights and early warnings. By continuously monitoring the condition of equipment and vehicles, IoT systems can predict when maintenance is needed before failures occur.

This proactive approach to maintenance reduces the risk of unexpected breakdowns and associated downtime. Instead of waiting for equipment to fail, companies can schedule maintenance activities based on real-time data, ensuring that machinery operates smoothly and reliably. This not only enhances operational efficiency but also leads to significant cost savings by avoiding expensive emergency repairs and extending the lifespan of assets.
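One simple way to turn sensor data into a maintenance schedule is to fit a trend line to a wear measurement and estimate when it will cross a service threshold. The sketch below assumes roughly linear wear and uses an ordinary least-squares slope; the function name, the brake pad readings, and the 3 mm threshold are hypothetical, and production systems usually rely on more sophisticated models.

```python
def days_until_threshold(readings, threshold):
    """Estimate days from the last reading until the value drops to the threshold.

    readings: list of (day, value) pairs where the value decreases with wear.
    Returns None when there is too little data or no downward trend.
    """
    n = len(readings)
    if n < 2:
        return None
    mean_day = sum(day for day, _ in readings) / n
    mean_value = sum(value for _, value in readings) / n
    day_variance = sum((day - mean_day) ** 2 for day, _ in readings)
    if day_variance == 0:
        return None
    # Least-squares slope: change in value per day.
    slope = sum((day - mean_day) * (value - mean_value) for day, value in readings) / day_variance
    if slope >= 0:
        return None  # not wearing down, so no failure date to predict
    _, last_value = readings[-1]
    if last_value <= threshold:
        return 0.0  # already at or past the service limit
    return (threshold - last_value) / slope


# Hypothetical brake pad thickness in millimetres, measured every 10 days.
pad_readings = [(0, 12.0), (10, 11.0), (20, 10.0), (30, 9.0)]
print(days_until_threshold(pad_readings, threshold=3.0))  # roughly 60 days
```

With an estimate like this, the repair can be booked into a planned service window instead of waiting for a breakdown.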

The Future of Logistics: IoT’s Growing Influence

As IoT technologies continue to evolve, their influence on the logistics industry will only grow. The integration of advanced analytics, artificial intelligence, and machine learning with IoT data will enable even more sophisticated solutions and insights.

In the near future, we can expect further advancements in predictive analytics, allowing logistics companies to anticipate and respond to potential disruptions with greater accuracy. Enhanced automation and robotics will continue to streamline warehouse operations, while more connected and intelligent fleet management systems will optimize transportation networks.

Moreover, the increasing adoption of IoT in logistics will drive greater collaboration and data sharing among stakeholders, leading to more integrated and efficient supply chains. Companies that embrace these innovations will gain a competitive edge, improving their operational performance and delivering superior service to their customers.

In a nutshell, the integration of IoT technologies into logistics is transforming the industry in profound ways. Real-time tracking, advanced fleet management, and smart warehousing are just a few examples of how IoT is enhancing efficiency, transparency, and operational effectiveness. As these technologies continue to evolve, they will drive further innovations and improvements, shaping the future of logistics and supply chain management. Embracing IoT’s potential will be crucial for companies seeking to stay ahead in a rapidly changing market and deliver exceptional value to their customers.

Fashion Forward: The Generative AI Revolution in E-commerce Retail

As the e-commerce industry evolves, retailers face new challenges and opportunities, making it crucial to integrate Generative AI into their business strategies. This transformative technology is revolutionizing both front-end customer interactions and back-end processes, from personalized search suggestions and AI-generated collections to localized product content. By embracing Generative AI, brands can offer highly personalized shopping experiences, enhance customer engagement, and drive innovation, ensuring they stay competitive in a rapidly changing market. In this blog, we delve into the profound impact of Generative AI on e-commerce, exploring its role in personalized shopping, virtual try-ons, predictive fashion trends, and the future of AI-powered business models.

Personalized Shopping Experiences: AI in eCommerce

Personalization has become the cornerstone of modern eCommerce, and AI is at the forefront of delivering this tailored shopping experience. Generative AI analyzes vast amounts of customer data, including browsing history, past purchases, and even social media behavior, to create individualized recommendations. These personalized suggestions ensure that customers are presented with products that align with their preferences, increasing the likelihood of a purchase.

Beyond product recommendations, AI is enhancing the overall shopping journey. AI-driven chatbots provide real-time assistance, answering customer queries and guiding them through the buying process. These virtual assistants are not just reactive; they proactively suggest products, offer discounts, and provide personalized content based on the customer’s behavior. This level of customization not only improves the customer experience but also fosters brand loyalty, as shoppers feel that the brand understands and caters to their unique needs.

Moreover, visual search capabilities powered by AI allow customers to upload images and find similar products, revolutionizing the way people discover new items. This feature is particularly valuable in the fashion industry, where style and aesthetics play a crucial role in purchase decisions. By leveraging AI, eCommerce platforms are creating a more intuitive, engaging, and personalized shopping experience that resonates with today’s consumers.
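Visual search of this kind is commonly built on embeddings: an image model turns each picture into a numeric vector, and similar-looking products end up with similar vectors. The sketch below shows only the ranking step, using cosine similarity. The three-number vectors and product names are made-up stand-ins for real image embeddings, which typically have hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def most_similar(query, catalog, top_k=2):
    """Return the names of the top_k catalog items closest to the query vector."""
    ranked = sorted(
        catalog,
        key=lambda name: cosine_similarity(query, catalog[name]),
        reverse=True,
    )
    return ranked[:top_k]


# Hypothetical embeddings; a real system would compute these with an image model.
catalog = {
    "red_dress": [0.9, 0.1, 0.0],
    "crimson_gown": [0.8, 0.2, 0.1],
    "blue_jeans": [0.0, 0.2, 0.9],
}
uploaded_photo = [1.0, 0.1, 0.0]

print(most_similar(uploaded_photo, catalog))  # ['red_dress', 'crimson_gown']
```

At catalog scale, the exhaustive sort shown here would usually be replaced by an approximate nearest-neighbor index, but the similarity measure stays the same.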

Virtual Try-Ons: Redefining Customer Engagement

One of the most significant challenges in online fashion retail is the inability of customers to physically try on products before making a purchase. Generative AI is addressing this issue through virtual try-ons, a technology that allows customers to see how clothes, accessories, or makeup would look on them without leaving their homes.

Virtual try-ons use AI to analyze a customer’s body shape, skin tone, and facial features, creating a realistic representation of how products will fit and appear. This technology not only enhances customer confidence in their purchase decisions but also reduces return rates, as customers are more likely to choose items that suit them well.

For example, AI-powered virtual fitting rooms enable shoppers to mix and match outfits, experiment with different styles, and see the results in real time. This interactive experience bridges the gap between online and in-store shopping, offering the convenience of eCommerce with the assurance of a fitting room experience. By redefining customer engagement through virtual try-ons, AI is helping retailers create a more immersive and satisfying shopping experience.

Predictive Fashion Trends: How AI is Shaping the Future

The fashion industry is notoriously fast-paced, with trends emerging and fading quickly. Generative AI is playing a pivotal role in predicting these trends, enabling retailers to stay ahead of the curve. By analyzing data from various sources, including social media, fashion shows, and consumer behavior, AI can identify emerging trends before they become mainstream.

This predictive capability allows retailers to optimize their inventory, ensuring they stock the right products at the right time. For instance, AI can forecast the popularity of certain styles, colors, or materials, enabling brands to respond quickly to changing consumer preferences. This agility is crucial in the fashion industry, where timing is everything.

Moreover, AI can help designers and brands experiment with new ideas, generating innovative designs based on current trends. These AI-generated designs can inspire fashion lines, allowing brands to create unique collections that resonate with consumers. By leveraging AI’s predictive power, retailers can not only keep up with the latest trends but also set new ones, establishing themselves as industry leaders.

How AI is Revolutionizing the Retail Industry

The impact of AI on the retail industry extends beyond eCommerce and fashion. Across the entire retail landscape, AI is driving innovation, efficiency, and customer satisfaction. From supply chain optimization to in-store experiences, AI is revolutionizing how retailers operate and interact with customers.

In supply chain management, AI is improving efficiency by predicting demand, optimizing inventory levels, and reducing waste. Machine learning algorithms analyze sales data, seasonal trends, and external factors such as economic conditions to forecast demand accurately. This enables retailers to manage their inventory more effectively, ensuring that popular products are always in stock while minimizing excess inventory.
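As a baseline for the kind of demand forecasting described above, the sketch below uses simple exponential smoothing, which weights recent sales more heavily than older ones. It is a deliberately simple stand-in for the machine learning models mentioned in the text: it ignores seasonality and external factors, and the function name, the smoothing factor, and the sales figures are hypothetical.

```python
def smoothed_forecast(sales, alpha=0.5):
    """Forecast next-period demand with simple exponential smoothing.

    sales: past demand per period, oldest first.
    alpha: weight on the most recent observation, between 0 (exclusive) and 1.
    """
    if not sales:
        raise ValueError("sales history must not be empty")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in the range (0, 1]")
    level = float(sales[0])
    for observed in sales[1:]:
        # Blend the newest observation with the running estimate.
        level = alpha * observed + (1 - alpha) * level
    return level


weekly_units = [100, 120, 110]          # hypothetical weekly sales
print(smoothed_forecast(weekly_units))  # 110.0
```

A higher `alpha` reacts faster to sudden shifts in demand, while a lower one smooths out noise, so the value is usually tuned against historical data.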

In physical stores, AI is enhancing the shopping experience through technologies such as smart mirrors, automated checkout systems, and personalized promotions. Smart mirrors, for instance, allow customers to try on clothes virtually, offering styling suggestions based on their preferences. Automated checkout systems use AI to streamline the payment process, reducing wait times and improving customer satisfaction.

Moreover, AI-driven personalization extends to in-store promotions, where customers receive tailored offers based on their purchase history and behavior. This level of customization ensures that promotions are relevant, increasing the likelihood of a sale and improving the overall shopping experience.

The Future of Retail: AI-Powered Business Models

As AI continues to evolve, it is paving the way for new business models in the retail industry. AI-powered platforms are enabling retailers to offer highly customized products and services, catering to the specific needs and preferences of individual customers.

One emerging business model is the concept of hyper-personalization, where AI tailors every aspect of the shopping experience to the individual customer. This goes beyond product recommendations and extends to personalized pricing, marketing, and even product design. By leveraging AI, retailers can create unique experiences for each customer, differentiating themselves in a competitive market.

Another promising development is the rise of AI-driven marketplaces, where algorithms match customers with products and services that best meet their needs. These platforms use AI to analyze customer data, predict preferences, and curate personalized shopping experiences. This not only enhances customer satisfaction but also allows smaller brands to reach their target audience more effectively.

Generative AI is transforming the e-commerce retail industry by seamlessly integrating online and offline experiences, ensuring customers receive personalized and consistent interactions across all channels. By tracking online behavior, AI tailors in-store experiences with customized recommendations and promotions, enhancing customer engagement and satisfaction. As AI continues to advance, it is not only driving innovation in personalized shopping and virtual try-ons but also predicting fashion trends and unlocking new business models. Retailers who embrace this technology will be at the forefront of a fashion-forward, customer-centric future in commerce.

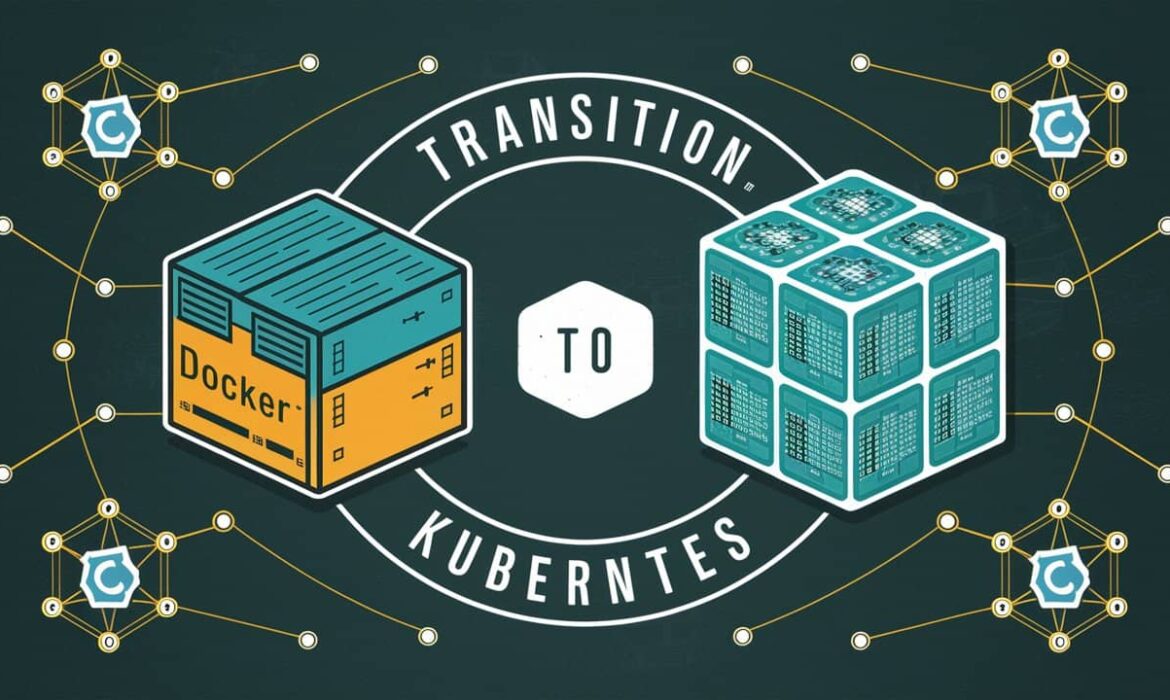

Supercharging AWS Cloud Operations: A Journey from Docker to Kubernetes

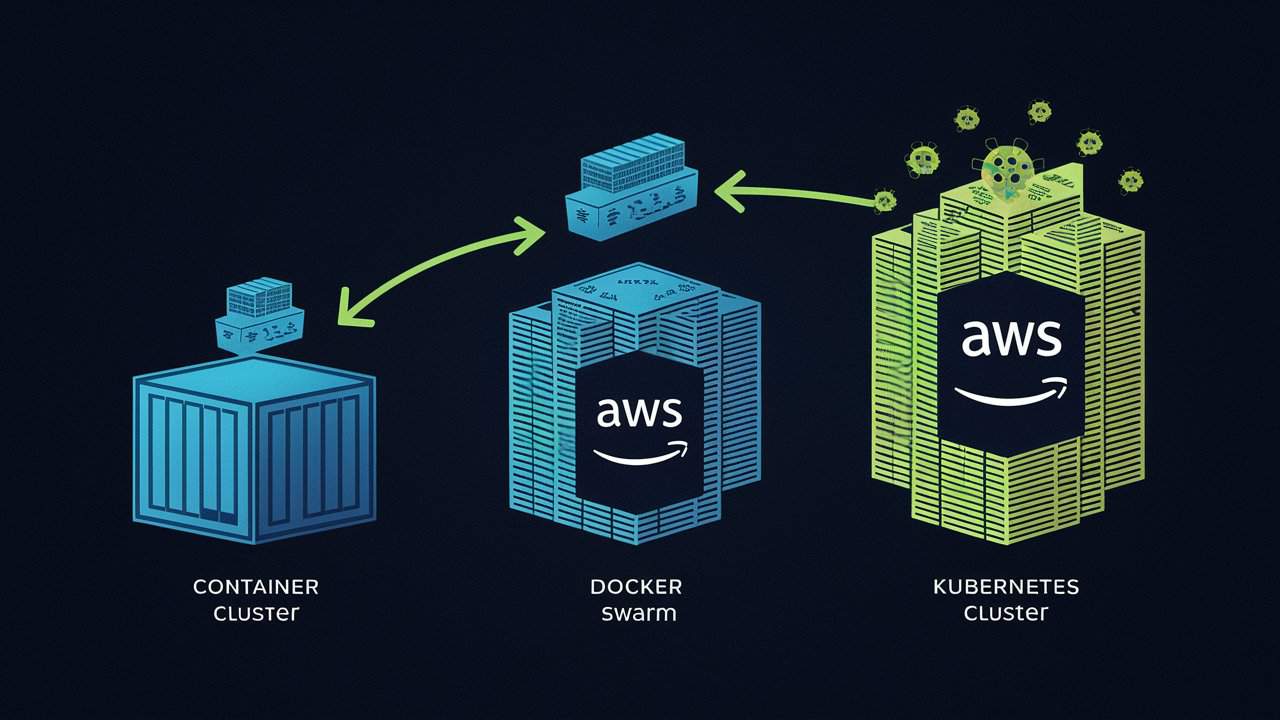

Understanding the Docker-Kubernetes-AWS Ecosystem

The Docker-Kubernetes-AWS ecosystem forms a robust foundation for modern cloud operations. Docker streamlines the packaging and deployment of applications by containerizing them, ensuring consistent environments across different stages of development. Kubernetes, an orchestration tool, takes this a step further by automating the deployment, scaling, and management of these containers, providing resilience and high availability through features like self-healing and load balancing.

Integrating this with AWS amplifies the ecosystem’s capabilities. AWS offers scalable infrastructure and managed services that complement Kubernetes’ automation, like Amazon EKS (Elastic Kubernetes Service), which simplifies Kubernetes deployment and management. This trio enables organizations to build, deploy, and manage applications more efficiently, leveraging Docker’s portability, Kubernetes’ orchestration, and AWS’s extensive cloud infrastructure. Together, they create a seamless, scalable, and resilient environment that is crucial for cloud-native applications.

Why is Kubernetes the Next Step After Docker on AWS?

For teams that have mastered Docker on AWS, Kubernetes is the natural progression for scaling containerized applications. While Docker simplifies container management, Kubernetes takes it further by orchestrating these containers at scale, providing advanced features like automated scaling, self-healing, and seamless rollouts. This level of automation is essential for handling complex workloads in a dynamic cloud environment like AWS, where demands can shift rapidly.

Kubernetes also offers a significant advantage over Docker Swarm in terms of flexibility and resilience. With Kubernetes, you can deploy applications across multiple regions and clouds, supporting high availability and fault tolerance. Its robust ecosystem, including Helm for package management and integrations with CI/CD pipelines, streamlines cloud operations, reducing manual interventions and minimizing downtime. In an AWS environment, Kubernetes leverages services like Amazon EKS, enabling you to manage clusters with AWS-native tools, which enhances security, compliance, and cost efficiency.

In summary, Kubernetes on AWS empowers cloud operations teams with unparalleled control, scalability, and efficiency, making it the logical next step after Docker for enterprises aiming to optimize their cloud-native architectures.

Container Orchestration Simplified: How Kubernetes Enhances Docker Workloads

Container orchestration is pivotal for scaling and managing containerized applications, especially in cloud environments. Kubernetes, an open-source platform, simplifies this by automating the deployment, scaling, and management of Docker containers. It acts as a robust control plane, ensuring that containerized applications run seamlessly across clusters, regardless of underlying infrastructure.

In cloud operations, Kubernetes enhances Docker workloads by providing resilience, scalability, and efficient resource utilization. It automatically handles load balancing, self-healing, and rolling updates, ensuring minimal downtime and consistent application performance. By abstracting the complexity of managing multiple containers, Kubernetes allows cloud teams to focus on application development rather than infrastructure management.
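The self-healing behaviour described above comes from a reconciliation pattern: a controller repeatedly compares the desired state with the observed state and corrects any difference. The sketch below is a toy model of that idea in plain Python, not Kubernetes code; the `Cluster` class, the `reconcile` function, and the `web-N` replica names are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Cluster:
    """Toy stand-in for a cluster: just a set of running replica names."""
    running: set = field(default_factory=set)
    _ids: count = field(default_factory=count, repr=False)

    def start_replica(self) -> str:
        name = f"web-{next(self._ids)}"
        self.running.add(name)
        return name

    def stop_replica(self, name: str) -> None:
        self.running.discard(name)


def reconcile(cluster: Cluster, desired_replicas: int) -> list:
    """Drive the observed state toward the desired state; return the actions taken."""
    actions = []
    while len(cluster.running) < desired_replicas:
        actions.append(("start", cluster.start_replica()))
    while len(cluster.running) > desired_replicas:
        victim = sorted(cluster.running)[-1]
        cluster.stop_replica(victim)
        actions.append(("stop", victim))
    return actions


cluster = Cluster()
reconcile(cluster, desired_replicas=3)   # initial rollout starts web-0, web-1, web-2
cluster.stop_replica("web-1")            # simulate a crashed container
healing_actions = reconcile(cluster, desired_replicas=3)
print(healing_actions)                   # the controller starts one replacement
```

The operator only declares how many replicas should exist; the loop decides what to start or stop. Real controllers run this comparison continuously and work against the cluster API rather than an in-memory set.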

Moreover, Kubernetes integrates smoothly with cloud platforms such as AWS, GCP, and Azure, whose managed Kubernetes offerings connect clusters to each provider’s monitoring, logging, and security services. This integration helps cloud-native applications scale, adapt to changing demands, and maintain high availability, making Kubernetes an indispensable tool for modern cloud operations.

Efficient Application Scaling for Cloud Developers with Docker, Kubernetes, and AWS

Efficient application scaling is vital for cloud developers, and integrating Docker, Kubernetes, and AWS offers a robust solution. Docker streamlines application deployment by encapsulating it in lightweight containers, ensuring consistent performance across different environments. These containers can be easily scaled up or down based on real-time demand, simplifying the management of varying workloads.

Kubernetes enhances this process by orchestrating these containers at scale. As an open-source platform, Kubernetes automates deployment, scaling, and operational management of containerized applications, allowing developers to concentrate on development rather than infrastructure. When paired with AWS, Kubernetes benefits from the cloud provider’s comprehensive ecosystem, including Elastic Kubernetes Service (EKS), which facilitates seamless cluster management.

AWS further supports scalable cloud operations with Auto Scaling and Elastic Load Balancing. Auto Scaling adds or removes compute capacity as traffic fluctuates, while Elastic Load Balancing distributes incoming requests across healthy targets. Together, these services maintain application responsiveness and optimize cost efficiency. Combined, Docker, Kubernetes, and AWS provide a cohesive framework for efficient, scalable cloud management.
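To make the scaling discussion concrete, the sketch below applies the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The function name, the default bounds, and the sample CPU figures are illustrative assumptions; the 0.1 tolerance reflects the commonly documented default.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Return the replica count suggested by the HPA-style scaling rule."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


# Four pods averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))   # 6
# Four pods averaging 30% CPU against a 60% target: scale in.
print(desired_replicas(4, current_metric=30, target_metric=60))   # 2
```

The same proportional idea underlies target-tracking policies in general: capacity follows the ratio between what is observed and what is wanted, within limits the operator sets.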

Streamlined CI/CD Pipelines: Leveraging Kubernetes in Your Docker-Based AWS Environment

Streamlined CI/CD pipelines are essential for optimizing cloud operations, particularly when integrating Kubernetes with Docker in an AWS environment. Kubernetes automates the deployment, scaling, and management of Docker containers, making it easier to manage complex applications. This orchestration simplifies updates, enhances rollback capabilities, and minimizes downtime, ultimately boosting operational efficiency.
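The update and rollback behaviour mentioned above can be illustrated with a simplified rolling update: replicas are replaced in small batches, each batch is health-checked, and the whole change is abandoned if a batch fails. This is a toy model under stated assumptions, not how a Kubernetes Deployment is implemented (real Deployments rely on settings such as `maxUnavailable` and `maxSurge` plus readiness probes); `rolling_update` and the `is_healthy` callback are hypothetical names.

```python
def rolling_update(replicas, new_version, is_healthy, batch_size=1):
    """Replace versions batch by batch; restore the original list if a batch fails."""
    original = list(replicas)
    updated = list(replicas)
    for start in range(0, len(updated), batch_size):
        batch = range(start, min(start + batch_size, len(updated)))
        for i in batch:
            updated[i] = new_version
        # Stop and roll back as soon as any replica in the batch is unhealthy.
        if not all(is_healthy(updated[i]) for i in batch):
            return original, False
    return updated, True


fleet = ["v1", "v1", "v1", "v1"]
ok_result = rolling_update(fleet, "v2", is_healthy=lambda version: True, batch_size=2)
bad_result = rolling_update(fleet, "v2-broken",
                            is_healthy=lambda version: version != "v2-broken")
print(ok_result)    # every replica on v2, update succeeded
print(bad_result)   # fleet unchanged, update rolled back
```

In a CI/CD pipeline the health check would typically be an automated smoke test or probe, so a bad build is caught after the first batch instead of after a full release.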

In AWS, combining Kubernetes with Docker leverages the full power of scalable, resilient infrastructure. Services like Amazon EKS (Elastic Kubernetes Service) manage Kubernetes clusters, allowing you to focus on application development rather than infrastructure maintenance. This integration fosters a more agile development process, accelerating time-to-market while ensuring high availability and performance. By aligning your CI/CD practices with these technologies, you achieve a more efficient and reliable cloud operation, meeting the demands of modern software delivery.

Optimizing Docker Swarm and Kubernetes on AWS: Key Takeaways

Optimizing Docker Swarm and Kubernetes on AWS can significantly enhance cloud operations, leading to more efficient resource utilization and streamlined deployments. Docker Swarm’s simplicity is a strong advantage for managing containerized applications. Running Swarm nodes on Amazon EC2 behind Elastic Load Balancing (ELB) and inside Auto Scaling groups can provide high availability and dynamic capacity, provided new nodes are configured to join the swarm. Note that AWS Fargate does not run Docker Swarm: it is a serverless compute option for Amazon ECS and Amazon EKS, so teams that want to stop managing the underlying hosts need to move their workloads to one of those services.

On the other hand, Kubernetes provides more advanced orchestration capabilities and is ideal for complex, microservices-based applications. Amazon EKS (Elastic Kubernetes Service) offers a managed Kubernetes control plane, which simplifies cluster management as demand grows. To optimize Kubernetes on AWS, use Amazon CloudWatch for monitoring and AWS IAM for fine-grained access control. Combining Kubernetes’ robust orchestration with AWS’s scalable infrastructure supports resilient, cost-effective, and highly available cloud operations.

Precision and Progress: Advancing Manufacturing with Generative AI

The manufacturing industry has evolved from manual craftsmanship in ancient times to the mechanized production of the Industrial Revolution, and further to the automation era of the 20th century. Despite these advancements, the industry has long faced complaints about inefficiencies, waste, high costs, and inconsistent quality. Workers also raised concerns about unsafe conditions and job displacement due to automation. The constant pursuit of innovation has driven the industry to seek solutions, culminating in today’s adoption of advanced technologies like AI.

Today, Generative AI is at the forefront of this evolution, bringing transformative changes to the industry. By leveraging algorithms that can generate designs, optimize processes, and predict outcomes, generative AI is revolutionizing how products are conceived and produced. In manufacturing, it enables the creation of complex, innovative designs that were previously impossible, while also streamlining production workflows. From reducing material waste to enhancing product quality, generative AI is not just a tool but a game-changer, driving the next wave of innovation and competitiveness in the manufacturing sector. The future of manufacturing is being shaped by these intelligent, adaptive technologies.

Enhancing Design Accuracy with AI-Driven Modeling

AI-driven modeling is revolutionizing design accuracy in the manufacturing industry, setting new standards that surpass traditional methods. Conventional design processes often involve extensive trial and error, which can be time-consuming and costly. In contrast, generative AI algorithms analyze vast datasets to create precise models, optimizing for factors such as material efficiency, durability, and cost. These algorithms can simulate thousands of design variations, ensuring that the final product is not only innovative but also meets exact specifications. This high level of precision reduces errors and minimizes the need for costly revisions, resulting in products that perform reliably in real-world conditions.

Moreover, AI-driven modeling enables rapid prototyping and testing, significantly speeding up the design process. Engineers can quickly visualize complex designs, make real-time adjustments, and refine models based on immediate feedback. This iterative process allows for faster development cycles, enabling manufacturers to bring products to market more quickly. Additionally, the ability to explore a wider range of design possibilities encourages innovation, allowing manufacturers to create cutting-edge products that were once thought to be unachievable. By enhancing accuracy, reducing costs, and fostering creativity, AI-driven modeling is transforming the landscape of manufacturing design, making it more efficient and effective than ever before.

Streamlining Production Processes with Predictive Analytics

Streamlining production processes through predictive analytics represents a major leap in manufacturing efficiency. By applying machine learning algorithms to data from sources such as real-time sensors and historical production records, predictive analytics allows manufacturers to anticipate potential disruptions before they occur. This proactive approach means that maintenance can be performed before equipment failures happen, reducing unplanned downtime and ensuring smooth operations. As a result, overall production efficiency is significantly enhanced, with fewer interruptions and more consistent output.
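As a minimal illustration of the predictive maintenance idea above, the sketch below flags sensor readings that deviate sharply from the recent past using a rolling z-score. It is deliberately simple and stands in for the machine learning models a production system would use; the function name, the window size, the threshold, and the vibration values are all made-up assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings far from the mean of the preceding window."""
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # A reading more than `threshold` standard deviations away is suspicious.
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical vibration readings (mm/s) from one machine; index 7 is a spike.
vibration_mm_s = [2.0, 2.1, 1.9, 2.0, 2.1, 2.0, 1.9, 4.5, 2.0, 2.1]
print(flag_anomalies(vibration_mm_s))   # [7]
```

A flagged index would normally open a maintenance ticket or trigger an inspection, so the fault is addressed before it causes unplanned downtime.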

In addition to optimizing maintenance, predictive analytics plays a crucial role in refining supply chain management. By delivering precise demand forecasts, it enables manufacturers to accurately align inventory levels with anticipated needs. This foresight helps in adjusting production schedules and managing stock more effectively, minimizing the risks of both overstocking and shortages. Consequently, manufacturers benefit from a more responsive and flexible production system that not only reduces costs but also boosts customer satisfaction. Embracing predictive analytics allows manufacturers to improve operational efficiency, cut waste, and maintain a competitive edge in a rapidly evolving industry.
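The link between a demand forecast and a production decision can be sketched with simple exponential smoothing, one of the most basic forecasting techniques. Real systems use richer models that account for seasonality and external signals; the function names, the smoothing factor, and the order figures below are illustrative assumptions only.

```python
def exponential_smoothing_forecast(demand, alpha=0.5):
    """Forecast next-period demand by exponentially weighting past observations."""
    if not demand:
        raise ValueError("demand history must not be empty")
    level = demand[0]
    for observed in demand[1:]:
        # Blend the newest observation with the running estimate.
        level = alpha * observed + (1 - alpha) * level
    return level


def units_to_produce(forecast, on_hand, safety_stock=0):
    """Cover forecast demand plus a safety buffer, never scheduling a negative run."""
    return max(0, forecast + safety_stock - on_hand)


weekly_orders = [100, 120, 110, 130]           # hypothetical order history
forecast = exponential_smoothing_forecast(weekly_orders)
print(forecast)                                # 120.0
print(units_to_produce(forecast, on_hand=50, safety_stock=20))   # 90.0
```

A higher `alpha` makes the forecast react faster to recent changes, while a lower value smooths out noise; choosing it is a trade-off between responsiveness and stability.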

Quality Control Redefined: AI Inspection and Optimization

Quality control has always been a critical aspect of manufacturing, but traditional methods often struggle with inconsistencies and inefficiencies. The introduction of AI inspection and optimization represents a paradigm shift in how quality is maintained. AI-powered systems utilize advanced machine learning algorithms to inspect products with unprecedented accuracy. By analyzing images and sensor data, these systems can detect defects, deviations, and anomalies that might elude human inspectors. This not only enhances the precision of quality checks but also speeds up the inspection process, reducing the likelihood of costly recalls and ensuring higher standards of product excellence.

Moreover, AI-driven quality control systems can continuously learn and adapt over time. As they process more data, they refine their algorithms to improve detection capabilities and predict potential issues before they arise. This proactive approach enables manufacturers to address problems at their source, preventing defects from reaching the final stages of production. By integrating AI with existing quality control processes, companies can achieve a level of consistency and reliability that was previously unattainable.

The benefits of AI in quality control extend beyond mere detection. Optimization algorithms can analyze production data to identify trends and patterns that might indicate underlying issues. This data-driven insight allows manufacturers to fine-tune their processes, enhance operational efficiency, and minimize waste. As AI continues to evolve, its role in redefining quality control promises to drive innovation and set new benchmarks for excellence in manufacturing.
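The deviation checks discussed in this section build on a long-standing statistical idea: establish what "normal" looks like from known-good parts, then flag measurements that fall outside it. The sketch below computes classic three-sigma control limits from a baseline and checks new samples against them. It is a statistical baseline, not an AI inspection system, and the function names and measurements are hypothetical.

```python
from statistics import mean, stdev


def control_limits(baseline):
    """Return (lower, centre, upper) three-sigma limits from known-good samples."""
    centre = mean(baseline)
    spread = stdev(baseline)
    return centre - 3 * spread, centre, centre + 3 * spread


def out_of_control(samples, lower, upper):
    """Return indices of samples outside the control limits."""
    return [i for i, value in enumerate(samples) if value < lower or value > upper]


# Hypothetical shaft diameters in millimetres.
baseline_mm = [10.0, 10.2, 9.8, 10.1, 9.9]
lower, centre, upper = control_limits(baseline_mm)
new_parts_mm = [10.1, 9.7, 10.6, 10.0, 9.4]
print(out_of_control(new_parts_mm, lower, upper))   # [2, 4]
```

Learning-based inspection extends this by working on images and many correlated signals at once, but the output is the same kind of decision: which parts need attention and how the process is drifting.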

Benefits of AI in Manufacturing

Cost Reduction

The integration of AI into manufacturing processes heralds a new era of efficiency and cost-effectiveness. One of the most compelling advantages is cost reduction. Leveraging AI for predictive maintenance, optimizing resource allocation, and employing generative design techniques enables manufacturers to achieve substantial savings. Predictive maintenance algorithms, for instance, preemptively identify equipment issues, which minimizes downtime and costly repairs. Additionally, AI-driven resource optimization ensures that materials and energy are used efficiently, further cutting operational expenses.

Improved Product Quality

Another pivotal benefit is improved product quality. AI-powered quality control systems set a new standard for precision and consistency in manufacturing. These advanced systems detect even the slightest deviations or defects, ensuring that each product meets rigorous quality standards. By reducing human error and variability, AI enhances overall product reliability and customer satisfaction. Manufacturers can confidently deliver products that not only meet but exceed customer expectations, thereby bolstering their reputation in competitive markets.

Increased Productivity

Furthermore, AI enhances productivity through smart automation and streamlined processes. Automated systems powered by AI algorithms handle complex tasks with speed and accuracy, resulting in increased production output without compromising quality. By optimizing workflows and eliminating bottlenecks, manufacturers achieve higher throughput rates and quicker turnaround times. This not only boosts operational efficiency but also allows businesses to meet growing demand effectively. In essence, AI transforms manufacturing into a more agile and responsive industry, capable of adapting swiftly to market dynamics while maintaining high standards of productivity and quality.

Revolutionizing Telecom Customer Support with Amazon Connect

In the telecom industry, customer interactions often revolve around technical support and billing inquiries—two areas that significantly impact customer satisfaction and loyalty. Managing these inquiries efficiently is no small feat, especially given the complexity and volume of requests that telecom companies handle daily. Enter Amazon Connect, a cloud-based contact center service that’s transforming how telecom providers manage these critical customer touchpoints. With Amazon Connect, technical support becomes more streamlined, and billing inquiries are resolved faster, ensuring that customers receive timely, accurate assistance without the typical frustrations associated with traditional call centers.

By leveraging Amazon Connect, telecom companies can offer a seamless experience where customers are quickly routed to the right resources, whether they need help troubleshooting a service issue or clarifying a billing statement. The integration of advanced features like automated call distribution, real-time analytics, and AI-driven customer service tools allows telecom providers to address customer needs efficiently and effectively. In an industry where customer experience is a key differentiator, Amazon Connect is proving to be an invaluable asset for telecom companies aiming to enhance their support services and maintain a competitive edge.

Leveraging AWS Services for Enhanced Telecom Solutions Support

Leveraging AWS services, telecom companies can significantly enhance their customer support solutions by integrating Amazon Connect with the broader AWS ecosystem. By using AWS Lambda, telecom providers can automate call flows, streamline customer interactions, and reduce response times. This real-time processing capability allows for dynamic and personalized customer experiences, crucial in today’s competitive telecom landscape.

Additionally, AWS offers services such as Amazon S3, which provides durable storage with encryption options, and AWS CloudTrail, which records API activity for auditing. Used together, they help keep stored data secure and support compliance with industry regulations. These services provide telecom companies with the scalability and reliability needed to manage large volumes of customer data while maintaining high service levels. By leveraging AWS, telecom providers can deliver more efficient, scalable, and secure support solutions, ultimately transforming their customer service operations.

Scaling Customer Support with Amazon Connect and AWS Cloud Solutions

In the telecommunications industry, it’s vital to adapt customer support to the increasing demands and expectations of consumers. Amazon Connect, when combined with AWS Cloud solutions, provides a reliable and flexible platform for telecom companies to address these needs. Leveraging AWS’s elasticity, telecom providers can easily adjust the capacity of their contact centers based on fluctuating call volumes without the expense of maintaining on-premises infrastructure. This adaptability ensures consistent, high-quality customer service, even during unexpected surges in demand, such as during outages or promotional events.

Furthermore, integrating AWS Cloud solutions with Amazon Connect allows telecom providers to enhance and expand their support operations effortlessly. AWS facilitates the rapid deployment of new features, the integration of third-party applications, and global expansion without the limitations typically associated with traditional systems. This scalability isn’t merely about managing more calls; it’s about modernizing customer support to meet current expectations. Telecommunication companies can introduce advanced AI-driven features, utilize real-time analytics, and seamlessly introduce new services across different regions while maintaining a unified and efficient customer support experience. This ability to scale and innovate is essential for maintaining competitiveness in the dynamic telecom industry.

AWS-Powered Analytics: Unlocking Insights for Telecom Customer Experience

By leveraging the analytical power of AWS, telecom companies can gain deep insights into their customer interactions. By integrating Amazon Connect with AWS tools such as AWS Glue for data preparation and Amazon QuickSight for dashboards, providers can analyze large volumes of customer data in near real time. This capability enables companies to spot emerging trends, track customer engagement metrics, and, when paired with sentiment analysis, understand how customers feel in each interaction, leading to more personalized and efficient support tailored to individual customer needs.

In addition, AWS analytics services help telecom companies convert data into actionable insights, boosting customer satisfaction and loyalty. By using predictive analytics driven by advanced AWS machine learning algorithms, companies can predict customer needs, reduce churn rates, and proactively tackle potential issues. This integration not only transforms customer service operations but also enhances overall efficiency, providing telecom enterprises with a significant competitive advantage in an ever-changing industry.

Transforming Customer Experiences with Amazon Connect and AWS Lambda

In today’s ever-evolving telecom industry, optimizing communication channels is essential for delivering top-notch customer service. Amazon Connect, a cloud-based contact center platform, integrates with AWS Lambda to offer a highly efficient and responsive customer experience. AWS Lambda provides serverless computing, so telecom firms can run code that is invoked directly from Amazon Connect contact flows without managing servers. This integration streamlines operations, automates repetitive tasks, and helps ensure that customer interactions are handled promptly and accurately.

By harnessing AWS Lambda, telecom providers can elevate their communication strategies by implementing tailored workflows and real-time data processing. For example, Lambda functions can intelligently direct calls based on customer profiles or handle complex queries with minimal delay. This integration of Amazon Connect and AWS Lambda not only optimizes operational workflows but also enhances customer satisfaction through timely and personalized support.
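The routing example above can be sketched as a Lambda handler. When a contact flow invokes a function, Amazon Connect passes contact details under `Details.ContactData` and expects a flat set of key-value pairs back, which the flow can then use in later blocks. Everything else here is an assumption for illustration: the in-memory `CUSTOMER_PROFILES` table stands in for a real CRM or database lookup, and the queue names and returned keys are hypothetical.

```python
# Hypothetical customer data; a real function would query a CRM or database.
CUSTOMER_PROFILES = {
    "+15555550100": {"tier": "business", "open_ticket": True},
    "+15555550101": {"tier": "business", "open_ticket": False},
}


def lambda_handler(event, context):
    """Choose a target queue from the caller's profile and return it to the flow."""
    contact = event.get("Details", {}).get("ContactData", {})
    phone = (contact.get("CustomerEndpoint") or {}).get("Address", "")
    profile = CUSTOMER_PROFILES.get(phone)

    if profile is None:
        queue = "general_support"
    elif profile["open_ticket"]:
        queue = "technical_support"    # continue an existing troubleshooting case
    elif profile["tier"] == "business":
        queue = "business_billing"
    else:
        queue = "general_support"

    # Return a flat dictionary of simple string values for the contact flow.
    return {"targetQueue": queue, "knownCustomer": "true" if profile else "false"}


sample_event = {
    "Name": "ContactFlowEvent",
    "Details": {
        "ContactData": {
            "Channel": "VOICE",
            "CustomerEndpoint": {"Address": "+15555550100", "Type": "TELEPHONE_NUMBER"},
        },
        "Parameters": {},
    },
}
print(lambda_handler(sample_event, None))
```

In the contact flow, the returned `targetQueue` value would typically be read as an external attribute and used by a branching block to decide where the call goes next.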

Future-Proofing Telecom with Amazon Connect and AWS AI/ML Services

In the rapidly evolving telecom industry, staying ahead of customer expectations is crucial. Amazon Connect, combined with AWS AI/ML services, offers telecom companies the tools to future-proof their customer support operations. By integrating AI-driven solutions like AWS’s natural language processing and machine learning models, telecom providers can deliver highly personalized and predictive customer experiences. This not only improves satisfaction but also reduces operational costs by automating routine inquiries and streamlining complex interactions.

Furthermore, AWS’s scalable infrastructure ensures that telecom companies can seamlessly adapt to fluctuations in demand. Whether it’s handling a surge in customer queries during peak times or scaling down during quieter periods, Amazon Connect’s cloud-based architecture, powered by AWS, provides the flexibility telecom providers need. This adaptability, paired with AI and ML capabilities, positions telecom companies to meet the demands of tomorrow’s customers while maintaining efficient and cost-effective operations.

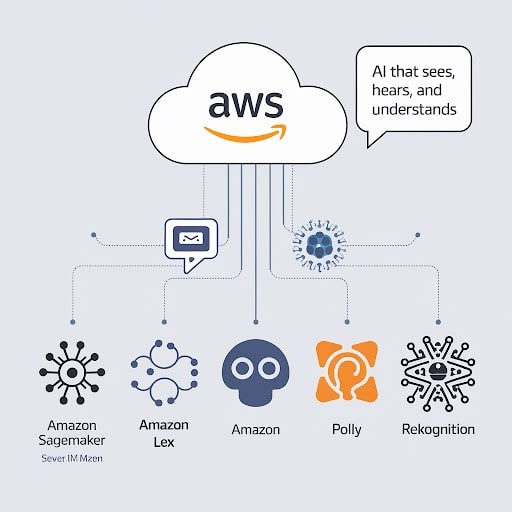

Differentiating AWS’s AI/ML Ecosystem: Amazon Bedrock vs Amazon SageMaker

Cloud service providers like Amazon Web Services (AWS) acknowledge the increasing demand for artificial intelligence and machine learning capabilities, consistently unveiling new offerings. AWS distinguishes itself with a wide array of AI and ML solutions, providing businesses a versatile toolkit for optimizing operations and driving innovation. Through AWS, businesses access advanced AI and ML solutions seamlessly, sidestepping infrastructure complexities and specialized expertise. This ongoing innovation, exemplified by services like Amazon Bedrock and Amazon SageMaker, ensures businesses maintain competitiveness in a rapidly evolving landscape. These platforms empower organizations to effectively leverage cutting-edge technologies, enhancing agility and efficiency in achieving objectives and remaining at the forefront of today’s dynamic business environment.

Amazon Bedrock

Amazon Bedrock is a fully managed AWS service that gives applications access to foundation models from Amazon and third-party providers through a single API. Because the models are hosted and scaled by AWS, teams can add generative AI features without provisioning or managing model infrastructure. Around that core, Bedrock offers features for model governance, monitoring, and workflow automation, which help organizations keep deployments compliant, reliable, and efficient as usage grows. Its main operational features are:

Model Governance

Bedrock supports model governance through access controls, data protection measures, and configurable safeguards such as Guardrails that filter undesirable inputs and outputs. With these mechanisms in place, organizations can mitigate the risks associated with ML deployments and uphold privacy regulations, fostering trust and accountability in the ML process.

Monitoring Capabilities

Bedrock integrates with Amazon CloudWatch, so organizations can track invocation counts, latency, and errors in near real time. This enables timely detection of issues or anomalies, and model invocation logging can be enabled for closer inspection of requests and responses. Through diligent monitoring, organizations can promptly address deviations from expected outcomes and keep model-backed features reliable.

Workflow Automation

Bedrock removes much of the operational work of ML: for on-demand use, models are served by AWS, so there are no model endpoints to deploy or scale by hand. Features such as Agents can also orchestrate multi-step tasks that call company systems. This automation saves time and resources, letting organizations ship ML-backed features faster and at scale while maintaining consistency and reliability.

In summary, Amazon Bedrock offers a comprehensive suite of features tailored to enhance ML operations, covering model governance, monitoring, and workflow automation. By leveraging Bedrock’s capabilities, organizations can ensure regulatory compliance, drive efficiency, and foster innovation in their ML initiatives with confidence.

Amazon SageMaker

Amazon SageMaker, a fully managed service offered by AWS, simplifies the end-to-end process of creating, training, and deploying machine learning models at scale. It achieves this by integrating pre-built algorithms and frameworks into a unified platform, easing the burden of managing infrastructure setups. With its scalable infrastructure, SageMaker caters to diverse workloads, ensuring flexibility and efficiency for organizations. Managed notebooks within SageMaker enable seamless collaboration among data scientists and developers, facilitating faster model development cycles. Additionally, SageMaker automates various aspects of the machine learning workflow, streamlining processes and boosting productivity. Through its comprehensive features, SageMaker empowers businesses to deploy models rapidly and efficiently, fostering innovation and driving significant advancements in artificial intelligence applications.

Integrated Platform

SageMaker consolidates the entire ML lifecycle within a unified environment, from model development and training to deployment. This integration reduces complexity and facilitates collaboration between data scientists and developers, leading to faster development cycles and promoting innovation and efficiency.

Elastic Infrastructure

SageMaker’s automatic scaling capabilities adapt seamlessly to fluctuating workloads and data volumes, optimizing resource usage and cost-effectiveness. This eliminates the need for manual management of infrastructure, enabling organizations to confidently tackle large-scale ML tasks while ensuring smooth operations regardless of demand variations.

Rich Library of Algorithms and Frameworks

SageMaker offers a comprehensive collection of pre-built algorithms and frameworks, simplifying the process of model development. Data scientists leverage these resources to accelerate experimentation, refine models, and gain insights, thereby speeding up the development process and enabling rapid deployment of ML solutions.

Managed Notebooks

SageMaker’s managed notebooks provide a secure and reproducible environment for collaboration between data scientists and developers. With support for popular languages and version control features, these notebooks enhance productivity, streamline development workflows, and contribute to successful outcomes in ML initiatives.

Data Protection and Security Requirements

Amazon Bedrock and Amazon SageMaker prioritize data protection and security throughout the ML lifecycle. They employ encryption protocols for data both in transit and at rest, ensuring sensitive information remains secure. Stringent access controls are enforced to regulate data access, bolstered by regular security audits to uphold compliance with industry standards. Moreover, both platforms offer features tailored to meet regulatory requirements, facilitating seamless adherence to data protection regulations. Integration with other AWS services further enhances security measures, fostering a comprehensive ecosystem where confidentiality, integrity, and availability of data are paramount. This commitment to robust security practices instills trust among users, enabling them to confidently leverage these platforms for their ML initiatives while mitigating risks associated with data breaches or unauthorized access.

Business Benefits of the Ecosystem

Amazon SageMaker Ecosystem

As the foundational pillar of AWS’s AI/ML arsenal, SageMaker offers a holistic approach to simplifying the ML lifecycle. Its unified platform provides a seamless experience, boasting scalable infrastructure that eliminates the complexities of managing resources. With a rich selection of pre-packaged algorithms and frameworks, developers can expedite model development, focusing more on innovation rather than infrastructure. The integration of managed notebooks facilitates collaborative environments, fostering synergy between data scientists and developers throughout the ML journey. From initial ideation to model deployment, SageMaker ensures efficiency and scalability, empowering organizations to drive transformative AI initiatives with ease.

Amazon Bedrock Ecosystem

The Bedrock ecosystem complements SageMaker by offering hosted foundation models together with tools for governance, monitoring, and optimization. Through governance mechanisms such as access controls and guardrails, Bedrock helps organizations comply with regulatory standards and internal policies, mitigating risks associated with AI deployments. Its monitoring capabilities enable continuous tracking of usage and model performance metrics, providing actionable insights for improvement. By automating key workflows, Bedrock streamlines operational processes, enhancing efficiency and scalability. Together, these features support resilient and scalable ML solutions that drive sustained innovation and value creation.

The ecosystem provides businesses with streamlined ML processes, leveraging SageMaker’s unified platform for efficient model development and deployment. With scalable infrastructure and pre-packaged algorithms, organizations can accelerate innovation while managing resources effectively. Managed notebooks foster collaboration, enhancing team productivity. Meanwhile, the Bedrock ecosystem ensures compliance, monitors model performance, and automates workflows, optimizing operational efficiency. Together, these components empower businesses to deploy resilient and scalable ML solutions, driving continuous improvement and value creation.

Within the AWS ecosystem, Amazon Bedrock and Amazon SageMaker offer robust AI/ML capabilities catering to different needs. Bedrock excels in quickly integrating advanced AI features with minimal customization, thanks to its pre-configured models and streamlined workflows. Conversely, SageMaker is designed for cases requiring deep customization and model fine-tuning, providing granular control over the training process. While Bedrock prioritizes convenience and speed, SageMaker emphasizes flexibility and control, albeit with more setup and management requirements. Ultimately, the choice between Bedrock and SageMaker depends on project-specific needs, balancing the urgency for rapid deployment against the necessity for customization and control.
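To show what "minimal customization" looks like in practice on the Bedrock side, the sketch below builds a request for the Bedrock Runtime Converse API using `boto3`. Building the request is separated from sending it, so the structure can be inspected without AWS credentials. The helper names, the region, the prompt, and the model identifier `example.model-id-v1` are placeholders chosen for illustration; a real call needs a model ID that is enabled in your account.

```python
def build_converse_request(prompt, model_id, max_tokens=256, temperature=0.2):
    """Assemble keyword arguments for the Bedrock Runtime `converse` call."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def ask_bedrock(prompt, model_id, region="us-east-1"):
    """Send the prompt to a hosted model; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the request builder works without it

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_converse_request(prompt, model_id))
    return response["output"]["message"]["content"][0]["text"]


# Placeholder model ID: replace with a model enabled in your own account.
request = build_converse_request(
    "Summarize this support ticket in two sentences.",
    model_id="example.model-id-v1",
)
print(request["messages"][0]["role"])   # user
```

There is no training job, endpoint, or instance type in this flow, which is the convenience the comparison above attributes to Bedrock. The equivalent SageMaker path would involve choosing a container or algorithm, training or importing a model, and deploying an endpoint, in exchange for much finer control.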

2024 Technology Industry Outlook: The Return of Growth with New Advancements

In 2024, rapid technological advancement is reshaping society. Across fields ranging from artificial intelligence and biotechnology to renewable energy, breakthroughs are changing how industries operate, and society faces a landscape where challenges and opportunities are intertwined. Whether it’s the transformation of healthcare delivery or the streamlining of business operations, the effects of these advancements reach every aspect of life. Many of tomorrow’s achievements are still in their early stages, but they have the potential to mature and reshape the world in remarkable ways.

The technology industry has encountered disruption, regulatory complexities, and ethical quandaries in its journey. Yet, from these challenges arise invaluable insights and prospects for advancement. The rapid pace of innovation often outstrips regulatory frameworks, sparking debates around data privacy, cybersecurity, and ethical technology use. Additionally, the industry grapples with the perpetual struggle of talent acquisition and retention amidst soaring demand. However, within these trials lie abundant opportunities for progress. Advancements in artificial intelligence, blockchain, and quantum computing hold the potential to reshape industries and improve efficiency. Collaborative endeavors between governments, academia, and industry stakeholders can foster an innovation-friendly environment while addressing societal concerns. By embracing these prospects and navigating challenges with resilience, the technology sector stands poised for sustained growth and positive transformation.

Emerging Trends: Driving Growth in 2024

The technological landscape of 2024 is teeming with emerging trends set to exert profound influence across diverse sectors. One such trend gaining significant traction is the pervasive adoption of artificial intelligence (AI) and machine learning (ML) applications. These technologies are revolutionizing industries by streamlining processes through automation, empowering decision-making with predictive analytics, and delivering personalized user experiences. For instance, within healthcare, AI-driven diagnostic systems analyze vast datasets and medical images to aid in disease identification and treatment planning, thus enhancing overall patient outcomes.

Another notable trend shaping the technological horizon is the ascendancy of blockchain technology. Initially conceived for cryptocurrencies, blockchain’s decentralized and immutable architecture is now being harnessed across a spectrum of industries including finance, supply chain management, and healthcare. Through blockchain-based smart contracts, transactions are automated and secured, thus reducing costs and combating fraudulent activities prevalent in financial and supply chain operations.

Furthermore, the Internet of Things (IoT) continues its upward trajectory, facilitating seamless connectivity between devices and systems for real-time data exchange. This interconnectedness fosters smarter decision-making, heightened operational efficiency, and enriched customer experiences. In the agricultural sector, IoT sensors monitor environmental variables and crop health, optimizing irrigation schedules and ultimately bolstering agricultural yields.

Additionally, advancements in biotechnology are catalyzing innovations with far-reaching implications for healthcare, agriculture, and environmental conservation. CRISPR gene-editing technology, for instance, holds immense promise for treating genetic disorders, engineering resilient crop varieties, and addressing challenges posed by climate change.

Revolutionizing Industries: Impact of Advanced Technologies

The integration of advanced technologies is catalyzing a paradigm shift across industries, fundamentally altering business models, operational frameworks, and customer interactions. In manufacturing, the adoption of automation and robotics is revolutionizing production processes, driving down operational costs, and elevating product quality standards. Notably, companies like Tesla are leveraging extensive automation within their Gigafactories to ramp up production of electric vehicles, thereby maximizing output while minimizing costs.

In the realm of retail, e-commerce platforms are leveraging AI algorithms to deliver personalized product recommendations and enhance customer engagement. The recommendation engine deployed by retail giants like Amazon analyzes user preferences and past purchase behavior to tailor product suggestions, thereby augmenting sales and fostering customer satisfaction.

Furthermore, advanced technologies are reshaping the financial services sector, with fintech startups disrupting traditional banking and investment practices. Platforms such as LendingClub, which began as a peer-to-peer lender, use AI algorithms to evaluate credit risk and offer alternative financial solutions to borrowers. The convergence of emerging technologies is driving innovation, unlocking new avenues for growth, and reshaping industries in profound ways. Organizations that embrace these advancements and adapt their strategies accordingly are poised to thrive in the dynamic technological landscape of 2024 and beyond.

Innovations Driving Growth: Breakthroughs and Developments

In 2024, groundbreaking innovations are propelling growth across various sectors, marking a transformative era of technological progress. Quantum computing stands out as a potential breakthrough, with the prospect of reshaping industries such as finance, healthcare, cybersecurity, and logistics. As the hardware matures, quantum computers are expected to tackle certain complex problems that are impractical for classical machines, paving the way for novel opportunities and increased efficiencies.

Moreover, advanced renewable energy technologies are driving growth in response to the urgent need for climate change mitigation. Innovations in solar, wind, and energy storage solutions are reshaping the energy landscape by reducing reliance on fossil fuels and fostering sustainable development. Not only do these advancements address environmental concerns, but they also stimulate new markets and create employment opportunities, laying the foundation for a brighter and more sustainable future.

Challenges Ahead: Navigating Obstacles in the Path to Progress

As we march into the future, there are formidable challenges awaiting us on the path to progress. One such obstacle is the ethical implications of emerging technologies. As artificial intelligence, biotechnology, and other innovations advance, ethical dilemmas surrounding privacy, security, and the responsible use of these technologies become increasingly complex. Striking a balance between innovation and ethical considerations will require careful navigation and robust regulatory frameworks.

Additionally, there are challenges related to workforce displacement and reskilling in the face of automation and technological disruption. As automation becomes more prevalent across industries, there is a growing concern about job displacement and the need for upskilling or reskilling the workforce to adapt to new roles and technologies. Ensuring a smooth transition for displaced workers and equipping them with the skills needed for the jobs of the future will be crucial for maintaining societal stability and fostering inclusive growth.

Moreover, global challenges such as climate change and resource depletion continue to loom large, necessitating innovative solutions and concerted international efforts. Adapting to the impacts of climate change, transitioning to sustainable energy sources, and mitigating environmental degradation will require collaborative action and innovative approaches from governments, businesses, and civil society alike. Despite these challenges, navigating the obstacles on the path to progress with resilience, foresight, and cooperation holds the promise of a brighter and more sustainable future for generations to come.

The Role of Regulation: Balancing Innovation and Responsibility

Regulation serves as the cornerstone of industry dynamics, orchestrating a delicate balance between innovation and accountability. Its primary objective lies in guiding the ethical development and deployment of emerging technologies, thus mitigating risks and ensuring transparency. By delineating clear guidelines, regulators cultivate an ecosystem conducive to innovation while concurrently protecting the interests of consumers, society, and the environment. This equilibrium is pivotal in preserving trust, nurturing sustainable growth, and fortifying the welfare of individuals and communities amidst the swift currents of technological advancement. In essence, effective regulation acts as a safeguard, steering industries towards responsible practices while fostering a culture of innovation.

Embracing the Era of Technological Renaissance

The Technological Renaissance marks a monumental shift towards unprecedented innovation in every facet of human existence. From artificial intelligence to blockchain, biotechnology, and renewable energy, these transformative technologies are reshaping societal norms and unlocking vast possibilities. As humanity strides towards heightened interconnectedness and efficiency, boundaries between the physical and digital realms blur, propelled by advancements in data analytics and automation. This convergence of innovation not only offers solutions to previously insurmountable challenges but also has the potential to revolutionize traditional practices, especially in healthcare and sustainability.

Yet, embracing this renaissance entails more than mere adaptation; it demands a steadfast commitment to ethical considerations and responsible innovation. As society traverses this transformative era, embracing the potential of these advancements can unlock unparalleled opportunities for growth, progress, and societal betterment, laying the groundwork for a brighter and more sustainable future.

Automated Document Summarization through NLP and LLM: A Comprehensive Exploration

Summarization, fundamentally, is the skill of condensing abundant information into a brief and meaningful format. In a data-saturated world, the capacity to distill extensive texts into concise yet comprehensive summaries is crucial for effective communication and decision-making. Whether dealing with research papers, news articles, or business reports, summarization is invaluable for saving time and improving information clarity. The ability to streamline information in any document provides a distinct advantage, emphasizing brevity and to-the-point presentation.

In our fast-paced digital age, where information overload is a common challenge, the need for efficient methods to process and distill vast amounts of data is more critical than ever. One groundbreaking solution to this challenge is automated document summarization, a transformative technique leveraging the power of Natural Language Processing (NLP) and Large Language Models (LLMs). In this blog, we’ll explore the methods, significance, and potential impact of automated document summarization.

Document Summarization Mechanism

Automated document summarization employs Natural Language Processing (NLP) algorithms to analyze and extract key information from a text. This mechanism involves identifying significant sentences, phrases, or concepts, considering factors like frequency and importance. Techniques may include extractive methods, selecting and arranging existing content, or abstractive methods, generating concise summaries by understanding and rephrasing information. These algorithms enhance efficiency by condensing large volumes of text while preserving essential meaning, facilitating quick comprehension and decision-making.
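To make the frequency idea concrete, here is a minimal sketch of an extractive summarizer that scores each sentence by the average frequency of its words and keeps the top-scoring ones. The function names, the tiny stopword list, and the regex-based sentence splitter are all assumptions chosen for illustration, not a production approach.

```python
import re
from collections import Counter

# Small illustrative stopword list (an assumption; real systems use larger lists).
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "are",
             "it", "that", "this", "for", "on", "with", "as", "by"}

def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def tokenize(text):
    """Lowercase the text and keep words that are not stopwords."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]

def extractive_summary(text, num_sentences=2):
    """Return the num_sentences highest-scoring sentences in their original order."""
    sentences = split_sentences(text)
    freq = Counter(tokenize(text))  # word frequencies over the whole document

    def score(sentence):
        words = tokenize(sentence)
        # Average frequency, so long sentences are not favored automatically.
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)),
                    key=lambda i: score(sentences[i]), reverse=True)
    chosen = sorted(ranked[:num_sentences])  # restore document order
    return " ".join(sentences[i] for i in chosen)
```

Because the summary reuses sentences verbatim, this is an extractive method; an abstractive method would instead generate new wording.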

The Automated Summarization Process

1. Data Preprocessing

Before summarization begins, the raw data undergoes preprocessing. This involves cleaning and organizing the text to ensure optimal input for the NLP and LLM models. Removing irrelevant information, normalizing formatting, and handling special characters are integral steps in preparing the data.

2. Input Encoding

The prepared data is then encoded to create a numerical representation that the LLM can comprehend. This encoding step is crucial for translating textual information into a format suitable for the model’s processing.

3. Summarization Model Application

Once encoded, the data is fed into the LLM, which utilizes its pre-trained knowledge to identify key information, understand context, and generate concise summaries. This step involves the model predicting the most relevant and informative content based on the given input.

4. Output Decoding

The generated summary is decoded back into human-readable text for presentation. This step ensures that the summarization output is coherent, grammatically sound, and effectively conveys the essence of the original document.
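The four steps above can be sketched end to end with a toy example. Everything here is a simplification made for illustration: the word-level vocabulary stands in for a real subword tokenizer, and `toy_model` is a placeholder that simply returns the first sentence, where a real system would call an LLM at that point.

```python
import re

TOKEN_PATTERN = r"\w+|[^\w\s]"  # words, or single punctuation marks

def preprocess(raw):
    """Step 1: drop HTML-style tags and control characters, collapse whitespace."""
    text = re.sub(r"<[^>]+>", " ", raw)
    text = re.sub(r"[\x00-\x1f\x7f]", " ", text)
    return re.sub(r"\s+", " ", text).strip()

def build_vocab(text):
    """Assign an integer id to each distinct lowercase token (toy vocabulary)."""
    vocab = {}
    for tok in re.findall(TOKEN_PATTERN, text.lower()):
        vocab.setdefault(tok, len(vocab))
    return vocab

def encode(text, vocab):
    """Step 2: turn text into a list of integer token ids."""
    return [vocab[tok] for tok in re.findall(TOKEN_PATTERN, text.lower())]

def toy_model(token_ids, end_id, max_tokens=20):
    """Step 3 (placeholder for an LLM): emit ids up to the first sentence end."""
    out = []
    for t in token_ids:
        out.append(t)
        if t == end_id or len(out) >= max_tokens:
            break
    return out

def decode(token_ids, vocab):
    """Step 4: map ids back to text and reattach punctuation."""
    inverse = {i: tok for tok, i in vocab.items()}
    text = " ".join(inverse[i] for i in token_ids)
    return re.sub(r"\s+([.,;:!?])", r"\1", text)

raw = "<p>The  lease starts in May.\tRent is due monthly.</p>"
clean = preprocess(raw)
vocab = build_vocab(clean)
summary = decode(toy_model(encode(clean, vocab), vocab["."]), vocab)
```

The toy vocabulary lowercases everything, so the decoded text loses capitalization; real tokenizers preserve enough information to restore the original casing.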

Methods for Document Summarization

Extractive Document Summarization using Large Language Models (LLMs) involves the identification and extraction of key sentences or phrases from a document to form a concise summary. LLMs leverage advanced natural language processing techniques to analyze the document’s content, considering factors such as importance, relevance, and coherence. By selecting and assembling these extractive components, the model generates a summary that preserves the essential information from the original document. This method provides a computationally efficient approach for summarization, particularly when dealing with extensive texts, and benefits from the contextual understanding and linguistic nuances captured by LLMs.

Abstractive Document Summarization using Natural Language Processing (NLP) involves generating concise summaries that go beyond simple extraction. NLP models analyze the document’s content, comprehend its context, and create original, coherent summaries. This technique allows for a more flexible representation of information, because the model can rephrase and merge complex ideas and details rather than copy sentences verbatim. The main risk is that generated text may alter or misstate the source content. Even so, abstractive summarization can improve the readability and informativeness of the summary, making it a valuable tool for condensing diverse and intricate textual content.

Multi-Level Summarization

Combining extractive and abstractive summarization is a more recent approach that works well for short texts. However, when the input text exceeds the model’s token limit, multi-level summarization becomes necessary. This method condenses longer texts by applying multiple layers of summarization, which may be extractive, abstractive, or both. This section explores two multi-level summarization techniques: extractive-abstractive summarization and abstractive-extractive summarization.
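A minimal sketch of the multi-level idea follows: split the text into chunks that fit the token limit, summarize each chunk, then summarize the joined partial summaries, repeating until everything fits in one call. The `summarize` argument is a hypothetical callable standing in for whatever model is used, tokens are approximated by whitespace-separated words, and `first_words_stub` exists only so the sketch runs without a model.

```python
def count_tokens(text):
    """Approximate the token count by whitespace-separated words (an assumption)."""
    return len(text.split())

def chunk_text(text, max_tokens):
    """Split text into consecutive chunks of at most max_tokens words."""
    words = text.split()
    return [" ".join(words[i:i + max_tokens])
            for i in range(0, len(words), max_tokens)]

def multi_level_summarize(text, summarize, max_tokens):
    """Summarize chunk by chunk until the text fits in a single model call."""
    while count_tokens(text) > max_tokens:
        chunks = chunk_text(text, max_tokens)
        combined = " ".join(summarize(chunk) for chunk in chunks)
        if count_tokens(combined) >= count_tokens(text):
            # Guard against looping forever if the summarizer does not shorten.
            raise ValueError("summarize() must return text shorter than its input")
        text = combined
    return summarize(text)

def first_words_stub(chunk):
    """Placeholder summarizer for demonstration: keep the first three words."""
    return " ".join(chunk.split()[:3])

long_text = " ".join(f"w{i}" for i in range(20))
result = multi_level_summarize(long_text, first_words_stub, max_tokens=8)
```

Splitting on a fixed word count can cut sentences in half; splitting on sentence or paragraph boundaries is usually a better choice in practice.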

Extractive-Abstractive Summarization combines two stages to create a comprehensive summary. Initially, it generates an extractive summary of the text, capturing key information. Subsequently, an abstractive summarization system is employed to refine this extractive summary, aiming to make it more concise and informative. This dual-stage process enhances the overall accuracy of the summarization, surpassing the capabilities of extractive methods in isolation. By integrating both extractive and abstractive approaches, the method ensures a more nuanced and detailed summary, ultimately providing a richer understanding of the content. This innovative technique demonstrates the synergistic benefits of leveraging both extractive and abstractive methods in the summarization process.
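The two stages can be sketched as follows. Stage one keeps the highest-scoring sentences that fit within a word budget, and stage two passes the shortened text to an abstractive model. The frequency-based scoring, the `token_budget` parameter, and the `abstractive_summarize` callable are assumptions for illustration; any extractive scorer and any abstractive model could take their place.

```python
import re
from collections import Counter

def extract_within_budget(text, token_budget):
    """Stage 1: keep the best-scoring sentences that fit in token_budget words."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(re.findall(r"[a-z']+", text.lower()))

    def score(sentence):
        words = re.findall(r"[a-z']+", sentence.lower())
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    kept, used = [], 0
    ranked = sorted(range(len(sentences)),
                    key=lambda i: score(sentences[i]), reverse=True)
    for i in ranked:
        cost = len(sentences[i].split())
        if used + cost <= token_budget:  # skip sentences that would overflow
            kept.append(i)
            used += cost
    return " ".join(sentences[i] for i in sorted(kept))  # original order

def extractive_abstractive(text, abstractive_summarize, token_budget):
    """Stage 2: hand the extractive summary to an abstractive model."""
    return abstractive_summarize(extract_within_budget(text, token_budget))
```

Setting `token_budget` just below the abstractive model’s input limit lets the first stage act as a filter, so the second stage only sees the content judged most relevant.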

Abstractive-Extractive Summarization combines elements of both approaches, extracting key information from the document while also generating novel, concise content. This method uses natural language processing to identify salient points for extraction and employs abstractive techniques to improve the summary’s fluency and coherence. By integrating extractive and abstractive elements, this approach aims to produce summaries that are both informative and linguistically nuanced, offering a balanced synthesis of existing and novel content from the source document.

Comparing Techniques