Transforming Retail: Generative AI and IoT in Supply Chain

The Synergy of Generative AI and IoT in Retail

IoT enhances efficiency and personalization by delivering real-time data on inventory, shipments, and equipment, allowing for accurate tracking, predictive maintenance, and automated inventory management. Generative AI further amplifies this by analyzing extensive data to generate actionable insights, optimizing customer interactions with advanced chat support, and forecasting trends.

Together, they streamline operations, reduce costs, and improve customer experiences. IoT ensures that retailers have up-to-date information, while Generative AI leverages this data to enhance decision-making and personalize service, driving greater operational efficiency and customer satisfaction.

Revolutionize Customer Support in Retail with Amazon Connect

By leveraging its advanced cloud-based technology, which integrates seamlessly with IoT, generative AI, and conversational AI, Amazon Connect empowers retailers to offer an exceptional customer experience through real-time data collection and analysis. IoT devices capture and relay critical information about customer interactions, purchase history, and system performance. This data allows for proactive problem resolution, enabling retailers to address issues before they impact the customer experience, and to personalize interactions based on real-time insights.

Generative AI and conversational AI further enhance customer support by providing intelligent, context-aware responses. Generative AI can create tailored solutions and suggestions based on customer inquiries, while conversational AI enables natural language processing for more effective communication through chatbots and voice systems. This technology ensures that customers receive prompt, accurate, and personalized assistance, whether they are seeking product information, tracking orders, or resolving issues. By automating routine tasks and delivering targeted support, Amazon Connect transforms retail customer service into a more responsive, efficient, and customer-centric operation, setting a new standard in the industry.

Avoiding Downtime and Delays: How AI Solves Supply Chain Problems

AI significantly enhances supply chain efficiency by improving predictive maintenance and reducing operational disruptions. Traditional maintenance often leads to unplanned downtime due to unforeseen equipment failures. AI changes this by using IoT sensors and data analytics to continuously monitor equipment, predicting potential issues before they arise. This enables timely maintenance and minimizes unexpected delays.

Additionally, AI optimizes inventory and resource management by forecasting equipment needs and potential failures. This allows businesses to schedule maintenance during off-peak times and manage spare parts inventory more effectively. By addressing maintenance proactively, AI reduces operational interruptions and avoids the costs associated with unplanned downtime. Overall, AI-driven predictive maintenance ensures a more reliable and efficient supply chain, tackling key challenges and driving smoother operations.
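
To make the monitoring idea concrete, here is a minimal Python sketch of one common approach: comparing each new sensor reading against a rolling baseline (a rolling z-score). The function name, window size, threshold, and sample vibration values are all illustrative assumptions, not details of any particular product, and a production system would likely use a richer model.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate sharply from the recent baseline.

    Each reading is compared with the mean and standard deviation of the
    `window` readings that precede it (a rolling z-score).
    """
    anomalies = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Flat baseline: any change at all counts as a deviation.
            if readings[i] != mu:
                anomalies.append(i)
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical vibration readings (mm/s) from a conveyor motor sensor.
vibration = [2.1, 2.0, 2.2, 2.1, 2.0, 2.1, 2.2, 4.8, 2.1, 2.0]
print(flag_anomalies(vibration))  # the spike at index 7 is flagged
```

A flagged index could then feed a maintenance ticket or a scheduling step, so the inspection happens during an off-peak window rather than after a failure.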

AI-Enhanced Real-Time Tracking and Visibility in Supply Chains

AI-enhanced real-time tracking and visibility in supply chains represent a significant advancement in logistics and inventory management. By integrating generative AI with IoT technologies, companies can achieve unprecedented levels of insight and efficiency throughout their supply chain operations. IoT devices collect real-time data on inventory levels, shipment statuses, and equipment performance. This data is then processed by generative AI algorithms, which analyze vast amounts of information to provide actionable insights and predictive analytics.

Generative AI enhances traditional IoT capabilities by not only monitoring current conditions but also forecasting potential issues and trends. For example, AI can predict delays based on historical data and current conditions, allowing companies to take preemptive actions to mitigate disruptions. Additionally, AI-driven analytics help optimize inventory levels, improving stock management and reducing waste. With real-time visibility and advanced predictive capabilities, businesses can enhance decision-making, streamline operations, and improve overall efficiency in their supply chains. This integrated approach sets a new standard for operational excellence, providing a competitive edge in the fast-paced world of supply chain management.

AI’s Impact on Shopping: Transforming the Retail Experience

1. Personalized Product Suggestions:

In e-commerce, AI enhances the shopping experience by offering tailored product suggestions. When a customer searches for clothing, AI analyzes their preferences and browsing history to recommend complementary items. For example, if a customer looks for a dress, AI might suggest matching accessories or alternative styles, helping them discover relevant products and improving their overall shopping experience.

2. AI-Powered Virtual Fitting Rooms:

AI-powered virtual fitting rooms are revolutionizing online shopping by allowing customers to create digital avatars that match their body size and skin tone. These avatars enable users to try on various clothing sizes and styles virtually, reducing uncertainty and return rates. This technology enhances customer satisfaction by helping shoppers make better choices and enjoy a more accurate fitting experience. Many platforms are now adopting these features to improve their online retail services.
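
The product-suggestion idea in item 1 can be illustrated with a very small Python sketch. It uses plain co-occurrence counts ("customers who bought X also bought Y"), which is a far simpler technique than the models real platforms use. The basket data and function names are hypothetical.

```python
from collections import Counter, defaultdict
from itertools import permutations


def build_cooccurrence(baskets):
    """Count how often each ordered pair of items appears in the same basket."""
    pairs = defaultdict(Counter)
    for basket in baskets:
        # sorted(set(...)) removes duplicates and keeps the counting order stable.
        for a, b in permutations(sorted(set(basket)), 2):
            pairs[a][b] += 1
    return pairs


def recommend(item, pairs, top_n=2):
    """Return the items most often bought together with `item`."""
    return [other for other, _ in pairs[item].most_common(top_n)]


# Hypothetical purchase history.
baskets = [
    ["dress", "belt", "heels"],
    ["dress", "belt"],
    ["dress", "heels", "clutch"],
    ["dress", "belt", "scarf"],
    ["jeans", "belt"],
]
pairs = build_cooccurrence(baskets)
print(recommend("dress", pairs))  # → ['belt', 'heels']
```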

Adapting to the Future of Retail in Supply Chain

To stay competitive, retail supply chains must embrace technologies like IoT, AI, and machine learning. IoT enables real-time tracking and monitoring, optimizing inventory management and logistics, while AI-driven analytics improve forecasting and operational efficiency. These advancements help retailers meet customer demands by ensuring timely deliveries and minimizing stockouts.

Additionally, future-ready supply chains require enhanced collaboration and agility. Strengthening partnerships with suppliers and logistics providers through data-driven insights allows for better synchronization and responsiveness to market changes. This approach leads to more effective inventory management and a more adaptable supply chain, crucial for delivering exceptional customer experiences in a dynamic retail landscape.

Best Practices and Trends in Machine Learning for Product Engineering

Understanding the Intersection of Machine Learning and Product Engineering

Machine learning (ML) and product engineering are converging in transformative ways, revolutionizing traditional methodologies. At the intersection of these fields, AI-driven machine learning is automating complex tasks, optimizing processes, and enhancing decision-making. Product engineering, once heavily reliant on manual analysis and design, now leverages ML algorithms to predict outcomes, identify patterns, and improve efficiency. This synergy enables engineers to create more innovative, reliable, and cost-effective products.

For example, in the automotive industry, ML is utilized to enhance the engineering of self-driving cars. Traditional product engineering methods struggled with the vast array of data from sensors and cameras. By integrating machine learning, engineers can now process this data in real-time, allowing the vehicle to make split-second decisions. This not only improves the safety and functionality of self-driving cars but also accelerates development cycles, ensuring that advancements reach the market faster.

Current Trends in AI Applications for Product Development

1. Ethical AI:

Ethical AI focuses on ensuring that artificial intelligence systems operate within moral and legal boundaries. As AI becomes more integrated into product development, it’s crucial to address issues related to bias, fairness, and transparency. Ethical AI aims to create systems that respect user privacy, provide equal treatment, and are accountable for their decisions. Implementing ethical guidelines helps in building trust with users and mitigating risks associated with unintended consequences of AI technologies.

2. Conversational AI:

Conversational AI utilizes natural language processing (NLP) and machine learning to enable machines to comprehend and interact with human language naturally. This technology underpins chatbots and virtual assistants, facilitating real-time, context-aware responses. In product development, conversational AI enhances customer support, optimizes user interactions, and delivers personalized recommendations, resulting in more engaging and intuitive user experiences.

3. Evolving AI Regulation:

Evolving AI regulations are shaping product development by establishing standards for the responsible use of artificial intelligence. As AI technology advances, regulatory frameworks are being updated to address emerging ethical concerns, such as data privacy, bias, and transparency. These regulations ensure that AI systems are developed and deployed with safety and accountability in mind. For product development, adhering to these evolving standards is crucial for navigating legal requirements, mitigating risks, and fostering ethical practices, ultimately helping companies build trustworthy and compliant AI-driven products.

4. Multimodality:

Multimodality involves combining various types of data inputs—such as text, voice, and visual information—to create more sophisticated and effective AI systems. By integrating these diverse data sources, multimodal AI can enhance user interactions, offering richer and more contextually aware experiences. For instance, a product might utilize both voice commands and visual recognition to provide more intuitive controls and feedback.

In product development, this approach leads to improved usability and functionality. The integration of multiple data forms allows for a more seamless and engaging user experience, as it caters to different interaction preferences. By leveraging multimodal AI, companies can develop products that are not only more responsive but also better aligned with the diverse needs and behaviors of their users.

5. Predictive AI Analytics:

Predictive AI analytics employs machine learning algorithms to find patterns in historical data and use them to forecast future trends or behaviors. In product development, predictive analytics is invaluable for anticipating user needs, refining product features, and making informed, data-driven decisions.

By harnessing these insights, companies can significantly enhance product performance and streamline development processes. Predictive analytics allows for proactive adjustments and improvements, leading to reduced costs and increased efficiency. Moreover, by addressing potential issues and seizing opportunities before they arise, companies can boost user satisfaction and deliver products that better meet customer expectations.

6. AI Chatbots:

In product development, chatbots play a crucial role by enhancing user interaction and streamlining support processes. By integrating chatbots into customer service systems, companies can offer instant, accurate responses to user queries, manage routine tasks, and provide 24/7 support. This automation not only speeds up response times but also improves service efficiency and personalization, allowing businesses to address user needs more effectively. Additionally, chatbots can gather valuable data on user preferences and issues, which can inform product improvements and development strategies.
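
As a small illustration of the predictive analytics trend (item 5), the sketch below fits a straight line to past observations with ordinary least squares and extrapolates one step ahead. This is the simplest possible forecasting model, chosen only to show the idea. The function names and the usage figures are assumptions.

```python
def fit_trend(values):
    """Fit y = slope * x + intercept by least squares, with x = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return slope, intercept


def forecast(values, steps_ahead=1):
    """Extrapolate the fitted line `steps_ahead` periods past the last value."""
    slope, intercept = fit_trend(values)
    return slope * (len(values) - 1 + steps_ahead) + intercept


# Hypothetical weekly active users for a product feature.
weekly_users = [100, 110, 120, 130]
print(forecast(weekly_users))  # → 140.0
```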

Implementing Machine Learning for Enhanced Product Design

Implementing machine learning in product design involves using advanced algorithms and data insights to enhance and innovate design processes. By analyzing large datasets, machine learning can reveal patterns and trends that improve design choices, automate tasks, and generate new ideas based on user feedback and usage data.

To integrate machine learning effectively, it’s essential to choose the right models for your design goals, ensure data quality, and work with cross-functional teams. Continuously refining these models based on real-world performance and user feedback will help achieve iterative improvements and maintain a competitive edge.

Future Outlook: The Role of Machine Learning in Product Innovation

The role of machine learning in future product innovation is poised for transformative change. As AI technologies advance, they will introduce more intelligent features that can adapt and respond to user behavior. Future innovations could lead to products that not only anticipate user needs but also adjust their functionalities dynamically, providing a more personalized and efficient experience.

Looking ahead, breakthroughs in AI, such as more advanced generative models and refined predictive analytics, will redefine product development. These advancements will allow companies to design products with enhanced capabilities and greater responsiveness to user preferences. By embracing these cutting-edge technologies, businesses will be well-positioned to push the boundaries of innovation, setting new standards and unlocking novel opportunities in their product offerings.

Top 5 Advantages of Generative AI in the Hospitality Industry

In the rapidly evolving hospitality industry, staying competitive requires adopting advanced technologies. Leading this shift are Generative AI (GenAI) and Amazon Web Services (AWS). By automating customer interactions and anticipating guest preferences, GenAI transforms how hotels, resorts, and other venues engage with guests, ensuring more personalized and memorable stays.

AWS enhances GenAI’s capabilities by providing scalable and secure infrastructure essential for sophisticated AI applications. Its comprehensive cloud services enable hospitality businesses to seamlessly manage large volumes of data, offering real-time analytics and valuable insights. This synergy between GenAI and AWS boosts operational efficiency and drives innovation, allowing the industry to swiftly adapt to changing market demands and guest needs. Together, these technologies redefine traditional practices, setting new standards for a dynamic, future-focused industry.

Customer Service through AI-Powered Chatbots: Leveraging Amazon Connect

In the competitive hospitality sector, delivering outstanding customer service is crucial. Generative AI (GenAI) chatbots, particularly when paired with Amazon Connect, are revolutionizing guest interactions. These AI-powered chatbots provide instant, personalized responses to various inquiries and booking requests, streamlining operations and improving service efficiency.

Amazon Connect, AWS’s cloud-based contact center service, integrates seamlessly with GenAI, managing high volumes of guest interactions with ease. This technology ensures guests receive timely and relevant assistance, reducing the load on human staff and enhancing the overall guest experience.

Real-world applications demonstrate the effectiveness of these technologies. For instance, hotels use GenAI chatbots for automated bookings, Hilton piloted “Connie,” a robot concierge that offers guests personalized recommendations, and Airbnb’s chatbots enable swift issue resolution. By leveraging GenAI and Amazon Connect, hospitality businesses enhance guest satisfaction, build loyalty, and secure a competitive advantage.

Predictive Analytics for Revenue Optimization

Generative AI (GenAI) leverages predictive analytics to transform revenue optimization. By analyzing historical data and market trends, GenAI forecasts demand with high accuracy. This capability allows businesses to anticipate fluctuations in guest volume, leading to more informed and strategic pricing decisions that maximize revenue potential while staying competitive.

In addition to optimizing pricing, GenAI enhances inventory management by predicting occupancy rates and booking patterns. This foresight helps prevent issues such as overbooking and underutilization, ensuring that resources are allocated efficiently.

The integration of predictive analytics into revenue management not only improves financial outcomes but also boosts operational efficiency. By making data-driven decisions, hospitality providers can better meet guest needs, streamline operations, and increase profitability, positioning themselves advantageously in a competitive market.
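
To show how a demand forecast can feed a pricing decision, here is a deliberately simple Python sketch. It assumes an occupancy forecast is already available and applies a hypothetical linear rule with a floor and ceiling. The rule, the parameter names, and every number are illustrative assumptions, not a description of how any revenue management system works.

```python
def suggest_rate(base_rate, forecast_occupancy, target_occupancy=0.75,
                 sensitivity=0.8, floor=0.7, ceiling=1.5):
    """Scale the base rate up or down according to forecast occupancy.

    Assumed rule: the price multiplier moves linearly with the gap between
    forecast and target occupancy, then is clamped to [floor, ceiling].
    """
    if not 0.0 <= forecast_occupancy <= 1.0:
        raise ValueError("forecast_occupancy must be between 0 and 1")
    multiplier = 1.0 + sensitivity * (forecast_occupancy - target_occupancy)
    multiplier = max(floor, min(ceiling, multiplier))
    return round(base_rate * multiplier, 2)


print(suggest_rate(200, 0.95))  # high forecast demand  → 232.0
print(suggest_rate(200, 0.40))  # soft forecast demand  → 144.0
```

The clamp keeps a single unusual forecast from pushing the rate to an extreme, which is one way to stay competitive while still reacting to demand.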

Advanced Security Measures

Generative AI (GenAI) significantly enhances security in the hospitality industry by supporting more sophisticated security protocols. Its ability to analyze large volumes of data in real time allows for the detection of anomalies that could indicate potential security threats. This proactive capability ensures that unusual patterns are identified and addressed promptly, safeguarding sensitive guest information.

In addition to anomaly detection, GenAI excels in automating threat responses, which is crucial for maintaining high security standards. When a potential threat is detected, GenAI swiftly activates predefined security protocols, minimizing response time and reducing the risk of escalation. This automation not only increases the efficiency of threat management but also decreases the chances of human error, further protecting guest data.

Moreover, GenAI supports compliance with stringent privacy regulations by continuously monitoring and adapting data security practices. It ensures that hospitality businesses adhere to legal standards and protect guest privacy effectively. This ongoing compliance support is vital for maintaining guest trust and avoiding legal and financial repercussions, ensuring that security measures are both reliable and compliant.

Sustainable Practices and Resource Optimization

Generative AI (GenAI) is instrumental in promoting sustainable practices within the hospitality sector by improving resource management. By analyzing energy and water usage data, GenAI helps businesses identify inefficiencies and implement targeted solutions. This data-driven approach allows hotels and resorts to manage resources more effectively, thereby lowering their environmental impact.

Beyond optimizing resource use, GenAI also reduces operational waste. By forecasting demand and managing inventory more precisely, GenAI minimizes excess supplies and waste. This proactive strategy ensures efficient resource use and less waste generation.

Moreover, integrating GenAI into sustainability initiatives supports green practices and aligns with global environmental objectives. By embracing these technologies, hospitality businesses can enhance their environmental responsibility, achieve cost savings, and attract eco-conscious guests. This dedication to sustainability benefits the environment and bolsters the business’s reputation and competitiveness.

Personalized Guest Experiences

Generative AI (GenAI) is transforming the hospitality industry by delivering highly personalized guest experiences. By analyzing vast amounts of data on guest preferences and behaviors, GenAI can generate tailored recommendations for dining, entertainment, and other services. This level of customization ensures that each guest feels uniquely valued and catered to, significantly enhancing their overall experience. The ability to provide such bespoke services not only delights guests but also sets a hotel or resort apart in a competitive market.

These personalized recommendations extend beyond basic services, delving into the nuanced needs and desires of each guest. For example, a GenAI system might suggest specific room settings, preferred dining times, or custom spa treatments based on previous stays or stated preferences. This deep understanding and anticipation of guest needs foster a more intimate and satisfying stay. As a result, guests are more likely to return, knowing that their unique preferences will be remembered and accommodated.

Furthermore, GenAI’s ability to continuously learn and adapt means that the personalization improves with each interaction. As more data is collected, the system becomes more adept at predicting and meeting guest needs, leading to even higher levels of satisfaction and loyalty. This ongoing enhancement of the guest experience not only drives repeat business but also encourages positive reviews and recommendations, thereby attracting new customers and sustaining growth in the hospitality industry.

Future Trends: The Evolution of GenAI in Hospitality

The future of Generative AI (GenAI) in hospitality promises further advancements in personalized guest experiences and operational efficiency. AI-driven chatbots are expected to become even more sophisticated, offering deeper personalization and more intuitive interactions. Integration with IoT devices will enable seamless automation of guest services, enhancing comfort and convenience. Moreover, predictive analytics will refine revenue management and inventory control, while AI-powered security measures will improve guest safety. As GenAI evolves, it will revolutionize the hospitality industry, setting new standards for innovation and excellence.

Embracing Technology for Sustainable Growth

Embracing GenAI and Amazon Connect is essential for sustainable growth in the hospitality industry. These technologies streamline operations, enhance customer service, and provide personalized guest experiences. By automating routine tasks and managing high volumes of interactions efficiently, hospitality businesses can improve operational efficiency and guest satisfaction.

Integrating advanced technologies like GenAI and Amazon Connect positions hospitality providers at the forefront of innovation. This adoption not only meets the evolving expectations of tech-savvy guests but also fosters loyalty and a competitive edge, ensuring long-term success and growth.

The Role of Generative AI in IoT-Driven Retail Analytics

The retail industry is evolving alongside technological advancements. Two key technologies driving this evolution are Generative AI and the Internet of Things (IoT). When these technologies are combined, they provide powerful capabilities in retail analytics, enabling businesses to make informed decisions based on data and elevate customer experiences. Generative AI and IoT integration revolutionizes the retail landscape by providing valuable insights into consumer behaviors and optimizing inventory management. Through this combination, retailers can implement personalized marketing strategies and enhance operational efficiency, helping them navigate a complex market and stay ahead in a competitive industry.

Personalizing Customer Experiences with Generative AI

Generative AI transforms retail customer experiences by delivering highly personalized interactions. By analyzing data from IoT devices like smart shelves, AI gains insights into individual preferences and behaviors. For instance, smart shelves detect product interest, allowing AI to generate tailored recommendations and promotions. This personalization makes shopping more engaging, fostering customer loyalty.

Generative AI enhances real-time interactions through IoT-enabled devices like smart mirrors and kiosks, providing personalized styling tips, product information, and virtual try-ons. AI uses past interactions to ensure relevant suggestions, bridging online and offline shopping for a seamless experience.

Generative AI helps retailers create customized marketing campaigns based on individual customer data. Leveraging IoT insights, AI segments customers and designs targeted promotions, increasing marketing effectiveness and customer satisfaction. Integrating Generative AI and IoT in retail leads to a more personalized, efficient, and enjoyable shopping experience.

Optimizing Inventory Management through IoT and AI Integration

Integrating IoT and Generative AI optimizes inventory management by providing real-time stock visibility. IoT devices, like smart shelves, monitor inventory and send data to a central system. Generative AI analyzes this data to predict demand, considering sales trends and seasonal changes. This helps retailers maintain optimal stock levels, reducing overstock and stockouts.

Generative AI automates replenishment by analyzing IoT inventory data. When stock levels drop, AI automatically places orders with suppliers, ensuring timely restocking. This reduces manual intervention and human error, enhancing operational efficiency and meeting customer demands.
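
The replenishment logic described above can be sketched with the classic reorder-point formula (average daily demand times supplier lead time, plus safety stock). This is a standard inventory rule rather than anything specific to Generative AI. In the scenario described, the demand figure would come from the forecasting model and the on-hand count from smart-shelf data. All names and numbers below are assumptions.

```python
import math


def reorder_point(avg_daily_demand, lead_time_days, safety_stock):
    """Stock level at which a new order should be placed."""
    return math.ceil(avg_daily_demand * lead_time_days + safety_stock)


def replenishment_order(on_hand, avg_daily_demand, lead_time_days,
                        safety_stock, target_days_of_cover):
    """Return the quantity to order, or 0 if stock is above the reorder point."""
    if on_hand > reorder_point(avg_daily_demand, lead_time_days, safety_stock):
        return 0
    target_level = math.ceil(avg_daily_demand * target_days_of_cover + safety_stock)
    return max(0, target_level - on_hand)


# Hypothetical SKU: 12 units/day forecast demand, 5-day supplier lead time.
print(reorder_point(12, 5, 20))                # → 80
print(replenishment_order(75, 12, 5, 20, 14))  # → 113
print(replenishment_order(100, 12, 5, 20, 14)) # → 0
```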

IoT and AI integration streamlines supply chain management. IoT devices provide real-time data on goods’ movement and condition. Generative AI identifies bottlenecks, predicts delays, and optimizes routes. This allows retailers to respond quickly to disruptions, ensuring timely product delivery and balanced inventory, ultimately improving customer satisfaction.

Enhancing Operational Efficiency in Retail

Generative AI and IoT enhance operational efficiency by streamlining supply chain management. IoT devices provide real-time data on the supply chain, which AI analyzes to identify bottlenecks and optimize routes. For instance, AI can suggest alternative routes or suppliers if delays occur, ensuring timely inventory replenishment and minimizing disruptions.

In inventory management, IoT devices track stock levels in real time while AI forecasts future demand based on historical data and trends. This helps maintain optimal inventory levels, reducing both overstock and stockouts. Automated systems can reorder products as needed, ensuring availability and improving efficiency.

Generative AI and IoT also aid in workforce optimization. By analyzing foot traffic and customer behavior, AI can predict busy periods and adjust staffing levels accordingly. This ensures adequate staffing during peak times, enhancing customer service and overall store efficiency. Together, these technologies streamline operations, cut costs, and improve the retail environment.
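
As a rough illustration of the staffing adjustment, the Python sketch below turns an hourly foot-traffic forecast into a headcount. The ratio of visitors per associate, the minimum staffing level, and the forecast values are hypothetical. In practice the forecast would come from a model trained on IoT foot-traffic data.

```python
import math


def staff_needed(expected_visitors, visitors_per_associate=25, minimum_staff=2):
    """Headcount for one hour, never dropping below the minimum staffing level."""
    return max(minimum_staff, math.ceil(expected_visitors / visitors_per_associate))


# Hypothetical hourly foot-traffic forecast.
hourly_forecast = {"10:00": 30, "12:00": 140, "17:00": 95}
schedule = {hour: staff_needed(visitors) for hour, visitors in hourly_forecast.items()}
print(schedule)  # → {'10:00': 2, '12:00': 6, '17:00': 4}
```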

Advanced Analytics for Smarter Retail Strategies

Advanced analytics, driven by Generative AI and IoT, offers retailers valuable insights for refined strategies. By processing data from IoT devices—such as sales and foot traffic—AI reveals trends and patterns that guide strategic decisions. This helps retailers optimize product placements and tailor offerings to better meet customer demands.

Generative AI boosts predictive analytics by forecasting future trends from historical and real-time data. This foresight helps retailers manage inventory, adjust marketing strategies, and prevent overstocking or stockouts, ensuring they meet customer needs efficiently and profitably.

Additionally, advanced analytics allows for precise customer segmentation and targeting. Leveraging IoT and AI insights, retailers can design highly personalized marketing campaigns. This targeted approach enhances campaign relevance, increases engagement, and improves overall effectiveness, leading to greater customer satisfaction and loyalty.

Overcoming Challenges and Looking Ahead

Integrating Generative AI and IoT in retail presents data privacy and security challenges. Retailers must adopt strong cybersecurity practices and comply with regulations like GDPR to protect customer information. Measures such as encryption, secure access controls, and regular audits are vital to safeguarding data and maintaining customer trust.

Another challenge is integrating new technologies with existing systems. Retailers should invest in compatible solutions, provide thorough staff training, and work closely with technology providers. Effective management of these aspects will ensure seamless technology adoption, enabling advancements in personalization, inventory management, operational efficiency, and analytics.

In a nutshell, the integration of Generative AI and IoT is revolutionizing retail by boosting customer personalization and streamlining operations. This combination allows for customized interactions, automated inventory management, optimized supply chains, and advanced analytics. Despite challenges such as data privacy and system integration, addressing these issues through effective management and compliance can unlock the full potential of these technologies. Embracing Generative AI and IoT will help retailers innovate, enhance customer experiences, and thrive in a competitive market.

AWS Serverless Services: Transforming Modern Application Development

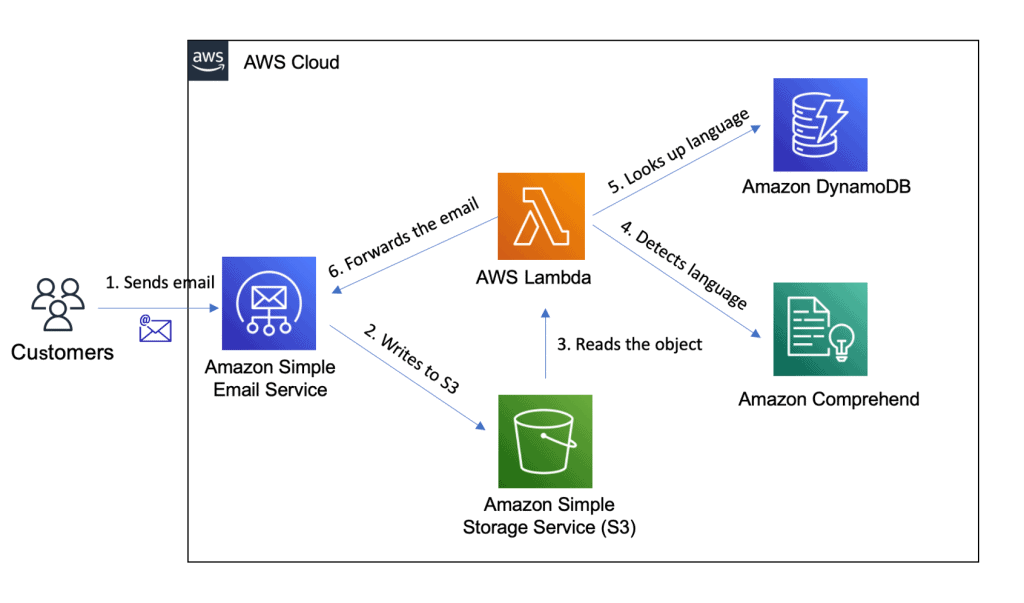

AWS provides a powerful suite of serverless services designed to simplify application development by removing the need for server management. Central to this suite is AWS Lambda, which allows you to execute code in response to events without provisioning or managing servers. Complementing Lambda is Amazon API Gateway, which enables you to create, publish, and manage APIs, providing a seamless interface for serverless applications. For data management, Amazon DynamoDB offers a fully managed, scalable NoSQL database that integrates effortlessly with other serverless components.

Additionally, AWS Step Functions orchestrates complex workflows by coordinating Lambda functions and other AWS services, while Amazon EventBridge facilitates real-time event routing, enabling applications to respond to changes and triggers efficiently. Together, these services create a robust framework for building highly scalable, efficient, and cost-effective applications, significantly reducing operational overhead and accelerating time-to-market.

AWS Serverless Services and Modern Development

In today’s tech landscape, the ability to quickly develop, deploy, and scale applications is essential. AWS serverless services have changed modern application development by letting developers focus on writing code rather than managing the underlying infrastructure.

Services like AWS Lambda, Amazon API Gateway, and Amazon DynamoDB enable developers to build and run applications and services without dealing with servers. This shift simplifies the development process and unlocks new opportunities for innovation and agility. Serverless computing is designed to efficiently handle everything from microservices and backend systems to complex event-driven architectures, making it an ideal solution for modern applications that require flexibility and efficiency.

Essential AWS Serverless Components for Modern Applications

AWS provides a robust set of serverless tools essential for developing modern applications with efficiency and scalability. Central to this toolkit is AWS Lambda, a versatile compute service that runs code in response to events, removing the need for server maintenance. Lambda’s ability to automatically scale with workload changes allows developers to create highly responsive, event-driven applications.

Complementing Lambda is Amazon API Gateway, which simplifies the creation, deployment, and management of secure APIs. It works seamlessly with AWS Lambda, facilitating the development of scalable serverless backends for web and mobile apps. Additionally, Amazon DynamoDB, a fully managed NoSQL database, offers rapid and flexible data storage capable of processing millions of requests per second. Together with services like S3 and Step Functions, these core AWS components enable developers to construct resilient, scalable, and cost-effective applications, driving forward innovation and operational efficiency in modern software development.

Best Services for Modern Developers with AWS

AWS Lambda is a highly versatile, serverless service designed for various applications. It shines in creating event-driven architectures where code execution is triggered by specific events, eliminating the need for manual initiation. For instance, in web and mobile application backends, Lambda efficiently manages tasks such as processing user uploads, handling API requests, and overseeing real-time interactions, all while offering scalable and cost-effective backend management without server maintenance.

Lambda is also adept at real-time data processing, allowing businesses to swiftly process and analyze data while scaling automatically to accommodate fluctuating data volumes. IT operations teams benefit from Lambda by automating routine tasks like backups, resource management, and infrastructure updates, which minimizes manual effort and improves reliability. It excels in event-driven computing, seamlessly processing events triggered by changes in other AWS services.

For businesses, particularly startups and those with variable workloads, Lambda provides a scalable, cost-effective solution for application development, efficiently managing traffic spikes through its pay-as-you-go pricing model.
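
To make the event-driven model concrete, here is a minimal sketch of a Python Lambda function reacting to an S3 upload notification. The function name `handler` and the shape of the returned summary are illustrative assumptions; the event fields follow the S3 notification format, in which object keys arrive URL-encoded.

```python
from urllib.parse import unquote_plus


def handler(event, context):
    """Summarize the objects referenced in an S3 upload notification."""
    uploads = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        uploads.append({
            "bucket": s3_info["bucket"]["name"],
            # S3 URL-encodes keys in notifications (spaces arrive as '+').
            "key": unquote_plus(s3_info["object"]["key"]),
            "size": s3_info["object"].get("size", 0),
        })
    # A real function would resize an image, index a document, etc. here.
    return {"processed": len(uploads), "uploads": uploads}
```

Because the function only reads the event it is given, it can be exercised locally by passing a hand-built dictionary before it is ever deployed.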

Amazon API Gateway is a fully managed service that enables developers to create, deploy, and manage APIs by defining endpoints and methods like GET, POST, PUT, and DELETE. It integrates with backend services, such as AWS Lambda, to process requests and manage traffic efficiently. API Gateway offers built-in security features, including API keys and authentication, and supports throttling to prevent abuse. It also integrates with Amazon CloudWatch for monitoring performance and tracking usage.

By simplifying API management, it lets developers focus on application logic rather than infrastructure. It handles high traffic volumes automatically, supports various integrations, and provides a secure, centralized way to expose backend services for mobile and web applications. For enterprises with complex architectures, it efficiently manages and orchestrates multiple APIs, ensuring scalable and secure integration of backend services.
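
As a rough illustration of how API Gateway and Lambda fit together, the sketch below shows a Lambda function behind a REST API that uses the Lambda proxy integration. The routing logic and response messages are assumptions made for the example; the event fields (`httpMethod`, `queryStringParameters`, `body`) and the response shape (`statusCode`, `headers`, string `body`) are the ones that integration type uses.

```python
import json


def handler(event, context):
    """Handle a REST API request forwarded by API Gateway (Lambda proxy integration)."""
    method = event.get("httpMethod", "")
    # queryStringParameters is None (not {}) when the request has no query string.
    params = event.get("queryStringParameters") or {}

    if method == "GET":
        status, payload = 200, {"message": f"Hello, {params.get('name', 'world')}!"}
    elif method == "POST":
        try:
            body = json.loads(event.get("body") or "{}")
            status, payload = 201, {"received": body}
        except json.JSONDecodeError:
            status, payload = 400, {"error": "Request body must be valid JSON"}
    else:
        status, payload = 405, {"error": f"Method {method} not allowed"}

    # Proxy integrations expect statusCode, headers, and a string body.
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }
```

Throttling, API keys, and authentication are configured on the API Gateway side, so the function itself stays focused on application logic.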

Amazon SNS (Simple Notification Service) is a key tool for delivering timely information and notifications to users or systems. To use SNS, a user first creates a topic, which serves as a central communication hub. This topic can have multiple subscribers, such as email addresses, phone numbers, or applications, that opt in to receive notifications. When an event occurs or there’s information to share, a message is published to the SNS topic, which then automatically delivers it to all subscribers through their chosen communication channels, like email, SMS, or HTTP endpoints.

SNS simplifies notification management for various users. Businesses use SNS to update customers on promotions and system alerts, boosting engagement with timely information. Developers and IT teams employ SNS to create event-driven applications and automate workflows. System administrators rely on it for performance alerts and quick issue resolution. Product and service providers use SNS to scale communications and deliver real-time updates, while emergency services leverage it to disseminate critical information quickly. Overall, SNS efficiently handles notifications and improves operational workflows.
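
The topic, subscribe, and publish flow described above can be sketched in a few lines. The helper name `notify_subscribers` and its arguments are hypothetical; the `sns` parameter is assumed to be an object with the same `create_topic`, `subscribe`, and `publish` methods as the boto3 SNS client, which in an AWS environment would typically be created with `boto3.client("sns")`.

```python
def notify_subscribers(sns, topic_name, email, subject, message):
    """Create (or reuse) a topic, subscribe an email address, and publish a message.

    `sns` is any object exposing the boto3 SNS client methods used below.
    """
    # create_topic is idempotent: it returns the existing ARN if the topic exists.
    topic_arn = sns.create_topic(Name=topic_name)["TopicArn"]
    # Email subscribers must confirm via a link before they receive messages.
    sns.subscribe(TopicArn=topic_arn, Protocol="email", Endpoint=email)
    response = sns.publish(TopicArn=topic_arn, Subject=subject, Message=message)
    return response["MessageId"]
```

Passing the client in as a parameter keeps the example free of credentials and lets it be tried against a stand-in object before touching a real account.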

Amazon DynamoDB is a NoSQL database service designed for high performance and scalability. It organizes data into tables and supports flexible querying. DynamoDB automatically handles scaling based on traffic, ensuring consistent performance even during spikes. It provides low-latency data access and integrates with other AWS services like Lambda for real-time data processing and analytics.

Startups and enterprises with applications requiring rapid, scalable data access benefit greatly from DynamoDB. E-commerce platforms, gaming companies, and IoT applications use DynamoDB to manage large volumes of user data and transactions efficiently. Its automatic scaling and low-latency performance help these businesses maintain responsiveness and reliability, crucial for enhancing user experience and operational efficiency.
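
As a small illustration of how data is organized into tables, the sketch below stores and retrieves an order by its primary key. The attribute names (`customer_id`, `order_id`, `total`) and helper names are hypothetical; `table` is assumed to behave like a boto3 DynamoDB `Table` resource, whose `put_item` and `get_item` methods are used here.

```python
def save_order(table, customer_id, order_id, total):
    """Store one order, keyed by customer (partition key) and order (sort key)."""
    item = {
        "customer_id": customer_id,  # hypothetical partition key
        "order_id": order_id,        # hypothetical sort key
        # The boto3 resource API does not accept Python floats;
        # Decimal is the usual alternative, a string keeps this sketch simple.
        "total": str(total),
    }
    table.put_item(Item=item)
    return item


def load_order(table, customer_id, order_id):
    """Fetch one order by its full primary key; returns None if it does not exist."""
    response = table.get_item(Key={"customer_id": customer_id, "order_id": order_id})
    # get_item omits the "Item" key entirely when nothing matches.
    return response.get("Item")
```

Choosing a partition key with many distinct values, such as a customer identifier, is one common way to spread traffic evenly as request volume grows.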

Amazon S3 manages data using “buckets,” where users can upload, download, and organize files through a web interface or API. It automatically replicates data across multiple locations to ensure durability and high availability, protecting against potential data loss. Users can access their files from any internet-enabled device and customize permissions to maintain data security.

Amazon S3 provides significant advantages for businesses, developers, and organizations. Companies use S3 for scalable storage, reliable backups, and efficient data archiving. Developers depend on it for managing assets such as images and videos. Its robust durability and scalability support a variety of applications, from website hosting and data analytics to comprehensive data management, making it a versatile and valuable resource.

Future Trends: AWS Serverless and the Evolution of Modern Development

The future of AWS serverless computing is poised to further revolutionize modern development with advanced capabilities and greater integration. As serverless technology evolves, we can expect enhanced support for microservices architectures, allowing developers to build more modular and scalable applications. Innovations such as improved integration with machine learning and artificial intelligence services will enable more sophisticated and intelligent applications with minimal infrastructure management.

Additionally, the trend towards improved developer experience will continue, with better tooling and automation for deployment, monitoring, and debugging. Serverless services will likely incorporate more advanced features for security and compliance, streamlining regulatory requirements. As businesses increasingly adopt serverless architectures, the focus will shift towards optimizing costs and improving performance, reinforcing the role of serverless computing in driving agility and efficiency in software development.

In a nutshell, startups and SMEs gain substantial benefits from AWS serverless services by simplifying application management and scaling. Serverless computing eliminates the need for server provisioning and maintenance, allowing these businesses to focus on developing and scaling their applications without the complexities of managing infrastructure. This streamlines operations and accelerates time-to-market for new features and products, providing a significant advantage for smaller companies looking to innovate quickly.

Additionally, the pay-as-you-go pricing model of AWS serverless services ensures that startups and SMEs only incur costs based on actual resource usage, avoiding expenses related to idle server time. Services like AWS Lambda, API Gateway, and DynamoDB offer automatic scaling and high availability, allowing businesses to handle varying workloads seamlessly and maintain a consistent user experience. This combination of cost efficiency, scalability, and reliability enables startups and SMEs to grow and adapt while optimizing their operational costs.

Digital Twins and IoT: Powering Smart Innovations

Imagine a bustling city where technology weaves an invisible web, responding to every citizen’s need with effortless precision. Traffic lights adjust intuitively, easing congestion before it forms. Energy grids anticipate demand spikes, seamlessly balancing supply to prevent outages. Public transport flows smoothly, routes adjusting in real-time to optimize commuter journeys. How is this possible? Meet the digital twins—virtual replicas of our physical world, meticulously crafted to mirror every detail.

Yet, these twins are not mere mirrors. They’re evolving with the help of Generative AI, transforming from static copies into dynamic problem-solvers. In high-tech factories, they predict machinery issues before they disrupt production, suggesting improvements that boost efficiency day by day. Across sprawling logistics networks, they forecast traffic and weather, guiding shipments to their destinations swiftly and on schedule.

This isn’t just progress; it’s a revolution. As Generative AI and digital twins integrate deeper into our lives, from city planning to healthcare, they’re reshaping industries with unprecedented innovation and operational prowess.

Integrating Generative AI with Digital Twins

Understanding the dynamics of IoT involves recognizing how integrating Generative AI with Digital Twins marks a profound transformation. Traditionally adept at real-time monitoring and simulation of physical assets or processes, Digital Twins now evolve into proactive decision-makers with the infusion of Generative AI.

Generative AI enhances Digital Twins by predicting behaviors and optimizing operations through comprehensive analysis of IoT-generated data. Imagine a manufacturing facility where Generative AI-powered Digital Twins not only replicate production lines but also predict maintenance needs and suggest process improvements autonomously. This collaboration significantly boosts operational efficiency by preemptively addressing challenges and optimizing resource usage.

This evolution represents more than just technical advancement; it marks a paradigm shift in how industries harness IoT capabilities. By leveraging Generative AI-enhanced Digital Twins, businesses can achieve unprecedented levels of efficiency and innovation. This advancement promises smarter, more adaptable systems within the IoT landscape, paving the way for transformative breakthroughs across diverse sectors.

Digital Twins in Action: Optimizing IoT Operations

Digital Twins are essential in IoT for enhancing operational efficiency across industries by replicating physical assets and systems, enabling real-time data simulation and insights. For example, in smart cities, Digital Twins adjust traffic flow using live IoT sensor data, optimizing urban mobility. In healthcare, they use predictive analytics to simulate patient scenarios, improving treatment and equipment maintenance. This proactive use minimizes downtime and maximizes resource efficiency, reducing costs and enhancing operations.

As IoT evolves and Digital Twins become more advanced, industries stand to benefit from significant innovations in efficiency and productivity. These integrated technologies promise transformative impacts, driving operational excellence across diverse sectors and paving the way for future advancements in IoT-driven solutions.

Smart Cities: Harmony Through Digital Twins

In the context of smart cities, digital twins revolutionize urban management by acting as virtual replicas of the city’s physical infrastructure. These digital counterparts meticulously simulate and monitor various aspects such as traffic patterns, energy usage, and public services like transportation. Powered by real-time data streamed from IoT sensors embedded throughout the city, digital twins facilitate agile decision-making and operational optimizations. For example, they can dynamically adjust traffic signal timings to alleviate congestion or reroute energy distribution to minimize waste. This proactive approach not only enhances urban efficiency and resource utilization but also improves the overall quality of life for residents. By integrating digital twins into urban planning and management, smart cities pave the way for sustainable growth and innovation, setting new standards for urban development in the digital age.
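
To give a feel for the traffic-signal example, here is a deliberately simplified sketch of a digital twin for one intersection. Everything in it is a hypothetical illustration rather than how any particular city platform works: the class name `IntersectionTwin`, the 120-second cycle, the 15-second minimum green, and the rule of splitting green time in proportion to queue length are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class IntersectionTwin:
    """Virtual model of one intersection, updated from (hypothetical) IoT vehicle counters."""
    cycle_seconds: int = 120   # total green time to share per signal cycle
    min_green: int = 15        # floor so no approach is starved
    queues: dict = field(default_factory=dict)

    def ingest(self, approach, vehicles_waiting):
        """Mirror the latest sensor reading for one approach (e.g. 'north')."""
        self.queues[approach] = max(0, vehicles_waiting)

    def green_times(self):
        """Split the cycle in proportion to queue length, respecting min_green."""
        if not self.queues:
            return {}
        spare = self.cycle_seconds - self.min_green * len(self.queues)
        total = sum(self.queues.values())
        plan = {}
        for approach, waiting in self.queues.items():
            # With no traffic anywhere, fall back to an even split.
            share = waiting / total if total else 1 / len(self.queues)
            plan[approach] = self.min_green + round(spare * share)
        return plan
```

A production twin would model far more (pedestrian phases, neighboring intersections, safety constraints), but the loop is the same: sensor readings update the virtual state, and the virtual state drives the next control decision.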

Predictive Insights: Leveraging Digital Twins in IoT Environments

In the world of IoT, Digital Twins emerge as powerful tools for predictive analytics, revolutionizing how industries optimize operations. These virtual counterparts of physical assets or processes continuously collect and analyze real-time data from IoT sensors. By harnessing this data, Digital Twins simulate various scenarios, predict future behaviors, and recommend proactive measures to enhance efficiency and performance.

Imagine a manufacturing plant where Digital Twins anticipate machinery failures before they occur, allowing for preemptive maintenance and minimizing production disruptions. In urban planning, Digital Twins can forecast traffic patterns based on historical and current data, facilitating better city management strategies. This predictive capability not only optimizes resource allocation but also fosters smarter decision-making across sectors, driving continuous improvement and innovation in IoT-enabled environments.
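
One simple way to approximate the "anticipate failures" idea is to flag sensor readings that stray far from a recent baseline. The sketch below uses a rolling z-score, which is a basic statistical technique chosen for illustration and not a claim about how any specific digital twin product predicts failures; the class name `VibrationMonitor`, the window size, and the threshold are assumptions.

```python
from collections import deque
from statistics import mean, stdev


class VibrationMonitor:
    """Flags sensor readings that deviate sharply from the recent baseline."""

    def __init__(self, window=20, threshold=3.0):
        self.history = deque(maxlen=window)  # most recent normal readings
        self.threshold = threshold           # z-score above which we raise a flag

    def observe(self, reading):
        """Return True if `reading` looks anomalous relative to the window."""
        anomalous = False
        if len(self.history) >= 5:  # wait for a minimal baseline
            mu, sigma = mean(self.history), stdev(self.history)
            if sigma > 0 and abs(reading - mu) / sigma > self.threshold:
                anomalous = True
        if not anomalous:
            # Keep outliers out of the baseline so they do not mask later faults.
            self.history.append(reading)
        return anomalous
```

In practice a flagged reading would feed a maintenance workflow, for example opening a work order, rather than simply returning a boolean.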

Applications of Digital Twins in IoT

Digital Twins are spearheading a transformative wave across industries within the IoT ecosystem. These virtual replicas of physical entities enable businesses to simulate real-world scenarios and optimize operations with unprecedented precision. In manufacturing, Digital Twins facilitate predictive maintenance, foreseeing equipment failures and optimizing production schedules to minimize downtime.

Moreover, in healthcare, Digital Twins simulate patient conditions to personalize treatment plans and predict health outcomes. Beyond these applications, Digital Twins are reshaping urban planning by modeling infrastructure performance and traffic flow, leading to more efficient city designs and management strategies. By leveraging Digital Twins, industries can achieve higher operational efficiency, reduced costs, and enhanced innovation, setting new benchmarks for performance and sustainability in the IoT era.

Implementing AI-driven Digital Twins in IoT

Implementing AI-driven Digital Twins in IoT environments presents both challenges and promising solutions. One major hurdle is the complexity of integrating diverse data streams from IoT devices into cohesive digital replicas. Ensuring seamless synchronization and real-time data processing is crucial for accurate predictive modeling and decision-making.

Moreover, maintaining data security and privacy while managing large volumes of sensitive information remains a critical concern. Solutions to these challenges include leveraging advanced AI algorithms for data fusion and anomaly detection, enhancing system interoperability through standardized protocols, and implementing robust cybersecurity measures to safeguard data integrity.

Successfully navigating these challenges enables businesses to harness the full potential of AI-driven Digital Twins. They empower organizations to achieve operational efficiencies, predictive insights, and innovation across sectors, shaping the future of IoT applications with intelligent and responsive systems.

IoT is undeniably the backbone of digital twins, forming the essential foundation upon which these advanced systems are built. Through the vast network of interconnected devices, IoT provides the real-time data necessary for creating accurate and dynamic digital replicas. This continuous data flow is crucial for the functionality of digital twins, as it allows for constant monitoring and updating of physical assets.

Advanced data analytics and AI utilize this data to generate actionable insights and predictive maintenance strategies. Cloud computing ensures seamless storage and processing of vast amounts of data, enabling real-time decision-making. By recognizing IoT as the core component, we acknowledge its pivotal role in harmonizing various technologies. This integration empowers digital twins to drive efficiency, innovation, and transformation across multiple industries. Thus, IoT stands as the cornerstone, unlocking the full potential of digital twin technology.

The Role of Amazon SageMaker in Advancing Generative AI

Amazon SageMaker is a powerful, cloud-based platform designed to make machine learning (ML) and generative AI accessible and efficient for developers. It streamlines the entire ML process, from creation and training to deployment of models, whether in the cloud, on embedded systems, or edge devices. SageMaker is a fully managed service, providing an integrated development environment (IDE) complete with a suite of tools like notebooks, debuggers, profilers, pipelines, and MLOps, facilitating scalable ML and generative AI model building and deployment.

Governance is simplified with easy access control and project transparency, ensuring secure and compliant workflows. Additionally, SageMaker offers robust tools for creating, fine-tuning, and deploying foundation models (FMs). It also provides access to hundreds of pretrained models, including publicly available FMs and generative AI models, which can be deployed with just a few clicks, making advanced ML and AI capabilities more accessible than ever. With foundation models, developers can leverage pretrained, highly sophisticated models, significantly reducing the time and resources needed for data preparation, model selection, and training. These models can be fine-tuned with specific datasets to meet unique requirements, allowing for quick and efficient customization. The streamlined development process enhances scalability and reliability, facilitating rapid deployment across cloud, edge, and embedded systems. This integration accelerates innovation and operational efficiency by providing advanced generative AI capabilities without the traditional complexity and effort.

Creating and Training Generative AI Models with SageMaker

Amazon SageMaker simplifies the creation and training of generative AI models with a robust suite of tools and services. Developers and data scientists can use Jupyter notebooks for data preparation and model prototyping, streamlining the development process. SageMaker supports a variety of generative AI techniques, including GANs and VAEs, facilitating experimentation with advanced methods.

The platform’s managed infrastructure optimizes training for scalability and speed, efficiently handling large datasets and complex computations. Distributed training capabilities further enhance performance, reducing the time required for model training. SageMaker also integrates debugging and profiling tools for real-time monitoring and fine-tuning, ensuring optimal model performance. Automated hyperparameter tuning accelerates the optimization process, improving model accuracy and efficiency. With SageMaker, organizations can leverage generative AI to innovate, enhance decision-making, and gain a competitive edge in their industries.

Deployment on the Cloud, Edge, and Embedded Systems

Amazon SageMaker enables versatile deployment of machine learning models across the cloud, edge, and embedded systems with one-click training and deployment. In the cloud, SageMaker ensures scalable, fault-tolerant deployments with managed infrastructure, freeing developers to focus on model performance.

For edge deployments, SageMaker supports real-time inference close to data sources, reducing latency and enabling swift decision-making in applications like IoT and industrial automation. This approach minimizes data transfer costs and enhances privacy by processing data locally.

SageMaker also caters to embedded systems, optimizing models for performance on resource-constrained devices. This capability is crucial for applications in healthcare, consumer electronics, and other sectors requiring efficient use of computational resources. With SageMaker, organizations can seamlessly deploy machine learning models across diverse environments, leveraging its flexibility to drive innovation and operational efficiency.

Integrated Tools for Efficient Model Development

Amazon SageMaker integrates a suite of tools designed to streamline and enhance the process of developing machine learning models. From data preparation to model deployment, SageMaker provides a cohesive environment that includes Jupyter notebooks for prototyping, debugging tools for real-time monitoring, and automated pipelines for seamless workflow management. These integrated tools simplify complex tasks, allowing developers and data scientists to focus more on refining model accuracy and less on managing infrastructure.

Furthermore, SageMaker offers built-in support for version control, collaboration, and model governance, ensuring consistency and transparency throughout the development lifecycle. This comprehensive approach not only accelerates model iteration and deployment but also promotes best practices in machine learning development, ultimately driving greater efficiency and innovation in AI-driven applications.

Ensuring Governance and Security in ML Workflows

As an AWS service, Amazon SageMaker builds on AWS’s governance and security controls across machine learning (ML) workflows. Through AWS Identity and Access Management (IAM), it provides precise control over who can access sensitive data and models, which supports compliance with industry regulations and reduces the risk of unauthorized access.

Additionally, SageMaker employs robust encryption protocols for data both at rest and in transit, safeguarding information integrity throughout the ML lifecycle. AWS Key Management Service (KMS) further enhances security by securely managing encryption keys, reinforcing the protection of ML operations and fostering a secure environment for deploying AI solutions.
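
As an illustration of precise access control, the sketch below builds a least-privilege IAM policy document that allows a caller to invoke a single SageMaker endpoint and nothing else. The helper name, region, account ID, and endpoint name are placeholders invented for the example; the policy structure and the `sagemaker:InvokeEndpoint` action follow the standard IAM policy format.

```python
import json


def endpoint_invoke_policy(region, account_id, endpoint_name):
    """Build an IAM policy that only allows invoking one SageMaker endpoint."""
    endpoint_arn = f"arn:aws:sagemaker:{region}:{account_id}:endpoint/{endpoint_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeSingleEndpoint",
                "Effect": "Allow",
                "Action": "sagemaker:InvokeEndpoint",
                "Resource": endpoint_arn,
            }
        ],
    }


# Placeholder account and endpoint values, for illustration only.
policy = endpoint_invoke_policy("us-east-1", "123456789012", "demo-endpoint")
print(json.dumps(policy, indent=2))
```

Scoping the `Resource` to one endpoint ARN, instead of using a wildcard, is what keeps an application that only needs predictions from reaching training jobs, notebooks, or other models.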

Access to Pretrained Models and Foundation Models

Amazon SageMaker offers developers extensive access to pretrained models and foundation models (FMs), simplifying the integration of advanced AI capabilities into applications. Through SageMaker JumpStart and AWS Marketplace, developers can swiftly deploy pretrained models across diverse fields like natural language processing and computer vision. This streamlines the development process, accelerating the rollout of AI-driven solutions.

Additionally, SageMaker supports deployment of publicly available foundation models (FMs), which are large-scale models trained on extensive datasets. These FMs provide robust starting points for custom model development, allowing organizations to build upon established AI frameworks efficiently. By facilitating access to pretrained and foundation models, SageMaker empowers businesses to innovate rapidly and deploy sophisticated AI functionalities, driving progress across sectors such as healthcare, finance, and retail.

Conclusion

In a nutshell, Amazon SageMaker revolutionizes the machine learning and generative AI landscape by offering a comprehensive, cloud-based platform that simplifies the entire ML workflow. From creation and training to deployment, SageMaker provides robust tools and a fully managed environment, facilitating scalable and efficient model development. With integrated access to foundation and pretrained models, developers can quickly fine-tune and deploy sophisticated AI solutions across cloud, edge, and embedded systems. This streamlined process enhances innovation and operational efficiency, making advanced AI capabilities more accessible and driving progress across various industries.

Top 5 Strategies AWS Partners Use to Leverage AWS Infrastructure for Generative AI

Discover the transformative power of AWS in scaling generative AI. From groundbreaking networking advancements to revolutionary data center strategies, AWS is continuously enhancing its infrastructure. These innovations not only bolster the capability but also redefine the scalability of generative AI solutions. Embrace a future where AWS sets the benchmark in cloud-based technologies, empowering businesses to harness the full potential of artificial intelligence at unprecedented scales.

Generative artificial intelligence (AI) has rapidly revolutionized our world, enabling both individuals and enterprises to enhance decision-making, transform customer experiences, and foster creativity and innovation. However, the robust infrastructure supporting this powerful technology is the culmination of years of innovation. This sophisticated foundation allows generative AI to thrive, demonstrating that behind every breakthrough is a history of dedicated advancement and development. In this blog, we’ll explore the top five strategies AWS partners use to maximize AWS infrastructure for generative AI, explained in a way that anyone can understand.

1. Harnessing Low-Latency, High-Performance Networking

Generative AI models rely on massive amounts of data to learn and generate accurate predictions. Efficiently managing and processing this data requires advanced networking technologies that facilitate fast and reliable data movement across the cloud infrastructure. AWS partners leverage these specialized networking solutions to optimize performance and enhance the capabilities of their generative AI applications.

Elastic Fabric Adapter (EFA): EFA acts as a super-fast highway for data, enabling rapid data transfer by bypassing traditional network bottlenecks. When training generative AI models, which often involves processing large datasets and requiring frequent communication between multiple servers, EFA ensures data reaches its destination swiftly. This accelerated data movement is crucial for training complex AI models efficiently.

Scalable Reliable Datagram (SRD): SRD functions like a high-speed courier service for data packets, ensuring quick and reliable delivery. Working in tandem with EFA, SRD guarantees that data packets are not only transferred rapidly but also consistently, which is vital for maintaining the accuracy and performance of AI models. This combination of speed and reliability is essential for efficient model training and inference.

UltraCluster Networks: Imagine a vast network of interconnected supercomputers, each linked by ultra-fast and dependable cables. UltraCluster Networks are designed to support thousands of high-performance GPUs (graphics processing units), providing the computational power needed for training large-scale generative AI models. These networks offer ultra-low latency, meaning there is minimal delay in data transfer, significantly accelerating the training process and enabling faster model iterations.

2. Enhancing Energy Efficiency in Data Centers

Operating AI models demands substantial electrical power, which can be costly and environmentally impactful. AWS partners leverage AWS’s advanced data centers to boost energy efficiency and reduce their environmental footprint.

Innovative Cooling Solutions: Data centers house thousands of servers that generate considerable heat during operation. AWS employs advanced air and liquid cooling technologies to efficiently regulate server temperatures. Liquid cooling, resembling a car’s radiator system, effectively manages heat from high-power components, significantly lowering overall energy consumption.

Environmentally Responsible Construction: AWS prioritizes sustainability by constructing data centers with eco-friendly materials such as low-carbon concrete and steel. These materials not only diminish environmental impact during construction but also throughout the data centers’ operational life. This commitment helps AWS partners in cutting down carbon emissions and promoting environmental responsibility.

Simulation and Optimization: Prior to constructing a new data center, AWS conducts detailed computer simulations to predict and optimize its performance. This simulation-driven approach enables AWS to strategically place servers and cooling systems, maximizing operational efficiency. Similar to planning a building’s layout in a virtual environment, this ensures minimal energy usage and operational costs while maintaining optimal performance.

3. Ensuring Robust Security

Security is paramount for AWS partners, particularly when handling sensitive data essential for generative AI models. AWS implements a suite of advanced security measures to protect data and ensure compliance with stringent regulations.

AWS Nitro System: Serving as a vigilant guardian, the AWS Nitro System enforces rigorous isolation between customer workloads and AWS infrastructure. It features secure boot capabilities that prevent unauthorized software from executing on servers, thereby maintaining data integrity and confidentiality.

Nitro Enclaves: Within servers, Nitro Enclaves establish secure, isolated compute environments. Integrated with AWS Key Management Service (KMS) through cryptographic attestation, they allow sensitive data to be decrypted and processed only inside the enclave, which acts like a digital safe that shields the data from exposure.

End-to-End Encryption: AWS employs robust encryption methods to secure data both at rest and in transit across its infrastructure. This comprehensive approach ensures data remains protected with stringent access controls, bolstering security against unauthorized access.

Compliance and Certifications: AWS adheres strictly to global security standards and holds numerous certifications, underscoring its commitment to data protection and regulatory compliance. These certifications reassure customers of AWS’s capability to safeguard their data with the highest security measures in place.

4. Harnessing Specialized AI Chips

Efficient operation of AI models relies heavily on specialized hardware. AWS partners harness purpose-built AI chips from AWS to optimize the performance and cost-effectiveness of their generative AI applications.

Strategic Collaborations: AWS collaborates closely with industry leaders such as NVIDIA and Intel to provide a diverse range of accelerators. These collaborations ensure that AWS partners have access to cutting-edge hardware tailored to their specific AI needs.

Continuous Innovation: AWS continues to lead in AI hardware development. For example, the upcoming Trainium2 chip promises even faster training speeds and improved energy efficiency. This ongoing innovation enables AWS partners to maintain a competitive advantage in the dynamic field of AI.

5. Enhancing Scalability in AI Infrastructure

Scalability is crucial for the success of generative AI applications, which often face unpredictable computing demands. AWS provides a versatile and resilient infrastructure that empowers partners to dynamically adjust resources to meet evolving requirements.

Auto Scaling: AWS’s Auto Scaling feature automatically adjusts computing resources based on application demand. When an AI workload requires more processing power, Auto Scaling efficiently adds servers to maintain optimal performance. This capability ensures consistent application responsiveness and efficiency, supporting uninterrupted operations.

Elastic Load Balancing (ELB): ELB evenly distributes incoming traffic across multiple servers to prevent any single server from becoming overwhelmed. By intelligently distributing workloads, ELB optimizes resource allocation, enhancing the overall performance and reliability of AI applications. This ensures seamless operation even during periods of peak usage.

Amazon S3 (Simple Storage Service): S3 offers scalable storage solutions for securely storing and retrieving large volumes of data as needed. Acting as a flexible digital repository, S3 effectively manages diverse data requirements, seamlessly supporting the storage and retrieval needs of AI applications.

Amazon EC2 (Elastic Compute Cloud): EC2 provides resizable compute capacity in the cloud, enabling partners to deploy and scale virtual servers rapidly in response to fluctuating workload demands. This flexibility is crucial for iterative model testing, experimentation, and efficient scaling of production environments, facilitating agile development and deployment of AI applications.
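
The idea behind Auto Scaling, adding or removing servers as demand changes, can be sketched with a simple proportional rule. This is a simplified illustration in the spirit of target-based scaling and not the actual algorithm AWS uses; the function name, the default bounds, and the example metric (average CPU utilization) are assumptions.

```python
import math


def desired_capacity(current, metric, target, min_size=1, max_size=10):
    """Proportional scaling rule: grow or shrink so the metric approaches `target`."""
    if current <= 0 or target <= 0:
        raise ValueError("current and target must be positive")
    proposed = math.ceil(current * metric / target)
    # Clamp to the group's configured bounds.
    return max(min_size, min(max_size, proposed))
```

For example, four servers averaging 90% CPU against a 60% target would be scaled out to six, while the same group at 30% CPU would be scaled in to two. Real scaling policies also apply cooldowns and warm-up periods so that capacity does not oscillate.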

Conclusion

AWS Partner Companies are leveraging AWS’s advanced infrastructure to push the boundaries of what’s possible with generative AI. By utilizing low-latency networking, enhancing energy efficiency, ensuring robust security, leveraging specialized AI chips, and implementing scalable infrastructure, they can deliver high-performance, cost-effective, and secure AI solutions. These strategies not only help in achieving technological advancements but also ensure that AI applications are sustainable and accessible to a wide range of industries. As generative AI continues to evolve, AWS and its partners will remain at the forefront, driving innovation and transforming how we interact with technology.

Everything You Need To Know About Amazon Connect

Amazon Web Services (AWS) has solidified its position as a leading cloud service provider, offering businesses a wide array of tools for communication, data management, and beyond. At the forefront of these services is Amazon Connect, a robust omnichannel contact center solution designed to elevate customer service and operational efficiency. Powered by AI, Amazon Connect equips businesses with scalable tools for managing customer interactions seamlessly across various channels. Discover how Amazon Connect revolutionizes customer engagement and enhances organizational productivity in this comprehensive guide.

What is Amazon Connect?

Over the years, AWS has expanded its offerings to give businesses a broad suite of tools for communication, data management, analytics, and more. Among these offerings is Amazon Connect, an omnichannel contact center service that gives businesses of all sizes an easy-to-use, cloud-based contact center for improving customer service and operational efficiency.

Amazon Connect is an AI-powered cloud contact center designed to help companies meet and exceed evolving customer expectations. It offers omnichannel support, productivity tools for agents, and advanced analytics. With Amazon Connect, businesses can quickly set up a fully functional cloud contact center that scales to accommodate millions of customers worldwide. The platform equips organizations with essential customer experience (CX) management tools, enabling them to deliver superior service and stay competitive in a dynamic market.

Features for Enhanced Customer Engagement & Operational Efficiency

1. Unified Agent Workspace and Task Management

Amazon Connect consolidates agent-facing tools into a single workspace, enhancing efficiency with real-time case information and AI-driven recommendations. Agents manage tasks alongside calls and chats, ensuring streamlined workflows and effective customer service.

2. Advanced Customer Interaction Tools

Utilizing AI-powered chatbots and messaging, Amazon Connect supports seamless customer interactions across various channels like web chat, SMS, and third-party apps. Self-service capabilities empower customers while preserving context for agents, ensuring smooth transitions and personalized service.

3. Comprehensive Performance Monitoring with Contact Lens

Contact Lens monitors and improves contact quality and agent performance through conversational analytics. It analyzes customer interactions for sentiment, compliance, and trends, supporting agent evaluations with AI-driven insights and screen recordings.

4. Intelligent Forecasting and Resource Management

Machine learning-powered forecasting, capacity planning, and scheduling tools optimize staffing levels and agent productivity. They predict contact volumes, allocate resources efficiently, and generate flexible schedules aligned with service-level targets.

5. Enhanced Security and Customer Insights

Amazon Connect Voice ID provides real-time caller authentication and fraud detection, ensuring secure interactions. Customer Profiles integrate external CRM data to create comprehensive customer views, enabling agents to deliver personalized service and resolve issues effectively.
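
To make the capacity-planning idea in feature 4 concrete, here is a minimal sketch of how a forecast contact volume can be turned into a staffing number for a service-level target. It uses the classic Erlang C queueing formula, which is a swapped-in textbook technique and not a description of how Amazon Connect's machine learning models work. The function names and the sample inputs are assumptions made for illustration.

```python
import math


def erlang_c_wait_probability(agents: int, traffic: float) -> float:
    """Probability that a contact has to wait, given `traffic` in Erlangs."""
    if agents <= traffic:
        return 1.0  # more work arrives than the staff can handle
    term = 1.0   # A^k / k! for k = 0
    total = 1.0  # running sum of A^k / k! for k < agents
    for k in range(1, agents):
        term *= traffic / k
        total += term
    # term is now A^(N-1) / (N-1)!; build A^N / N! * N / (N - A)
    top = term * traffic / agents * agents / (agents - traffic)
    return top / (total + top)


def service_level(agents: int, traffic: float,
                  aht_seconds: float, target_seconds: float) -> float:
    """Fraction of contacts answered within `target_seconds`."""
    if agents <= traffic:
        return 0.0
    wait = erlang_c_wait_probability(agents, traffic)
    return 1.0 - wait * math.exp(-(agents - traffic) * target_seconds / aht_seconds)


def required_agents(contacts: float, interval_seconds: float, aht_seconds: float,
                    target_seconds: float, target_level: float) -> int:
    """Smallest agent count that meets the service-level target."""
    if not 0.0 < target_level < 1.0:
        raise ValueError("target_level must be between 0 and 1")
    traffic = contacts * aht_seconds / interval_seconds  # offered load in Erlangs
    agents = int(traffic) + 1
    while service_level(agents, traffic, aht_seconds, target_seconds) < target_level:
        agents += 1
    return agents


# Illustrative inputs: 100 contacts per half hour, 3-minute average handle time,
# and a target of answering 80% of contacts within 20 seconds.
print(required_agents(100, 1800, 180, 20, 0.80))
```

In practice the forecast volume and handle time would come from historical contact data, and a real schedule would also account for breaks, shrinkage, and multiple channels, which this sketch ignores.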

Recent Developments in Amazon Connect and AWS

Amazon Web Services (AWS) is driving customer interactions forward with the latest enhancements to Amazon Connect, elevating customer service and technological innovation. Here are the key updates that underscore significant progress in this area:

1. Amazon Q: AI-Powered Virtual Assistant

Amazon Q, an AI-powered virtual assistant, enhances customer interactions by engaging in natural conversations, generating personalized content, and utilizing data for efficient communication. With over 40 integrated connectors, Amazon Q customizes interactions and resolves issues specific to business needs.

2. Real-Time Barge-in Capability for Chat Support

Amazon Connect’s real-time barge-in capability allows managers to swiftly join ongoing customer service chats, enabling immediate assistance for complex issues and enhancing overall support efficiency.

3. Outbound Campaigns Voice Dialing API

The outbound campaigns voice dialing API supports large-scale voice outreach, boosting communication options and agent productivity within Amazon Connect.

4. Enhanced Granular Access Controls

Amazon Connect introduces enhanced access controls for historical metrics, ensuring secure and managed data access restricted to authorized personnel only.

5. API-Driven Contact Priority Updates

Businesses can now programmatically adjust contact priorities via an API, facilitating real-time queue management from custom dashboards to address urgent issues promptly.

6. Agent Proficiency-Based Routing

Amazon Connect enables routing based on agent proficiencies, directing customers to the most qualified agents for efficient and effective interactions.

7. Zero-ETL Analytics Data Lake

The Zero-ETL analytics data lake in Amazon Connect bypasses traditional ETL processes, simplifying access to and management of contact center data. This accelerates data analysis, enabling businesses to derive insights quickly and make faster, more informed decisions.

8. No-Code UI Builder for Guided Experiences

Amazon Connect’s no-code UI builder empowers users to design step-by-step guides effortlessly through a user-friendly drag-and-drop interface. This feature not only streamlines the creation of interactive guides but also improves overall agent interface management by allowing for intuitive customization and updates without requiring extensive technical expertise.

9. Integrated In-App, Web, and Video Calling

In-app, web, and video calling integration in Amazon Connect facilitates personalized customer interactions directly through various platforms, improving engagement and convenience.

10. Generative AI-Powered Customer Profiling

Amazon Connect utilizes generative AI for rapid customer data mapping, delivering detailed profiles swiftly to enhance service delivery and customer satisfaction.

11. Efficient Two-Way SMS Communication

The two-way SMS integration enables efficient customer issue resolution via text, providing a convenient communication channel that enhances accessibility and responsiveness within Amazon Connect.
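
The routing ideas in items 5 and 6 above can be pictured with a small in-memory sketch. It is a conceptual illustration only and does not call the Amazon Connect API. The `Agent` and `Contact` classes, the proficiency scale, and the convention that a lower priority number means a more urgent contact are all assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Agent:
    name: str
    # Skill name -> proficiency level (a 1-5 scale is assumed here).
    proficiencies: dict = field(default_factory=dict)
    available: bool = True


@dataclass
class Contact:
    contact_id: str
    required_skill: str
    min_level: int
    priority: int  # lower number = more urgent (assumed convention)


def pick_agent(contact: Contact, agents: list) -> Optional[Agent]:
    """Return the available agent with the highest level in the required skill."""
    qualified = [
        a for a in agents
        if a.available
        and a.proficiencies.get(contact.required_skill, 0) >= contact.min_level
    ]
    if not qualified:
        return None
    return max(qualified, key=lambda a: a.proficiencies[contact.required_skill])


def route(contacts: list, agents: list) -> dict:
    """Assign contacts in priority order; each agent takes at most one contact."""
    assignments = {}
    for contact in sorted(contacts, key=lambda c: c.priority):
        agent = pick_agent(contact, agents)
        if agent is not None:
            agent.available = False
        assignments[contact.contact_id] = agent.name if agent else None
    return assignments


# Hypothetical data for illustration.
agents = [
    Agent("ana", {"billing": 5, "returns": 2}),
    Agent("ben", {"billing": 3, "returns": 4}),
]
contacts = [
    Contact("c1", "billing", min_level=3, priority=2),
    Contact("c2", "billing", min_level=3, priority=1),
    Contact("c3", "returns", min_level=3, priority=3),
]
print(route(contacts, agents))
```

Changing a contact's `priority` before calling `route` stands in for a programmatic priority update: the urgent contact is matched first and therefore gets the most proficient available agent.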

Why is it important to work with an AWS Partner Company?

Partnering with an AWS Partner Company grants businesses access to certified experts with specialized skills essential for maximizing AWS’s vast array of services. These partners possess in-depth knowledge of best practices and architectural patterns, ensuring top-notch performance, robust security, and cost efficiency. By engaging with a certified partner, organizations can expedite their cloud adoption journey, reduce risks, and implement scalable, reliable solutions specifically designed to meet their unique requirements.

Moreover, AWS Partner Companies offer end-to-end support, covering everything from initial consultation to deployment and ongoing management. They utilize advanced tools and methodologies to streamline processes such as migration, integration, and optimization. Their strong relationship with AWS provides businesses with priority access to the latest features, updates, and technical support, enabling them to stay competitive in a swiftly evolving cloud environment.

Optimizing Generative AI: Harnessing Flexibility in Model Selection

In the dynamic world of artificial intelligence, the key to unlocking unparalleled performance and innovation lies in selecting the right models for generative AI applications. Among the leading models, OpenAI’s GPT-4 stands out for its exceptional ability in natural language understanding and generation. It is widely used for developing sophisticated chatbots, automating content creation, and performing complex language tasks. Google’s BERT, with its bidirectional training approach, excels in natural language processing tasks like question answering and language inference, providing deep contextual understanding.

Another noteworthy model is OpenAI’s DALL-E 2, which generates high-quality images from textual descriptions, opening up new possibilities in creative fields such as art and design. Google’s T5 model simplifies diverse NLP tasks by converting them into a unified text-to-text format, offering versatility in translation, summarization, and beyond. For real-time object detection, the YOLO model is highly regarded for its speed and accuracy, making it ideal for applications in image and video analysis. Understanding and selecting the appropriate model is crucial for optimizing generative AI solutions to meet specific needs effectively.

The Significance of Model Selection in Generative AI

In the ever-evolving landscape of generative AI, a one-size-fits-all approach simply doesn’t cut it. For businesses eager to leverage AI’s potential, having a variety of models at their disposal is essential for several key reasons:

Drive Innovation

A diverse array of AI models ignites innovation. Each model brings unique strengths, enabling teams to tackle a wide range of problems and swiftly adapt to changing business needs and customer expectations.

Gain a Competitive Edge

Customizing AI applications for specific, niche requirements is crucial for standing out in the market. Whether the goal is a chat application that answers questions or a tool that generates summaries, fine-tuning AI models can provide a significant competitive advantage.

Speed Up Market Entry

In today’s fast-paced business world, speed is critical. A broad selection of models can accelerate the development process, allowing businesses to roll out AI-powered solutions quickly. This rapid deployment is particularly vital in generative AI, where staying ahead with the latest innovations is key to maintaining a competitive edge.

Maintain Flexibility

With market conditions and business strategies constantly shifting, flexibility is paramount. Having access to various AI models allows businesses to pivot swiftly and effectively, adapting to new trends or strategic changes with agility and resilience.

Optimize Costs

Different AI models come with different cost implications. By choosing from a diverse set of models, businesses can select the most cost-effective option for each application. For example, customer care may prioritize throughput and latency over accuracy, whereas research and development typically requires precision above all.

Reduce Risks

Relying solely on one AI model carries risk. A varied portfolio of models helps distribute that risk, ensuring that businesses remain resilient even if one approach fails. This strategy provides alternative solutions, safeguarding against potential setbacks.

Ensure Regulatory Compliance

Navigating the evolving regulatory landscape for AI, with its focus on ethics and fairness, can be complex. Different models have different implications for compliance. A wide selection allows businesses to choose models that meet legal and ethical standards, ensuring they stay on the right side of regulations.

In summary, leveraging a spectrum of AI models not only drives innovation and competitiveness but also enhances flexibility, cost-efficiency, risk management, and regulatory compliance. For businesses looking to harness the full power of generative AI, variety isn’t just beneficial—it’s essential.
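
Two of the points above, cost optimization and risk reduction, lend themselves to a short sketch. The example below picks the cheapest model that satisfies a use case's latency and accuracy limits, then falls back to the next option if a call fails. The model names, prices, latencies, and accuracy figures are invented for illustration, and `invoke` is a hypothetical callable standing in for whatever client a team actually uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    cost_per_1k_tokens: float  # illustrative price, not a real quote
    p95_latency_ms: int
    accuracy: float            # 0..1, measured on your own evaluation set


def rank_models(options, max_latency_ms, min_accuracy):
    """Return the options that meet both constraints, cheapest first."""
    eligible = [
        m for m in options
        if m.p95_latency_ms <= max_latency_ms and m.accuracy >= min_accuracy
    ]
    return sorted(eligible, key=lambda m: m.cost_per_1k_tokens)


def call_with_fallback(ranked, invoke, prompt):
    """Try each model in order; return (name, output) from the first success."""
    errors = []
    for model in ranked:
        try:
            return model.name, invoke(model.name, prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{model.name}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


# Hypothetical catalog for illustration.
CATALOG = [
    ModelOption("small-fast", 0.2, 300, 0.82),
    ModelOption("mid-general", 1.0, 800, 0.90),
    ModelOption("large-precise", 5.0, 2500, 0.96),
]

# Customer care favors latency and cost; research favors accuracy.
customer_care = rank_models(CATALOG, max_latency_ms=1000, min_accuracy=0.80)
research = rank_models(CATALOG, max_latency_ms=5000, min_accuracy=0.95)
print([m.name for m in customer_care], [m.name for m in research])
```

The same catalog yields different choices per use case, which is the practical payoff of keeping several models available.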

Choosing the Optimal AI Model

Navigating the expansive array of AI models can be daunting, but a strategic approach can streamline the selection process and lead to exceptional results. Here’s a methodical approach to overcoming the challenge of selecting the right AI model:

Define Your Specific Use Case

Begin by clearly defining the precise needs and objectives of your business application. Craft detailed prompts that capture the unique intricacies of your industry. This foundational step ensures that the AI model you choose aligns perfectly with your business goals and operational requirements.

Compile a Comprehensive List of Models

List a diverse range of candidate AI models and compare them on essential criteria such as size, accuracy, latency, and associated risks. Understanding the strengths and weaknesses of each model enables you to balance factors like precision and computational efficiency effectively.

Assess Model Attributes for Fit

Evaluate the scale of each AI model in relation to your specific use case. While larger models may offer extensive capabilities, smaller, specialized models can often deliver superior performance with faster processing times. Optimize your choice by selecting a model size that best suits your application’s unique demands.

Conduct Real-World Testing

Validate the performance of selected models under conditions that simulate real-world scenarios in your operational environment. Utilize recognized benchmarks and industry-specific datasets to assess output quality and reliability. Implement advanced techniques such as prompt engineering and iterative refinement to fine-tune the model for optimal performance.

Refine Choices Based on Cost and Deployment

After rigorous testing, refine your selection based on practical considerations such as return on investment, deployment feasibility, and operational costs. Consider additional benefits such as reduced latency or enhanced interpretability to maximize the overall value that the model brings to your organization.

Select the Model Offering Maximum Value

Make your final decision based on a balanced evaluation of performance, cost-effectiveness, and risk management. Choose the AI model that not only meets your specific use case requirements but also aligns seamlessly with your broader business strategy, ensuring it delivers maximum value and impact.

Following this structured approach will simplify the complexity of AI model selection and empower your organization to achieve significant business outcomes through advanced artificial intelligence solutions.
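
The final steps of this process, weighing test results against cost and picking the best overall value, can be sketched as a simple weighted score. This is one possible approach under stated assumptions, not a prescribed method: the metric names, weights, and sample results below are invented for illustration, and each metric is rescaled to the 0-1 range so that different units can be combined.

```python
def normalize(values, higher_is_better=True):
    """Rescale values to 0..1 so that 1.0 is always the best candidate."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0 for _ in values]  # all candidates tie on this metric
    scaled = [(v - lo) / (hi - lo) for v in values]
    return scaled if higher_is_better else [1.0 - s for s in scaled]


def score_models(results, weights):
    """Combine quality, latency, and cost into one weighted score per model."""
    names = list(results)
    quality = normalize([results[n]["quality"] for n in names])
    latency = normalize([results[n]["latency_ms"] for n in names],
                        higher_is_better=False)
    cost = normalize([results[n]["cost"] for n in names],
                     higher_is_better=False)
    return {
        name: weights["quality"] * quality[i]
        + weights["latency"] * latency[i]
        + weights["cost"] * cost[i]
        for i, name in enumerate(names)
    }


# Hypothetical test results gathered during real-world testing.
EXAMPLE_RESULTS = {
    "model-a": {"quality": 0.92, "latency_ms": 1800, "cost": 4.0},
    "model-b": {"quality": 0.88, "latency_ms": 600, "cost": 1.0},
    "model-c": {"quality": 0.80, "latency_ms": 300, "cost": 0.5},
}
# Assumed weights; a team would set these from its own priorities.
WEIGHTS = {"quality": 0.5, "latency": 0.25, "cost": 0.25}

scores = score_models(EXAMPLE_RESULTS, WEIGHTS)
best = max(scores, key=scores.get)
print(best, round(scores[best], 3))
```

Shifting the weights toward quality alone would favor the most accurate model instead, which shows how the choice follows from the use case defined in the first step.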

Conclusion